The Force Engine development news and updates.

01 Jan 2024

Table of Contents

- 2023 Retrospective

- 2024 Plans

2023 Retrospective

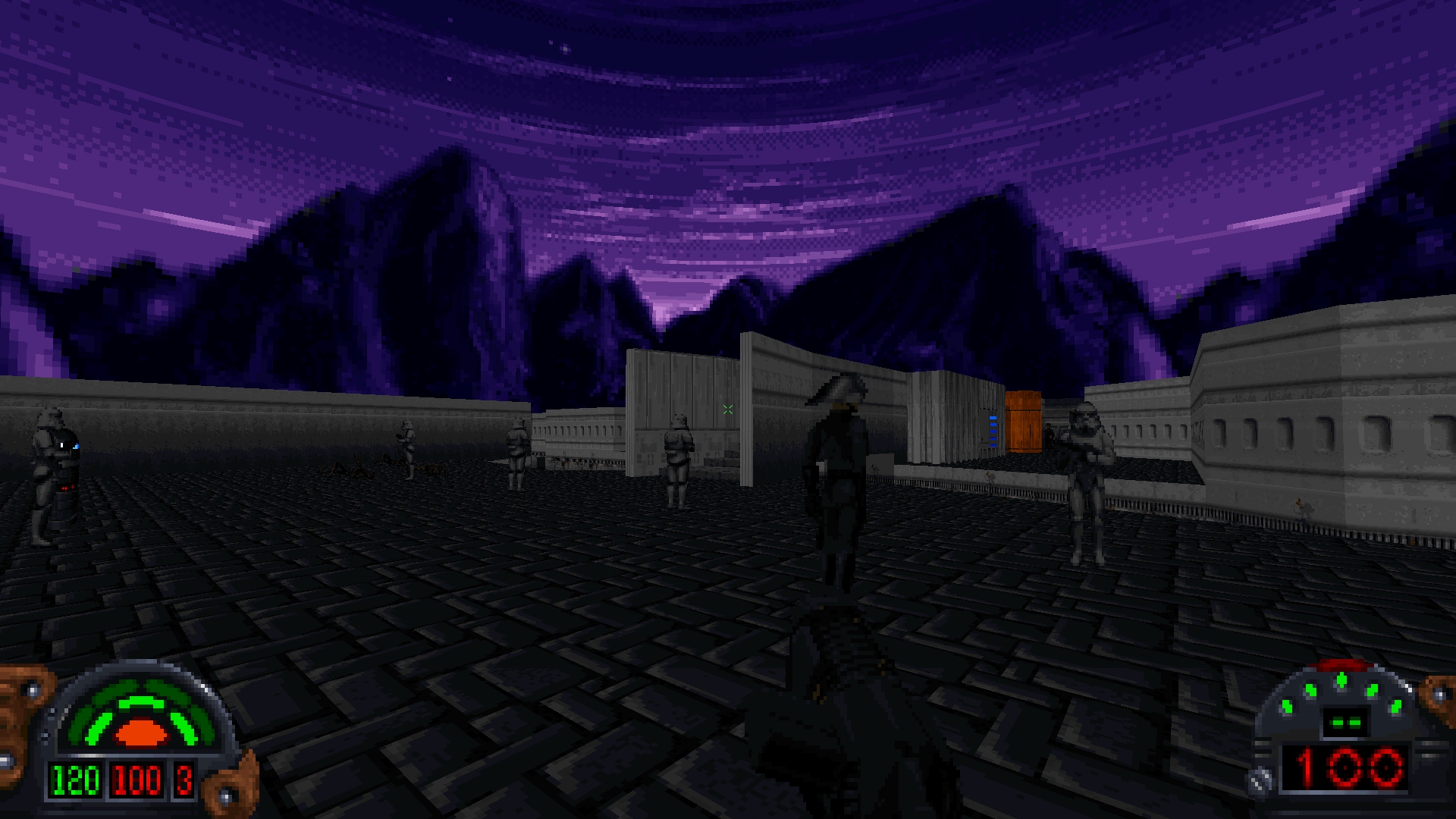

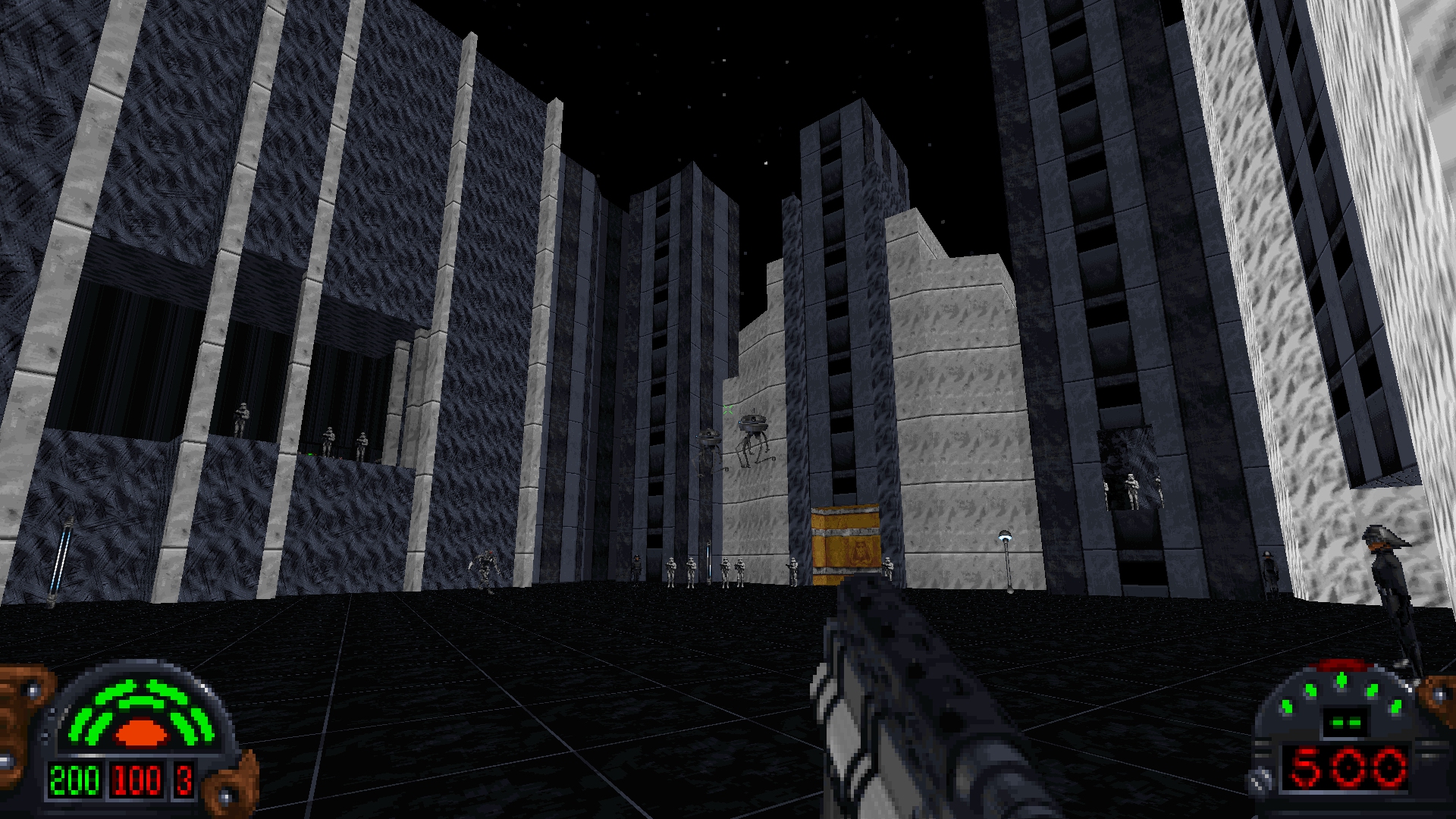

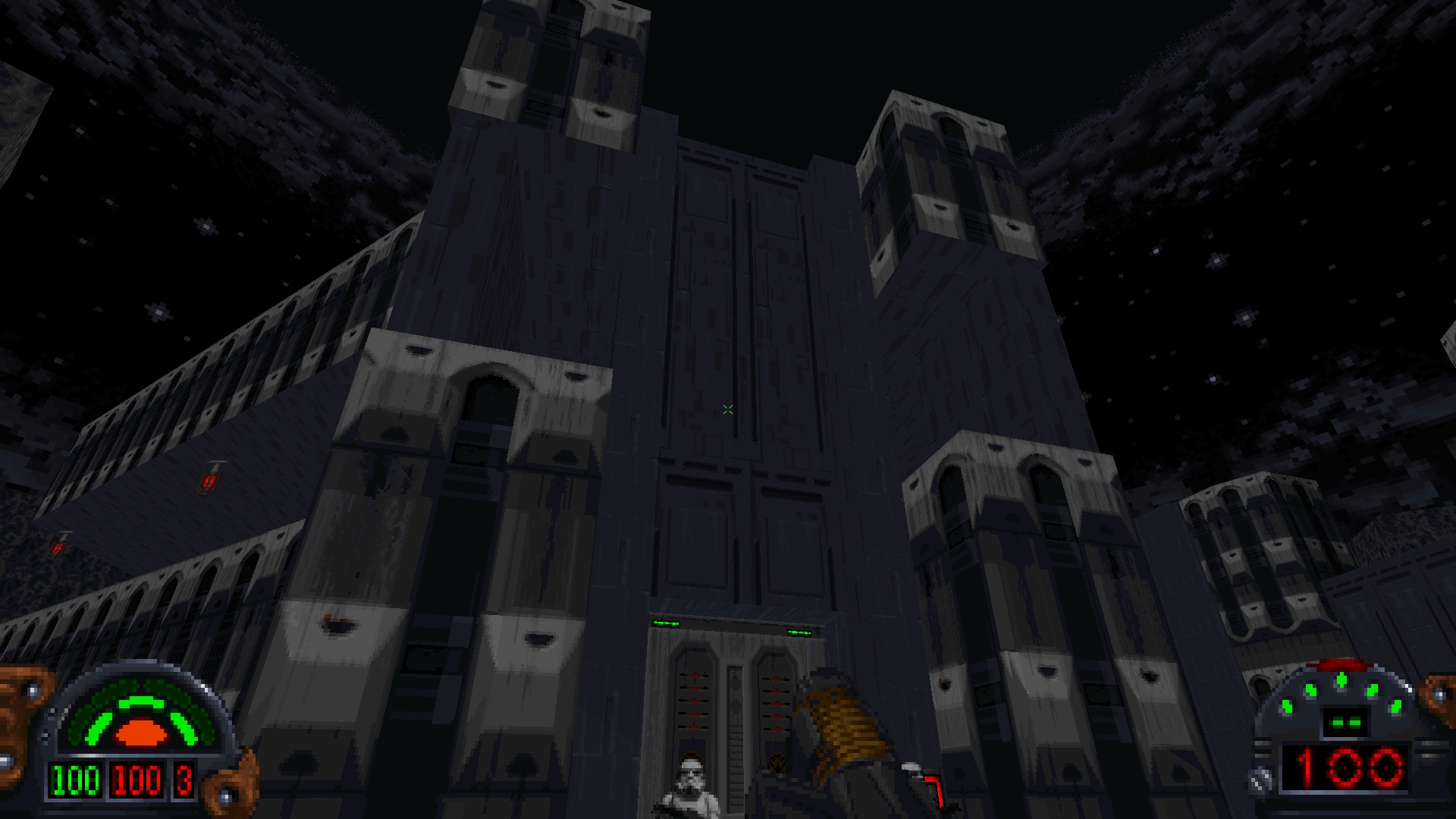

With the release of The Force Engine (TFE) version 1.0 at the end of 2022, I had big plans for 2023. Unfortunately, as often happens in these cases, the year was pretty busy and development proceeded at a slower pace overall. That said, several key milestones were reached in 2023 that set up 2024 features and pave the way toward version 2.0 - Outlaws support.

Version 1.02 - January 16

The version 1.0 release exposed many bugs and issues with TFE, so a few weeks after the version 1.0 milestone, a large bug-fix build was released. It included quality-of-life features like Alt+Enter for fullscreen, the framerate limiter, and improvements to the audio system. It also featured over two dozen bug fixes.

Version 1.08 - February 6

Version 1.08 was the first time Linux was officially supported, thanks in large part to the contributions of Manuel Lauss. He also contributed fixes for running Dark Forces in different languages, and did the initial pass at removing hardcoded 3DO limits (which was further modified later).

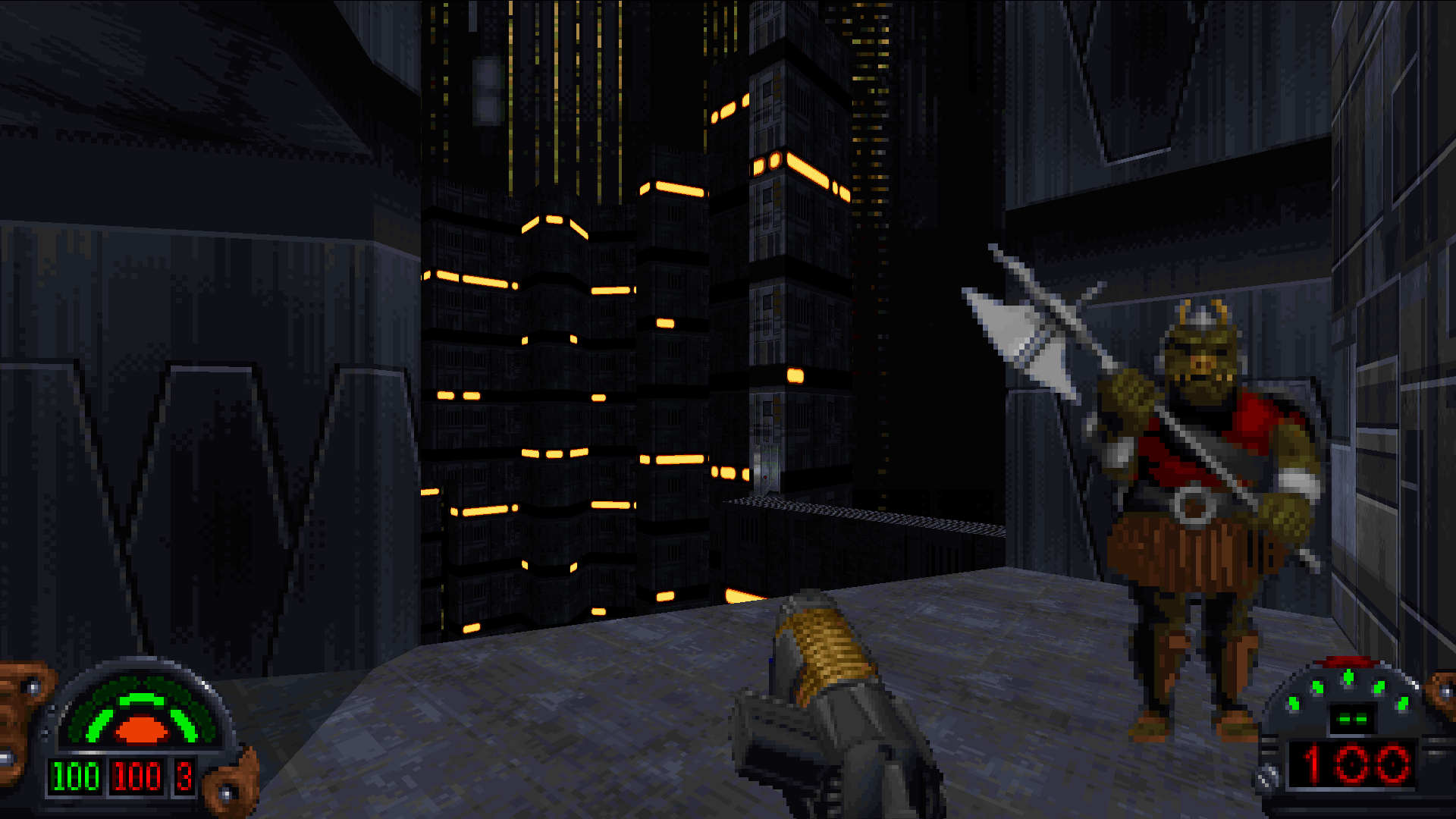

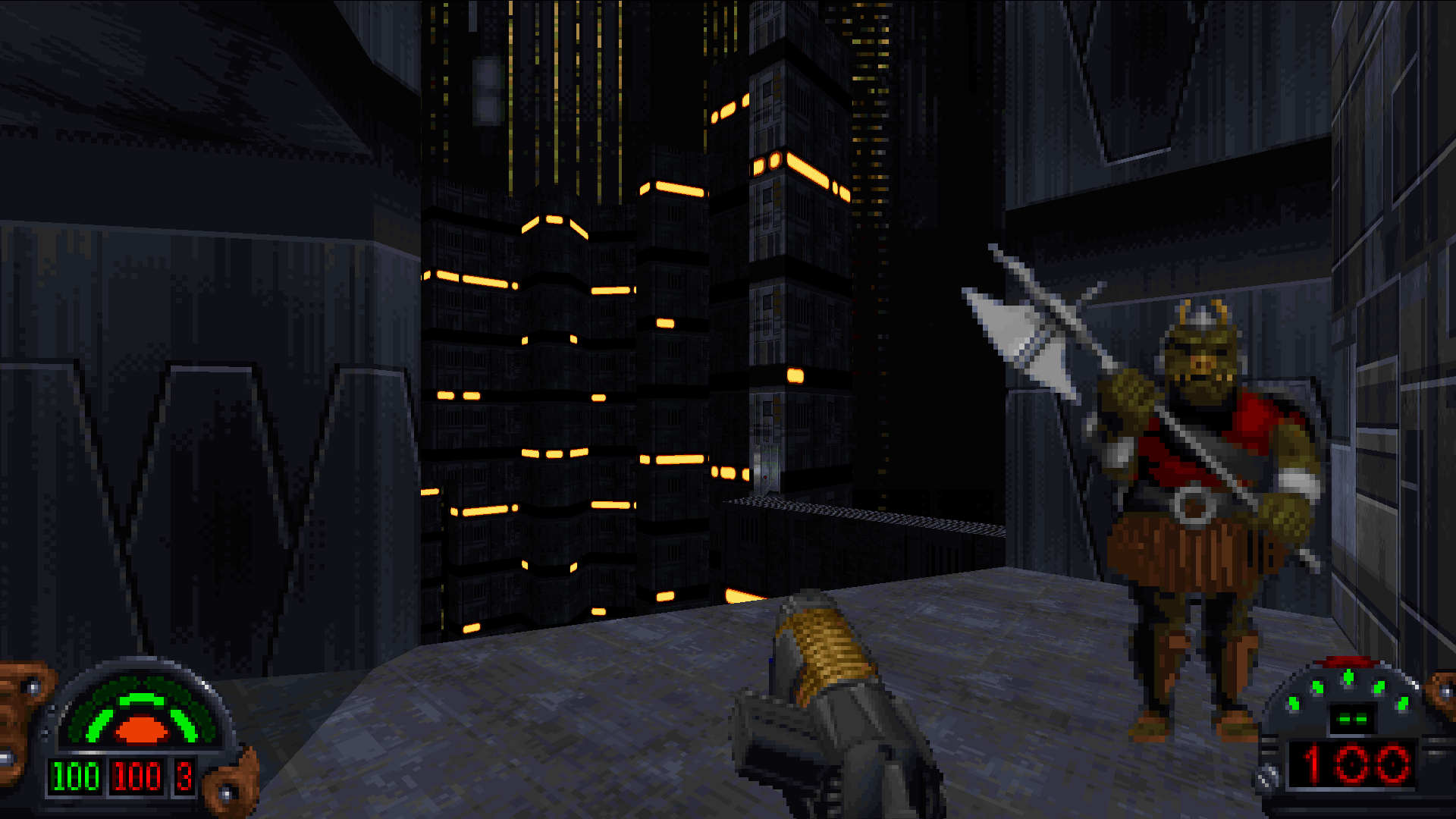

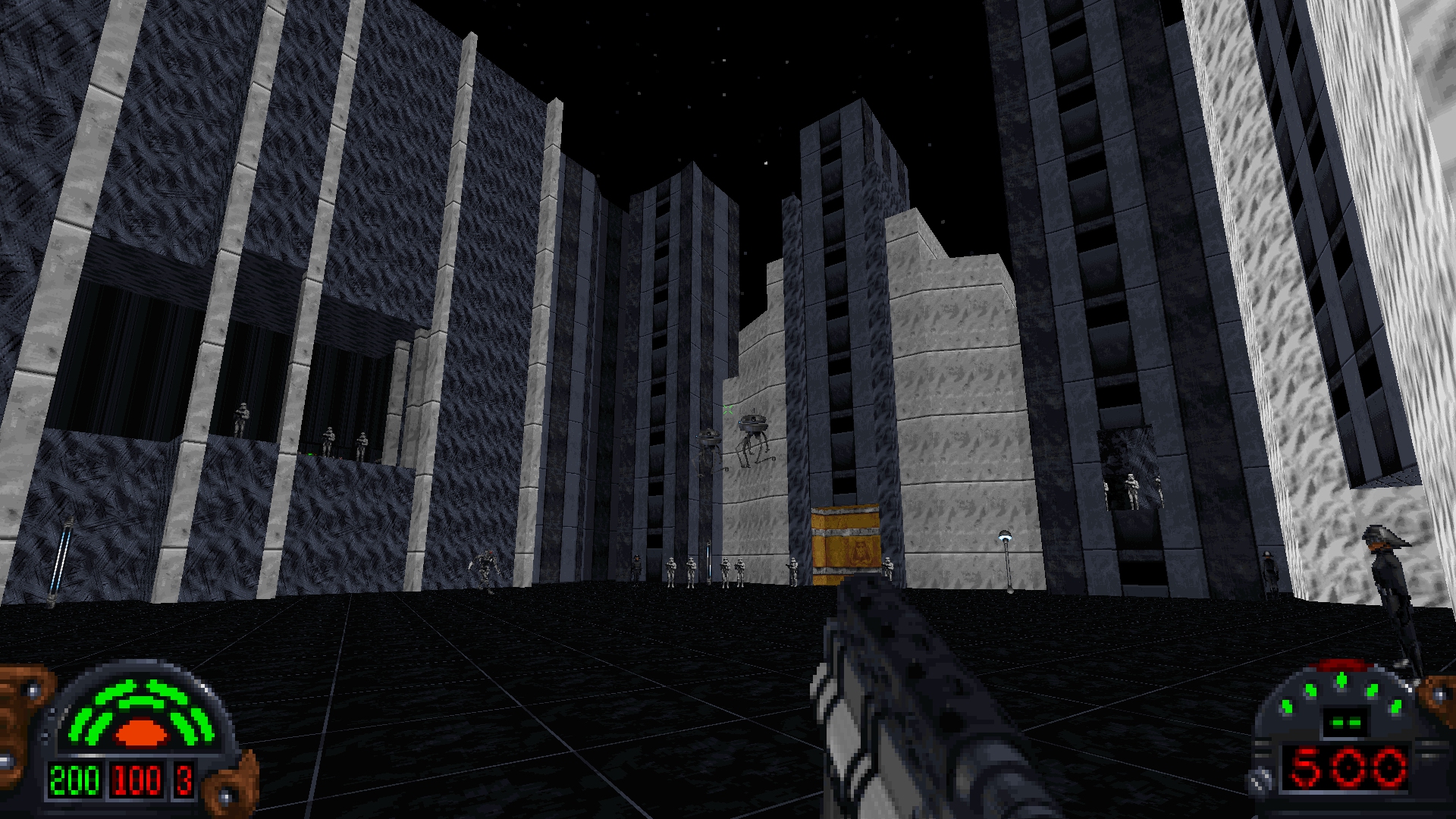

This release also saw more bug fixes, such as fixing HOM (hall of mirrors) artifacts in Executor when using the GPU Renderer, fixing font rendering issues, fixing the Gamorrean Guard so he attacks properly, similar fixes for the Sewer Creatures, more work on the midi system, and fixes for more crashes.

Version 1.09 - February 15

This version added midi synthesis using Sound Font 2 (SF2) data. It also added proper OPL 3 emulation, which became the new default, making the music sound similar to playing Dark Forces through DOSBox.

Version 1.09.2 - May 26, Version 1.09.3 - July 4

During this time, work on the project was slow, but feature work was happening in the background. These two releases fixed dozens of additional bugs and issues found throughout the year.

Version 1.09.4 - July 31

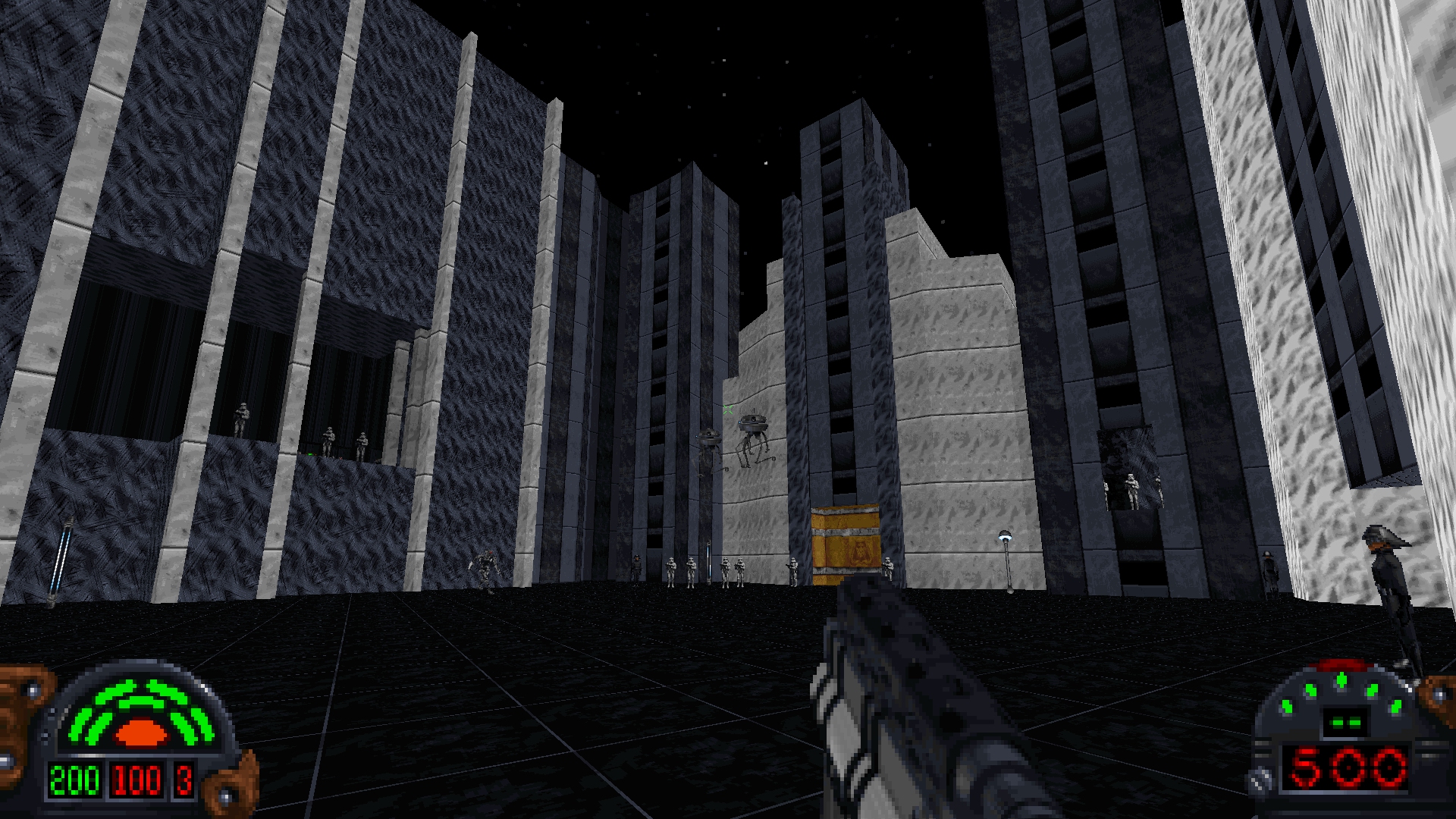

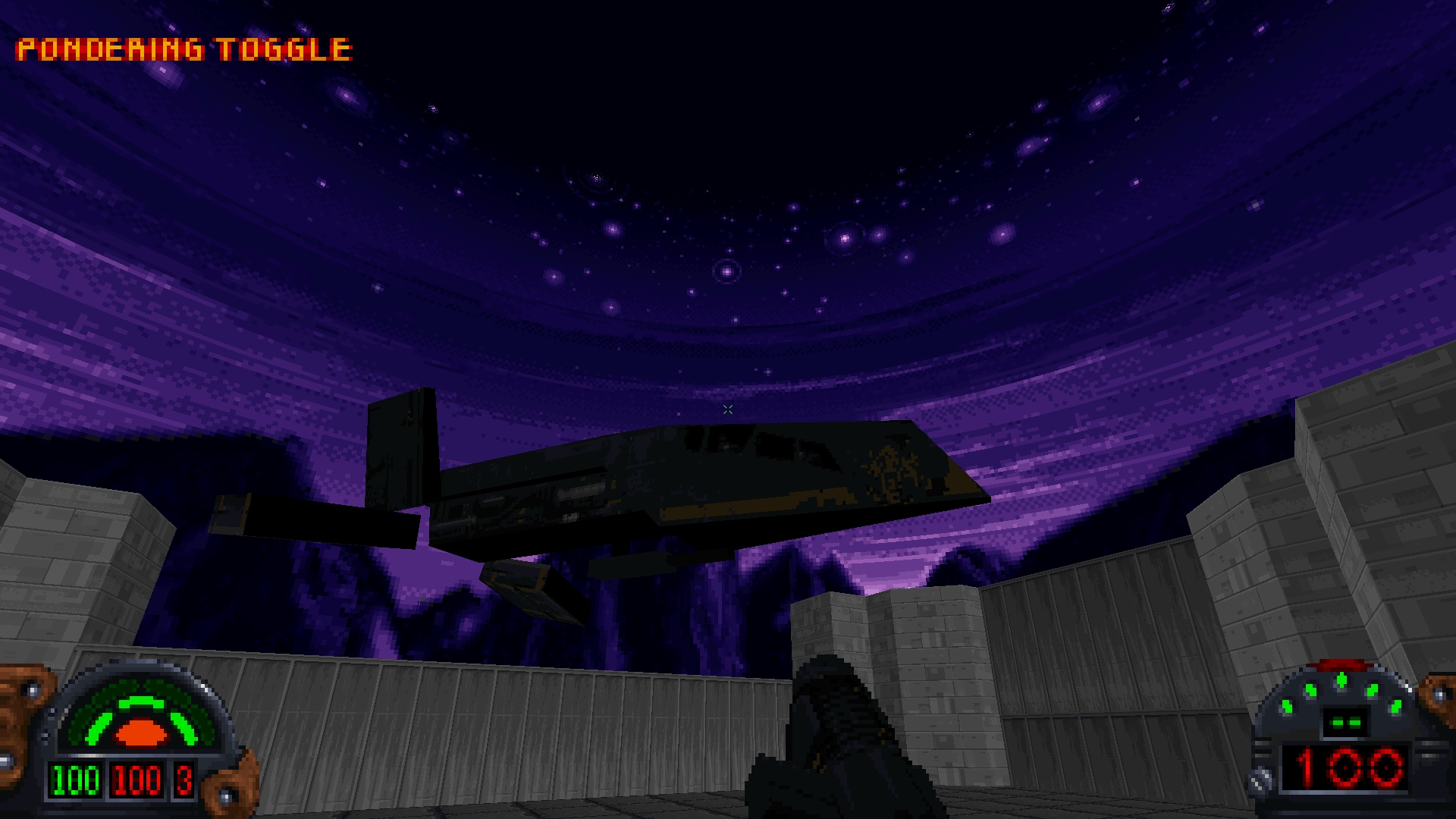

Kevin Foley made large contributions to this release with Smooth Vue Animations, which interpolated rotation, translation, and scale for Vue animated objects - such as flying ships. This greatly enhances the animation quality. He also added the Beta version of the Closed Captions feature.

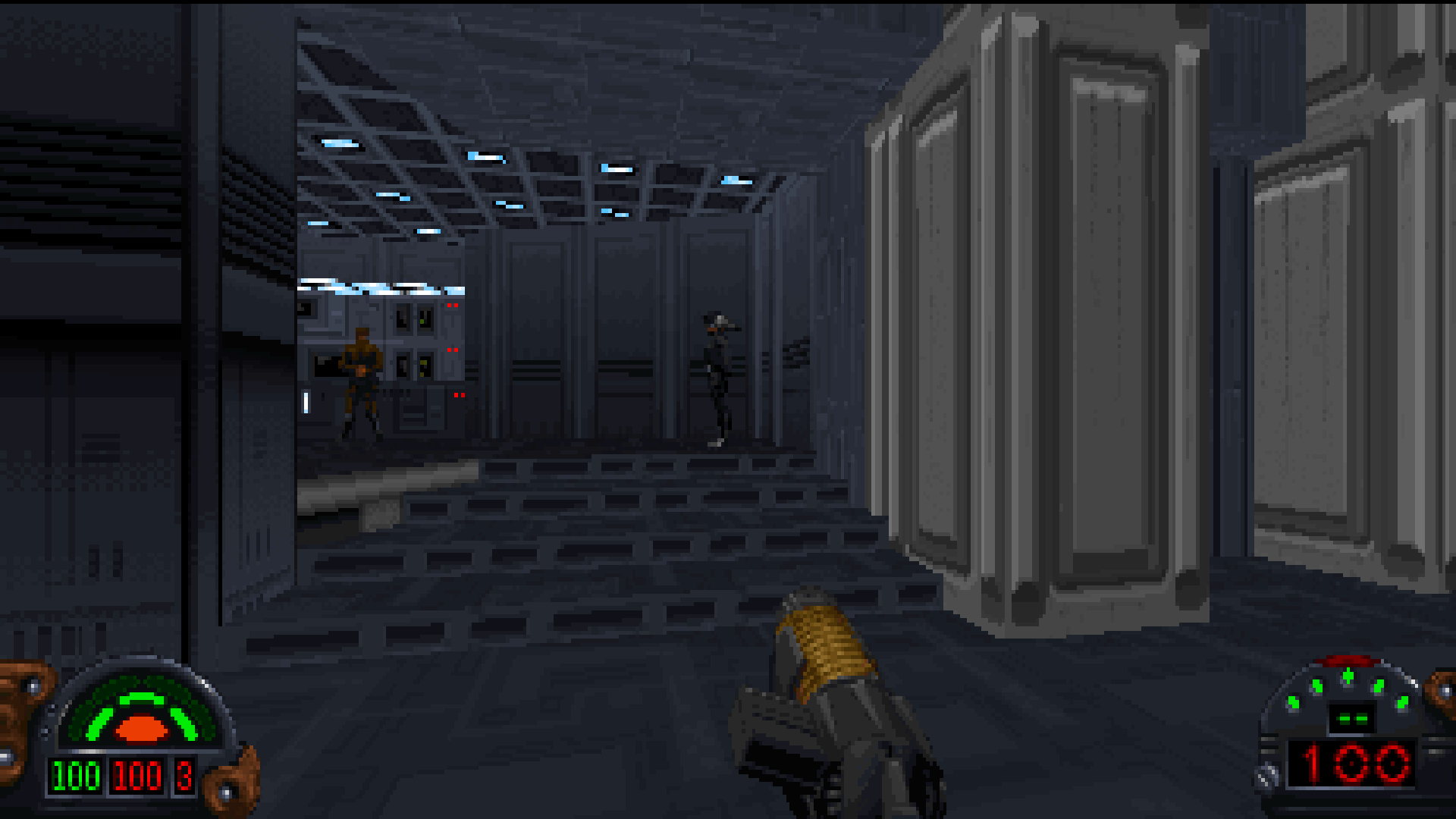

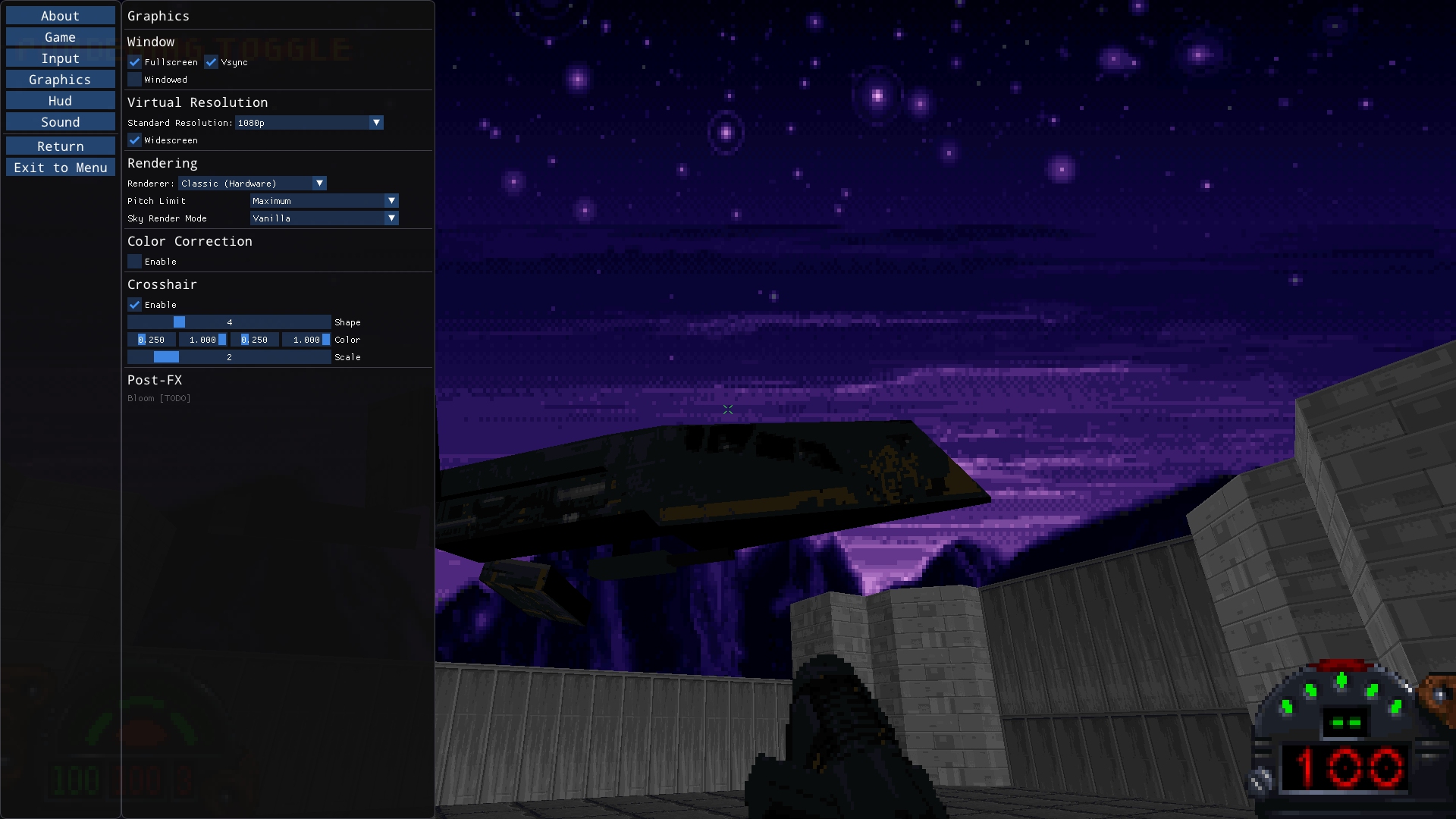

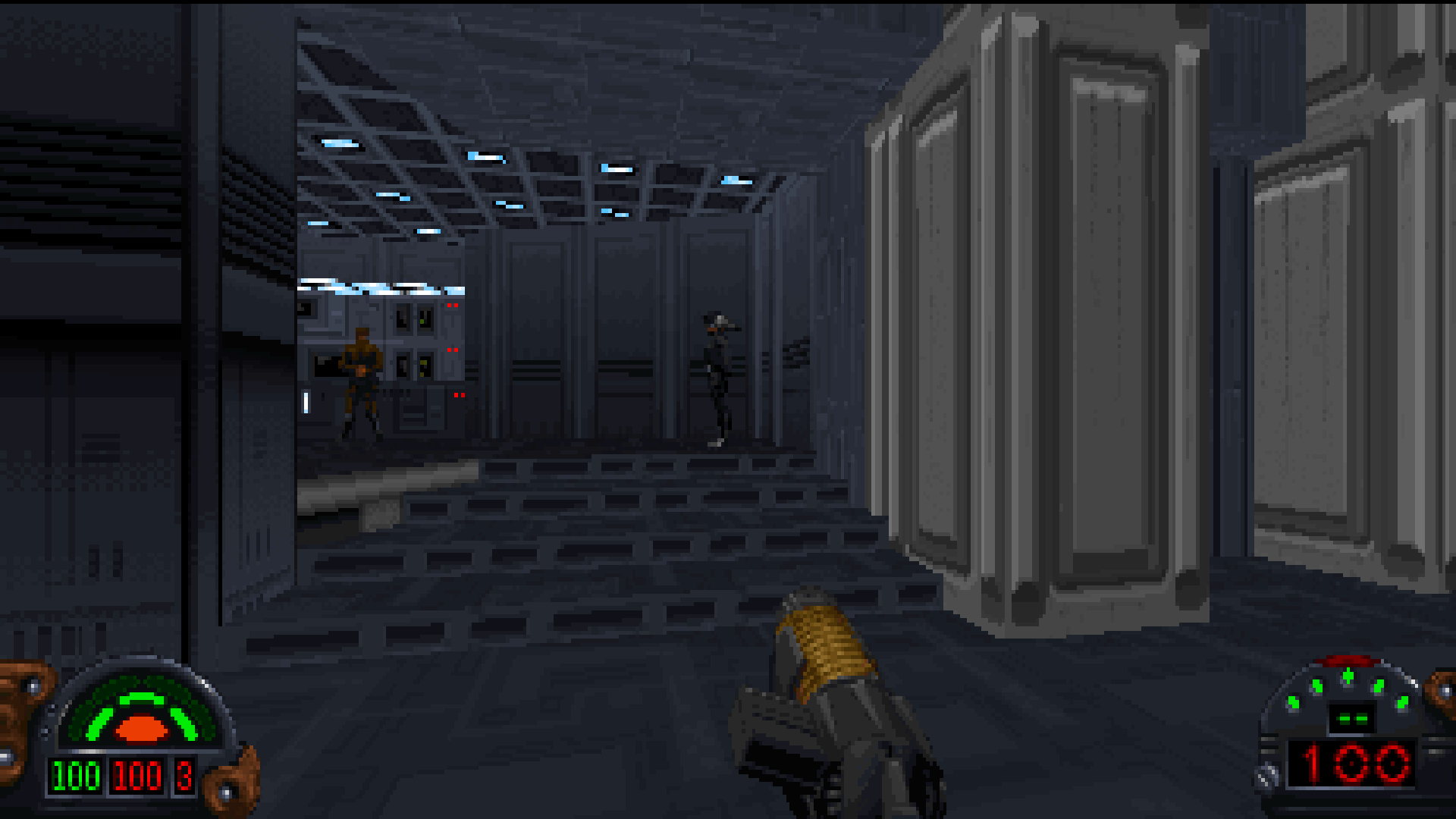

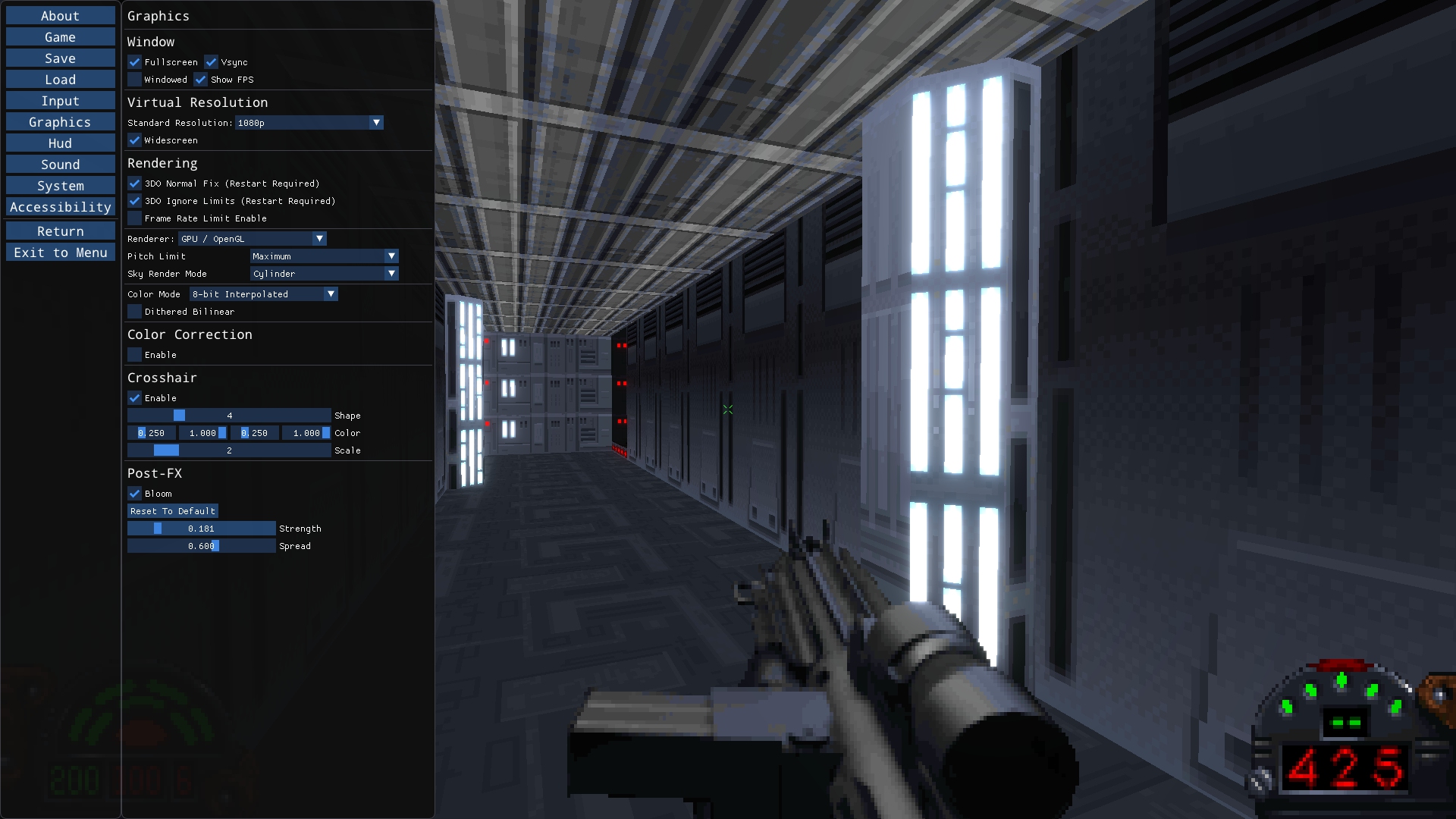

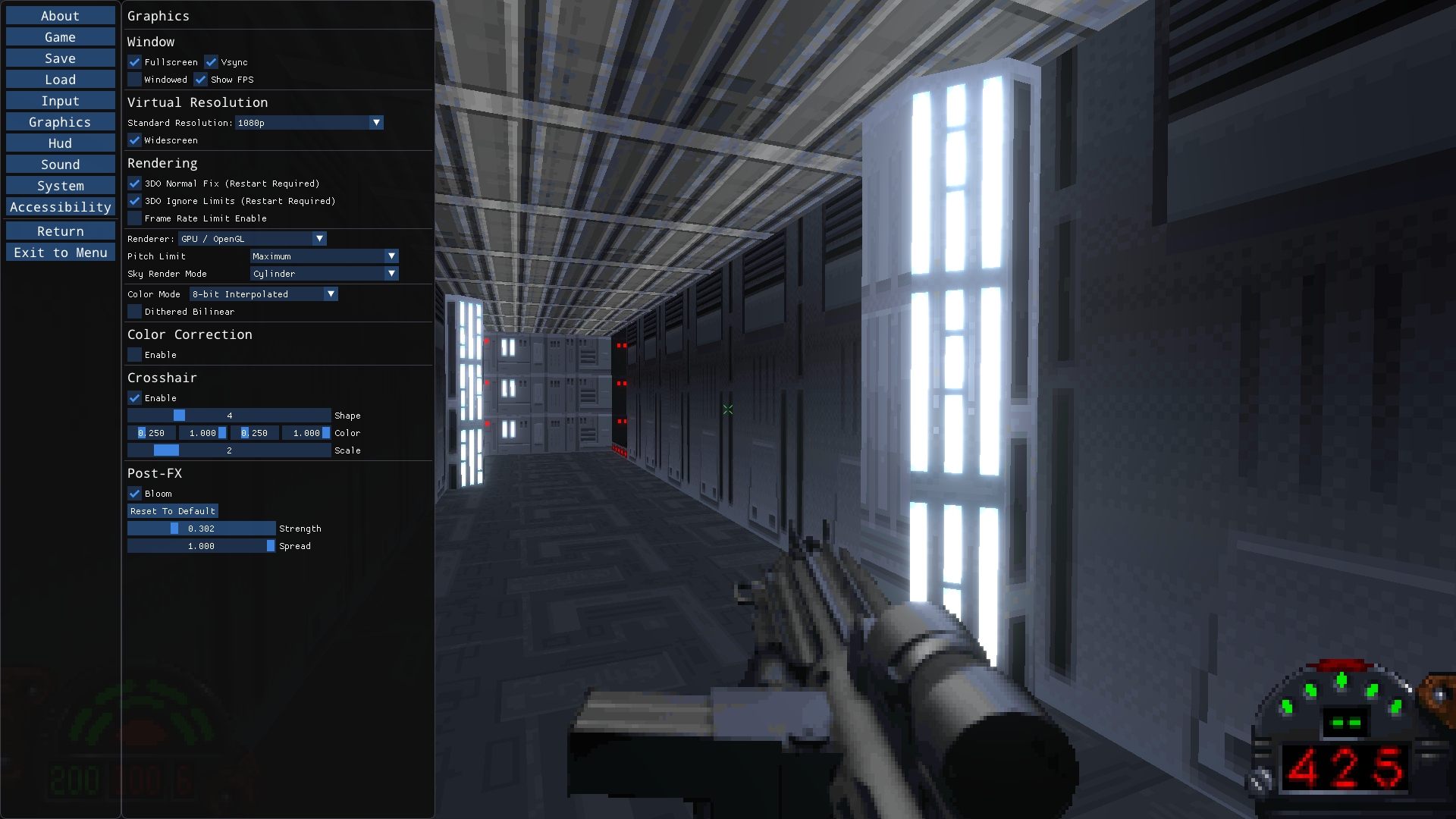

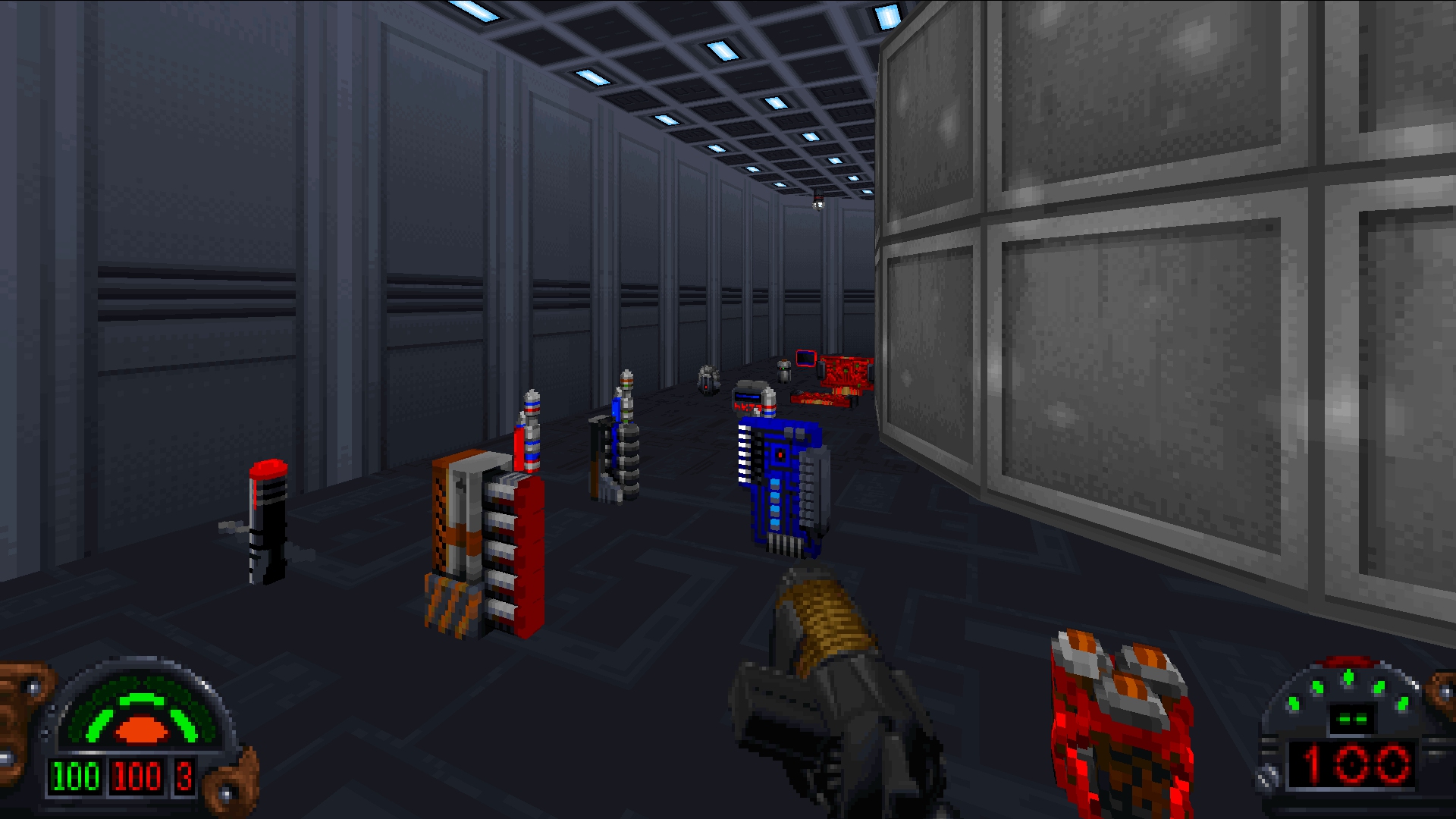

In addition to contributions by Kevin and Manuel, I implemented a number of improvements to the GPU renderer, allowing for up to 65536 visible portals in a frame (as originally intended) and properly handling more than 65536 visible sector vertices in a frame. I also improved the debug view modes, added settings templates, added the 8-bit interpolated color mode, and finally added the post-processing system and bloom.

Version 1.09.5 - September 21

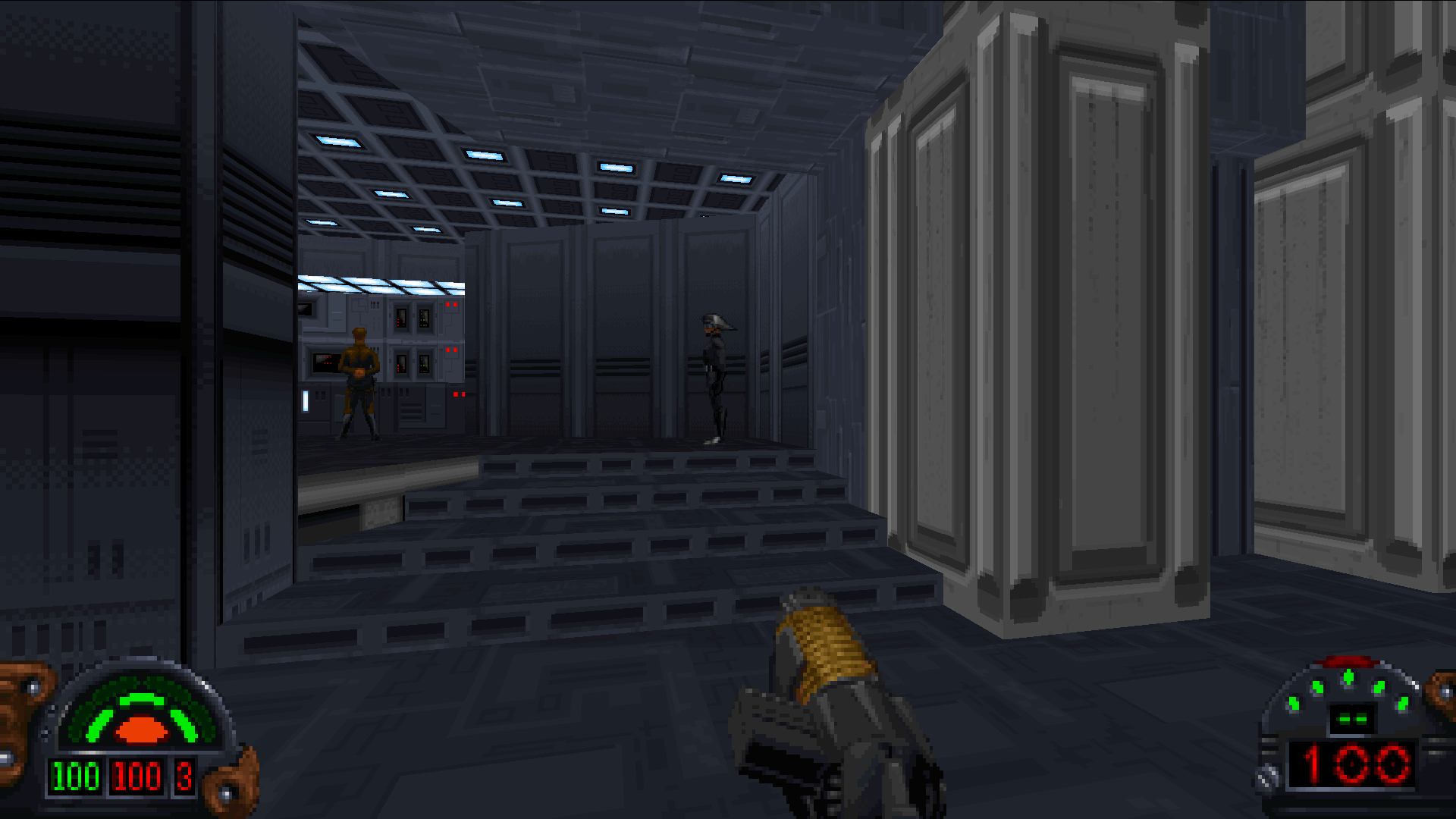

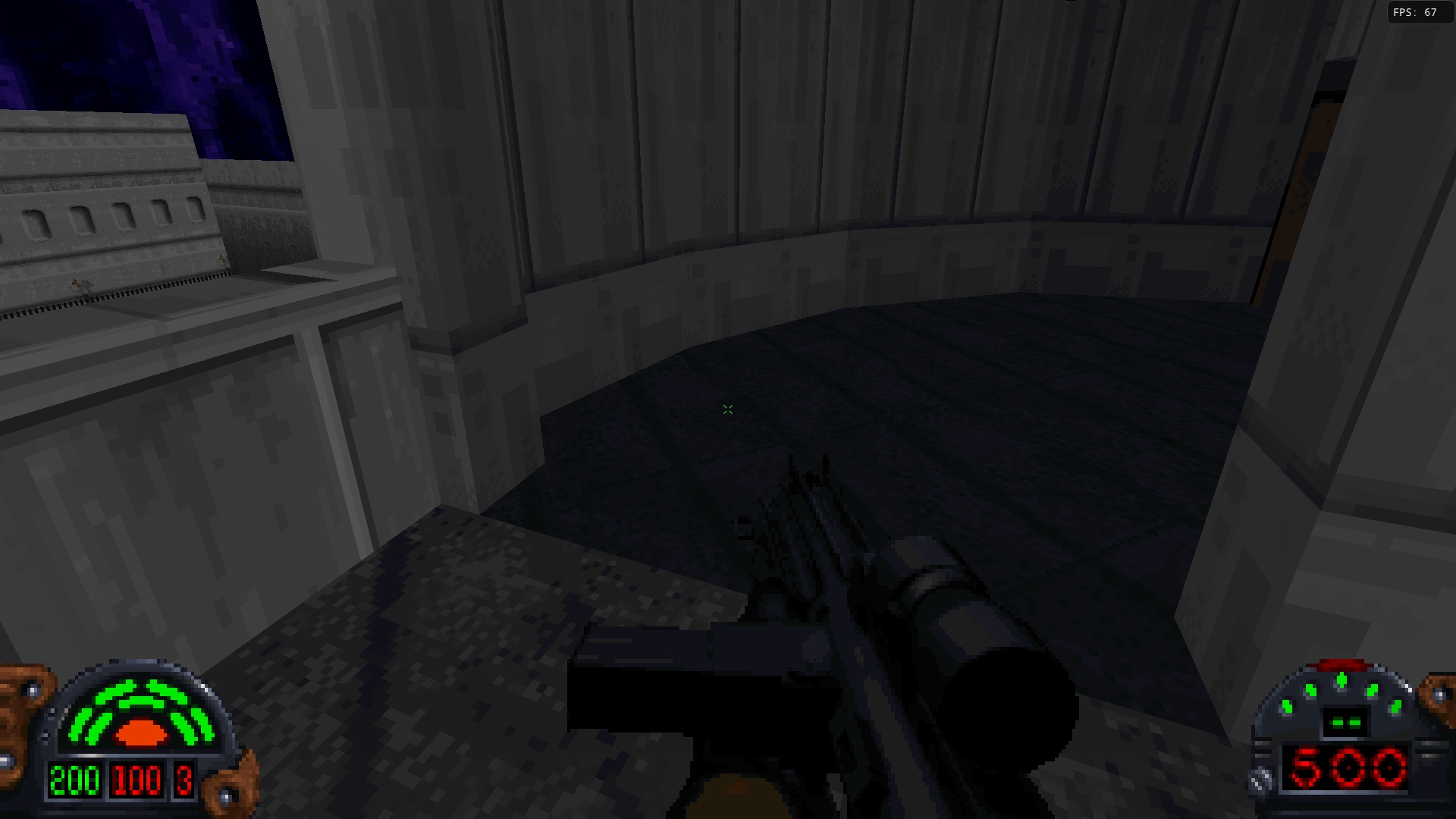

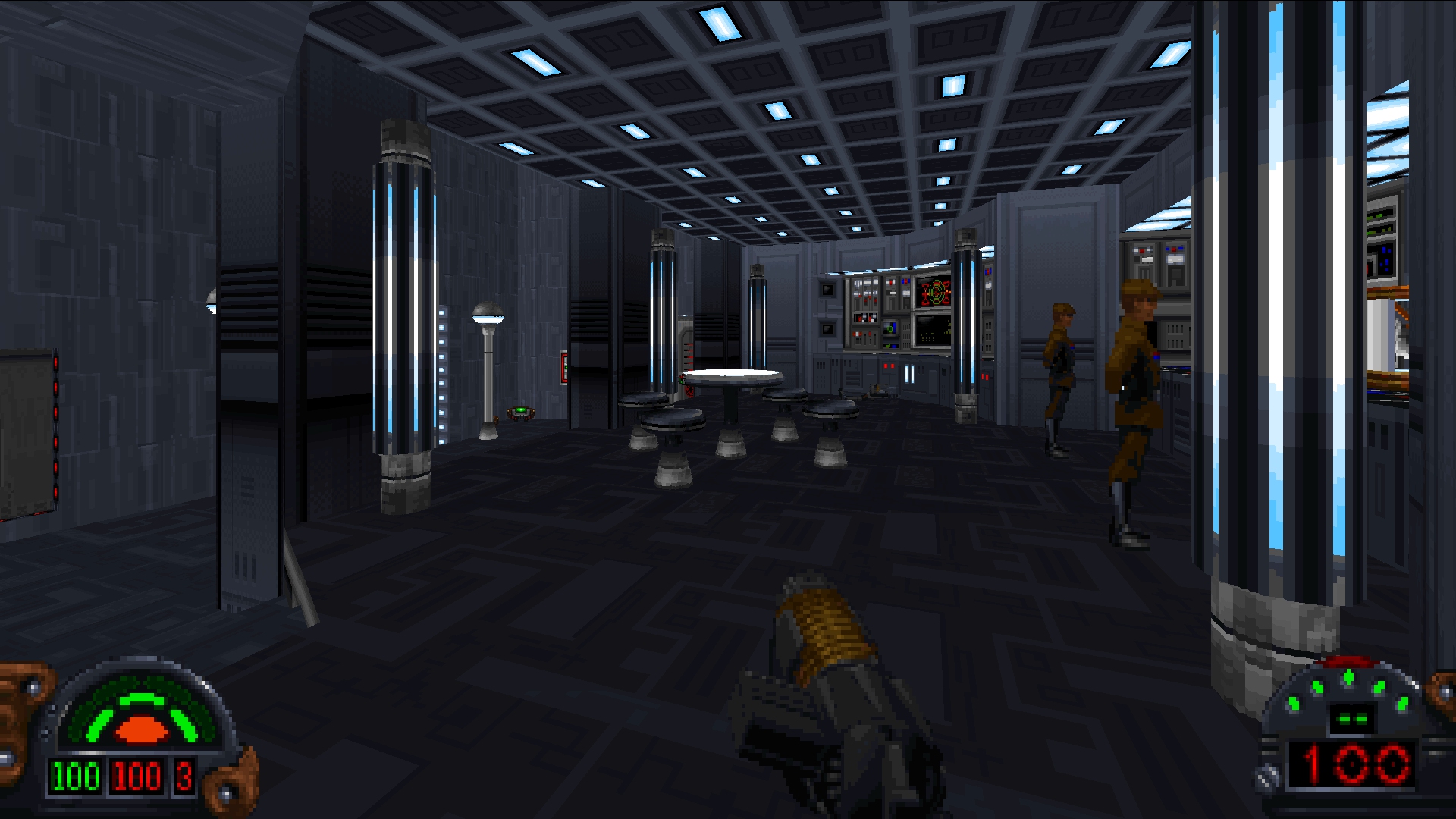

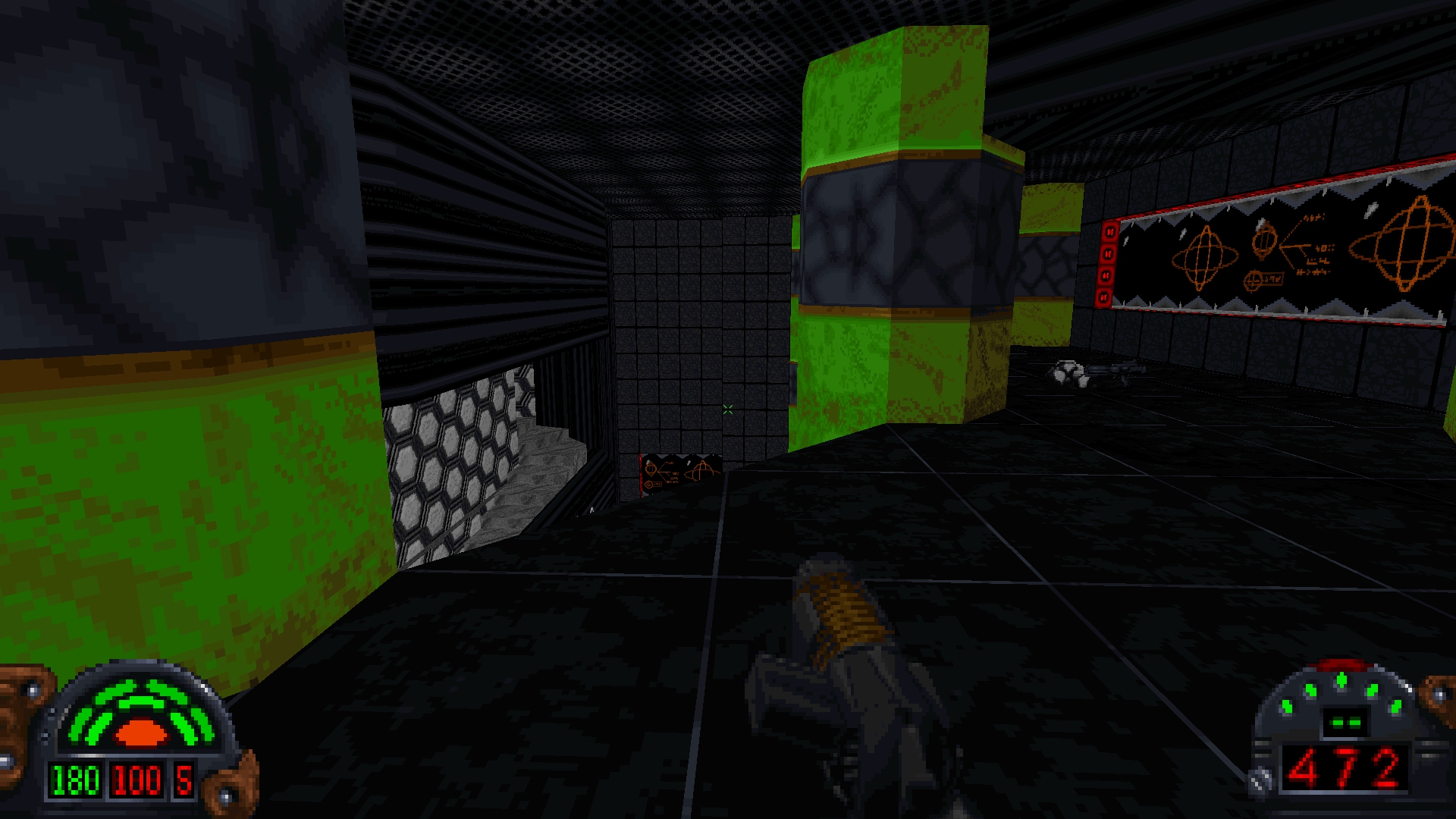

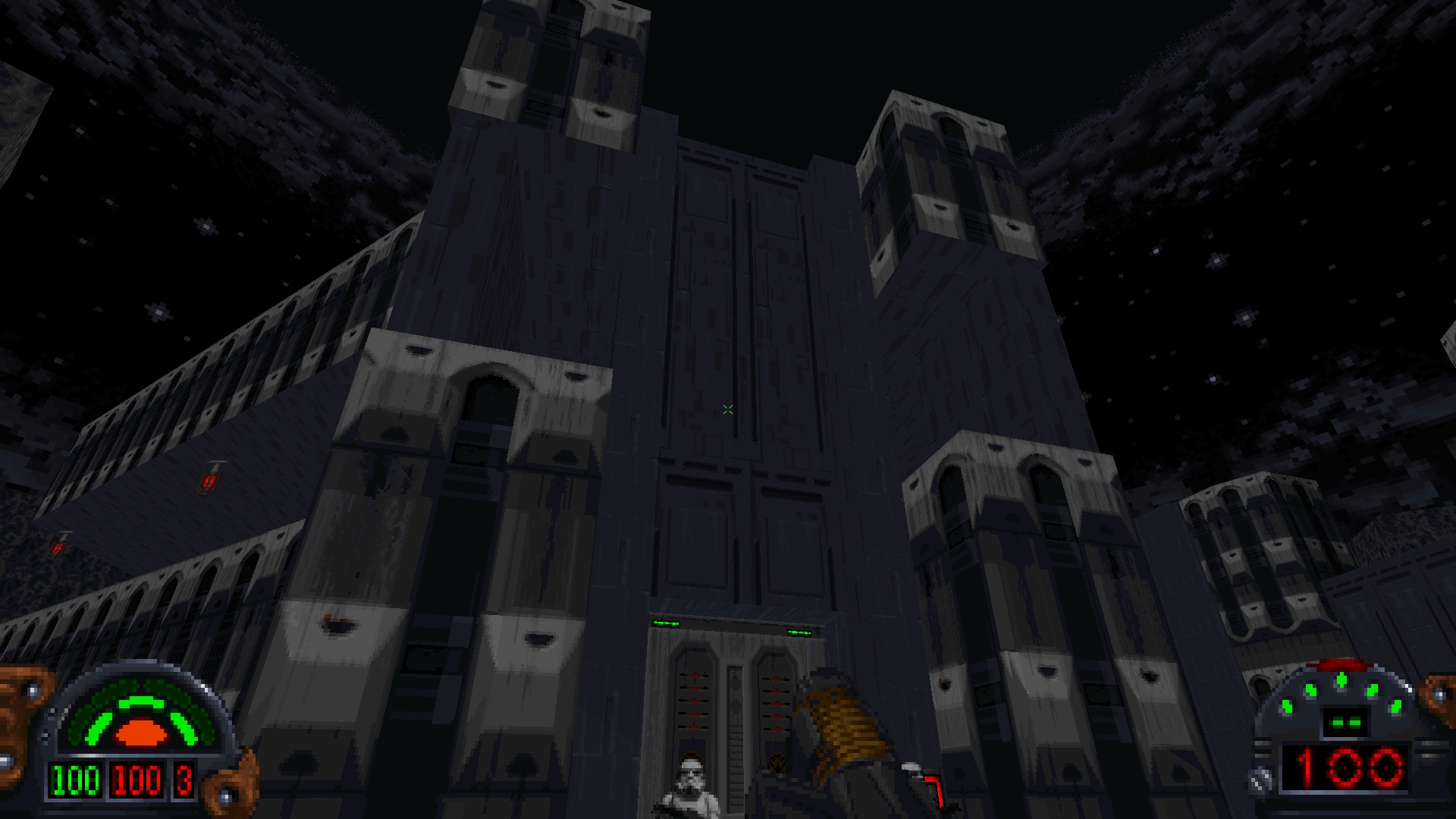

This release saw the addition of True Color Rendering, which included optional texture filtering (including Sharp Bilinear filtering), mipmapping, and an approximated true-color to colormap mapping. It also, finally, added the option to adjust the field of view.
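Sharp bilinear filtering keeps texels crisp when magnified while still smoothing the edges between them. Below is a minimal 1D sketch in Python of one common formulation, not TFE's shader code: the fractional texel position is steepened around 0.5 before the usual bilinear blend. The `sharpness` parameter is an assumption for illustration; in practice it would typically be derived from how many screen pixels each texel covers.

```python
import math

def sharp_bilinear_sample(texels, u, sharpness):
    """Sample a 1D row of texel values at normalized coordinate u in [0, 1].

    sharpness == 1.0 gives ordinary bilinear filtering; larger values narrow
    the blend band around texel edges, approaching nearest-neighbor sampling.
    """
    size = len(texels)
    # Texel-space position, offset so whole numbers land on texel centers.
    t = u * size - 0.5
    base = math.floor(t)
    frac = t - base
    # Steepen the blend around the midpoint between the two texel centers.
    frac = min(max((frac - 0.5) * sharpness + 0.5, 0.0), 1.0)
    # Clamp-to-edge addressing for the two neighboring texels.
    i0 = min(max(base, 0), size - 1)
    i1 = min(max(base + 1, 0), size - 1)
    return texels[i0] * (1.0 - frac) + texels[i1] * frac
```

With `sharpness=1.0` this reduces to plain bilinear filtering; as it grows, most of each texel samples as a flat color and only a thin band at each edge is blended.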

Kevin was also hard at work, adding support for custom caption files and fonts, UTF-8 support, and generally improving the Accessibility UI and Captions.

Version 1.09.52 - September 25 / Version 1.09.53 - September 27

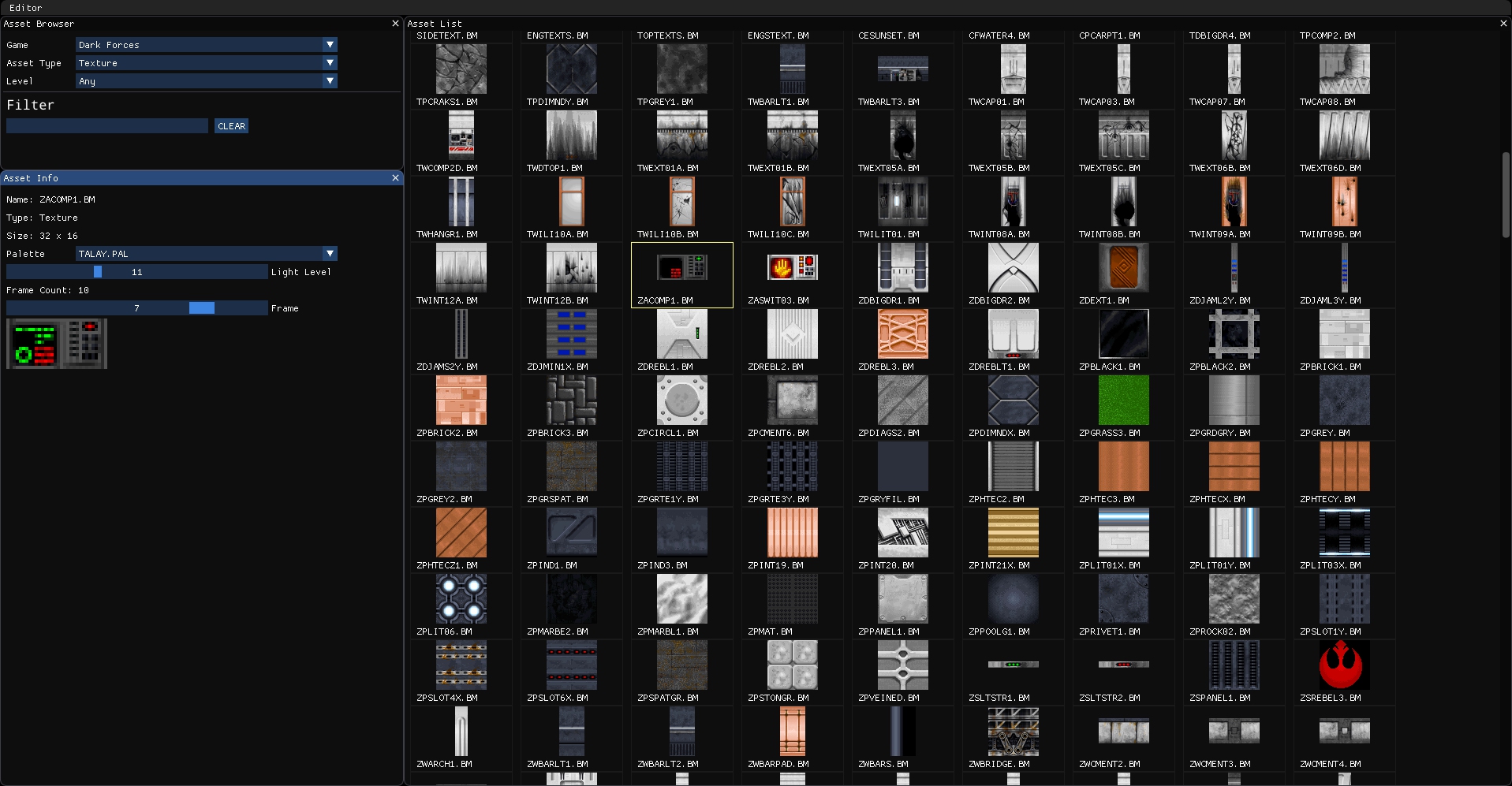

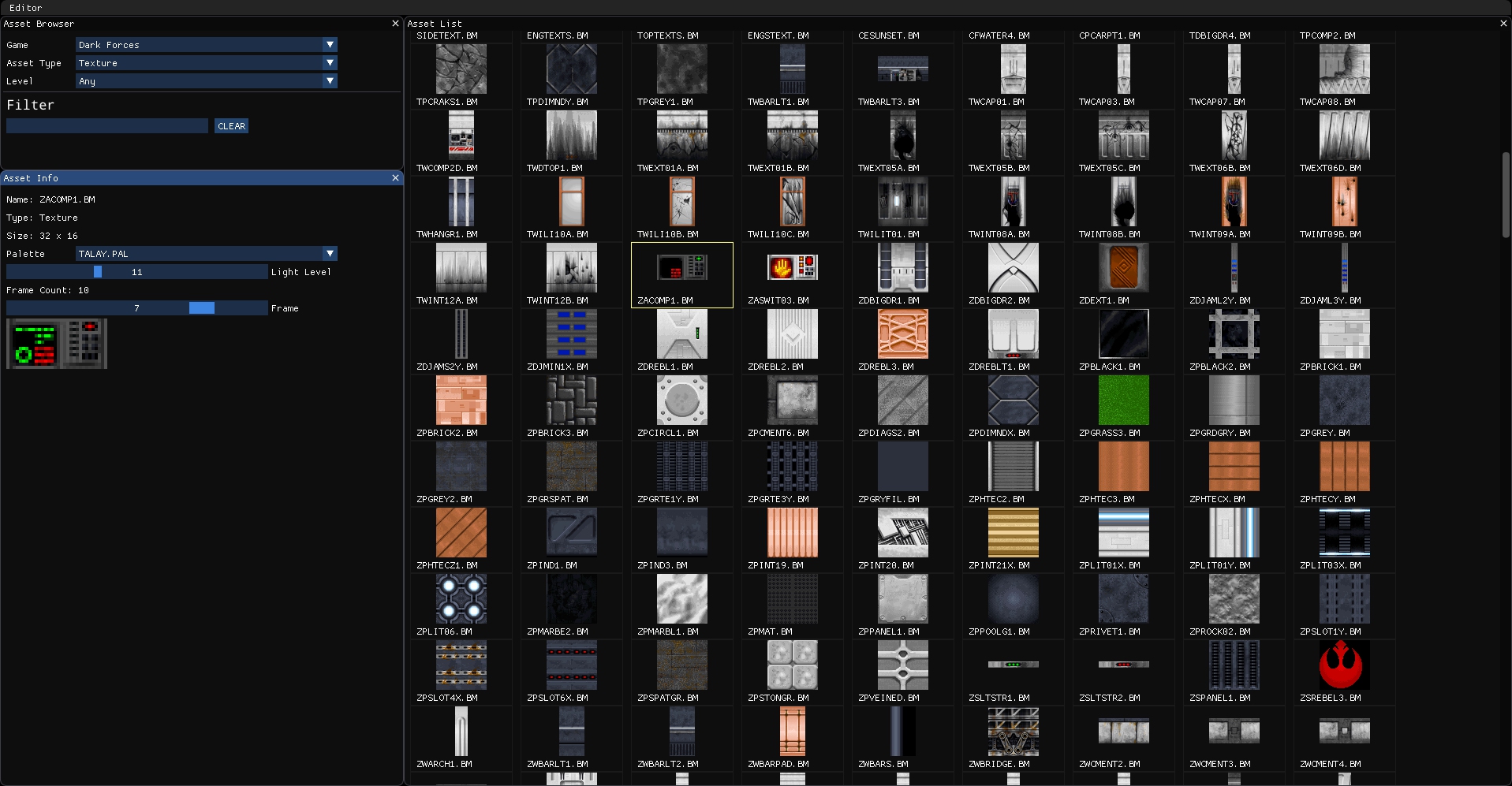

At this point I was mainly focused on bringing back the built-in tools that were removed early on due to large changes in the project. This release saw the return of the Editor option in the main menu, the initial pass on the Asset Browser, and better error handling. Kevin contributed to fixes in the Captions system, and Manuel continued to contribute fixes and to work on the Linux implementation.

Manuel Lauss contributed more improvements to the audio system, replacing RtAudio with SDL Audio - fixing a number of issues and reducing the number of dependencies. Kevin contributed more accessibility fixes, and I worked on improving the true color mode - making textures appear closer in coloration to 8-bit mode. This release also saw more progress on the Asset Browser, such as factoring out the Asset system.

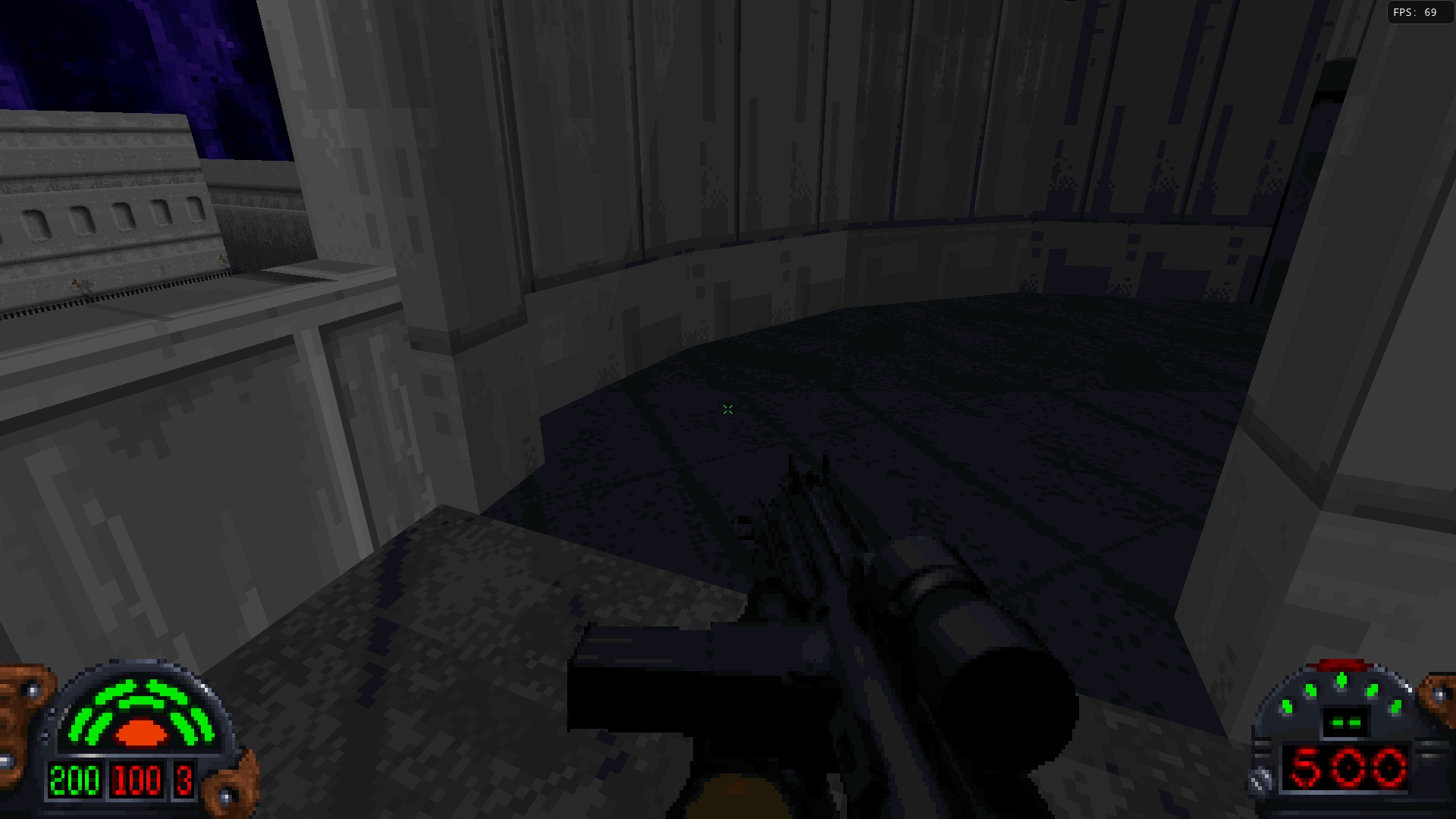

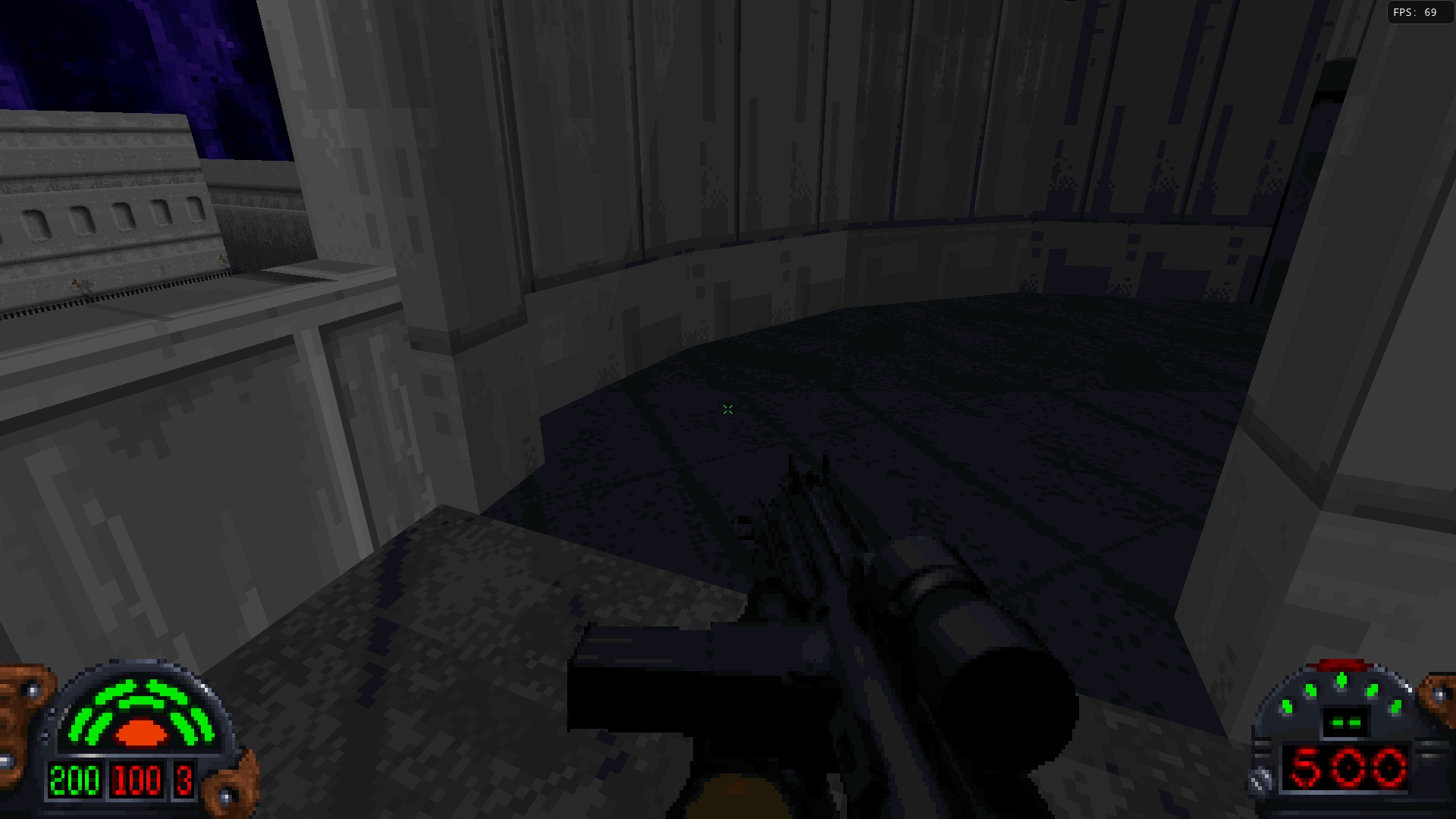

Color Improvements to True Color Rendering

Asset Browser

Version 1.09.54 - Final Release of 2023

In the final release, more work went into the editor - setting up for the Level Editor work that would consume the rest of the year. Thanks to Manuel, RtMidi was made optional on Linux, which is now possible because of the built-in OPL 3 and SF2 support. After discussions, we also replaced DevIL with SDL Image to make future Linux packages easier.

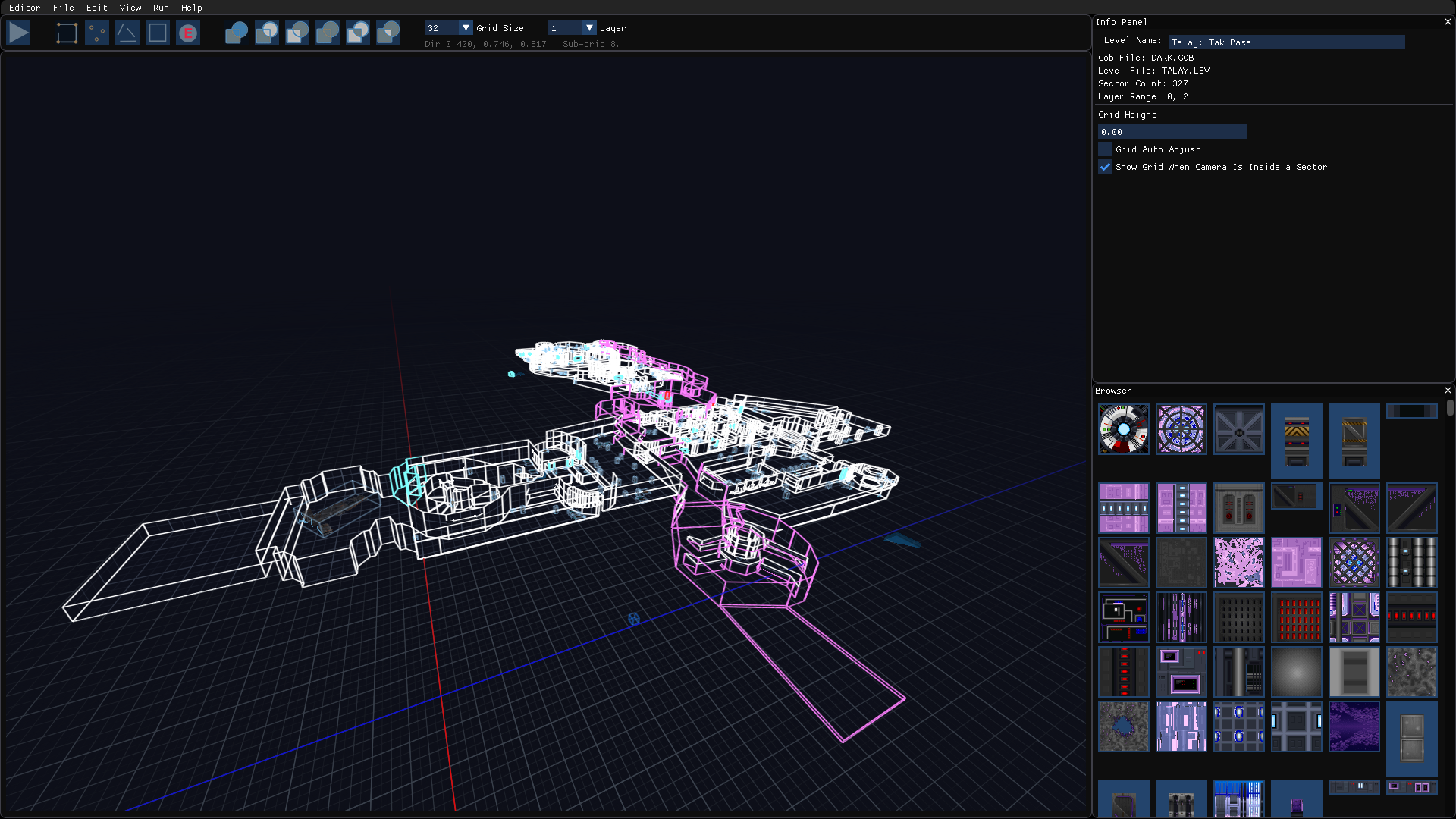

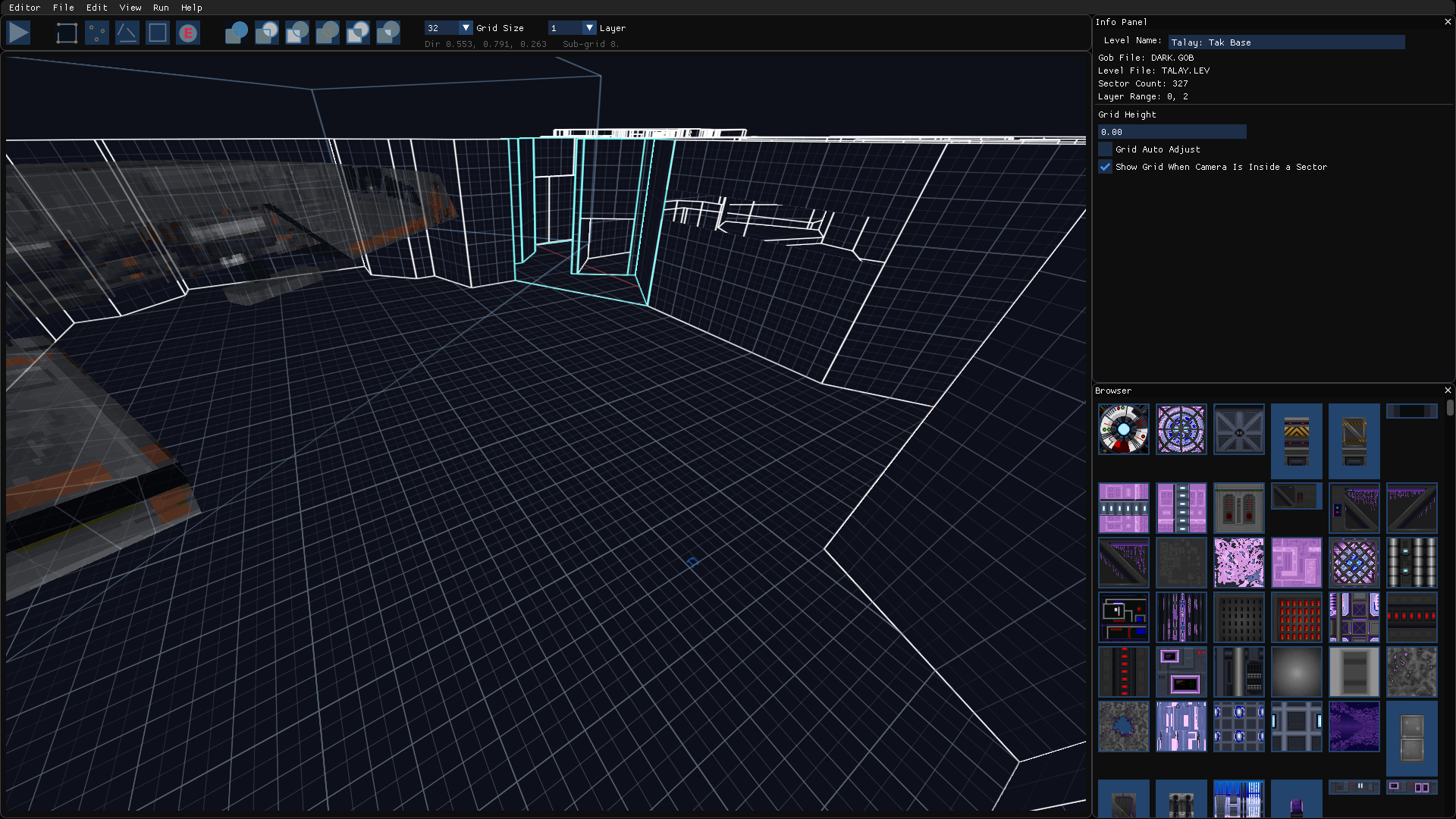

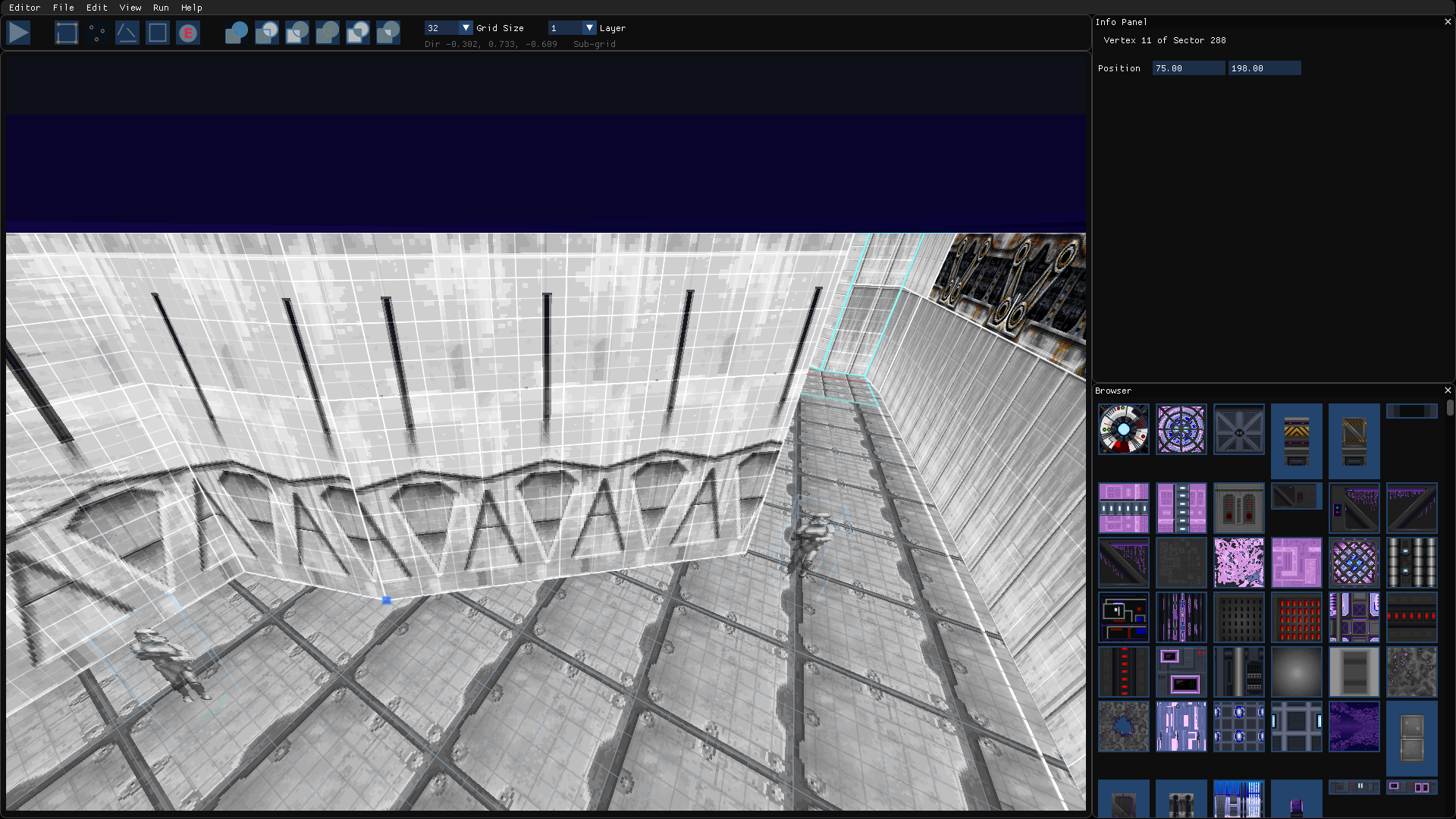

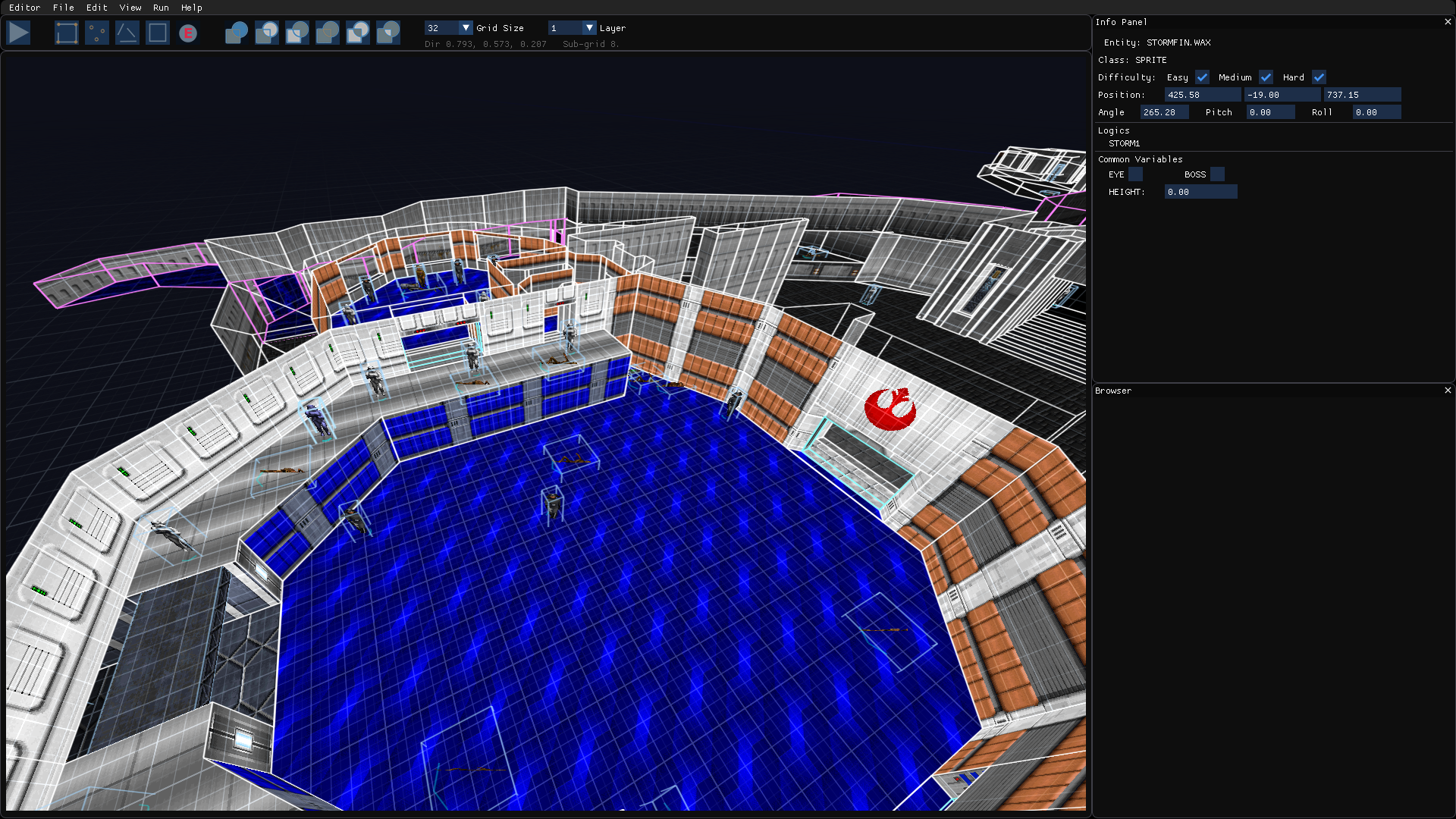

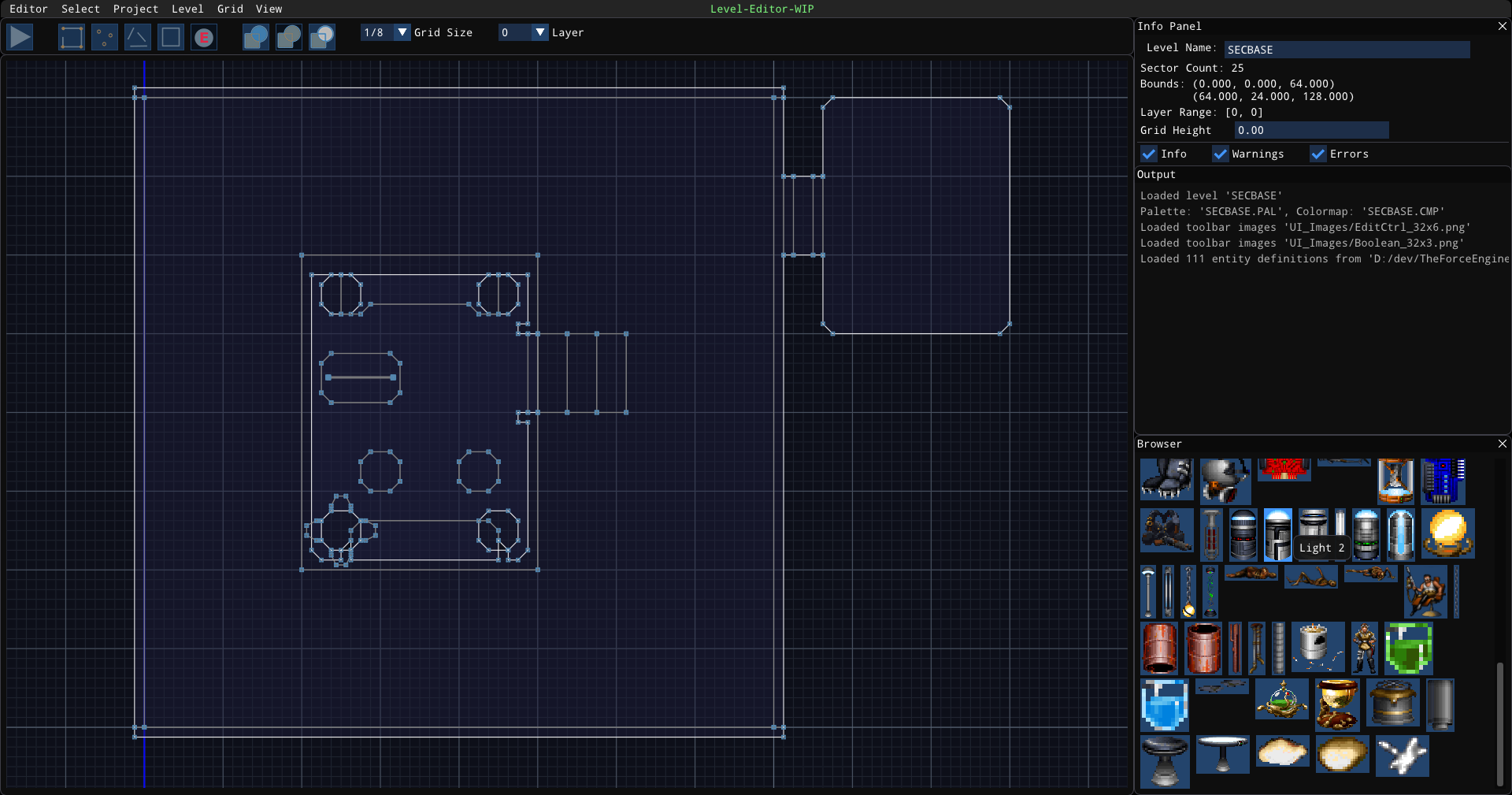

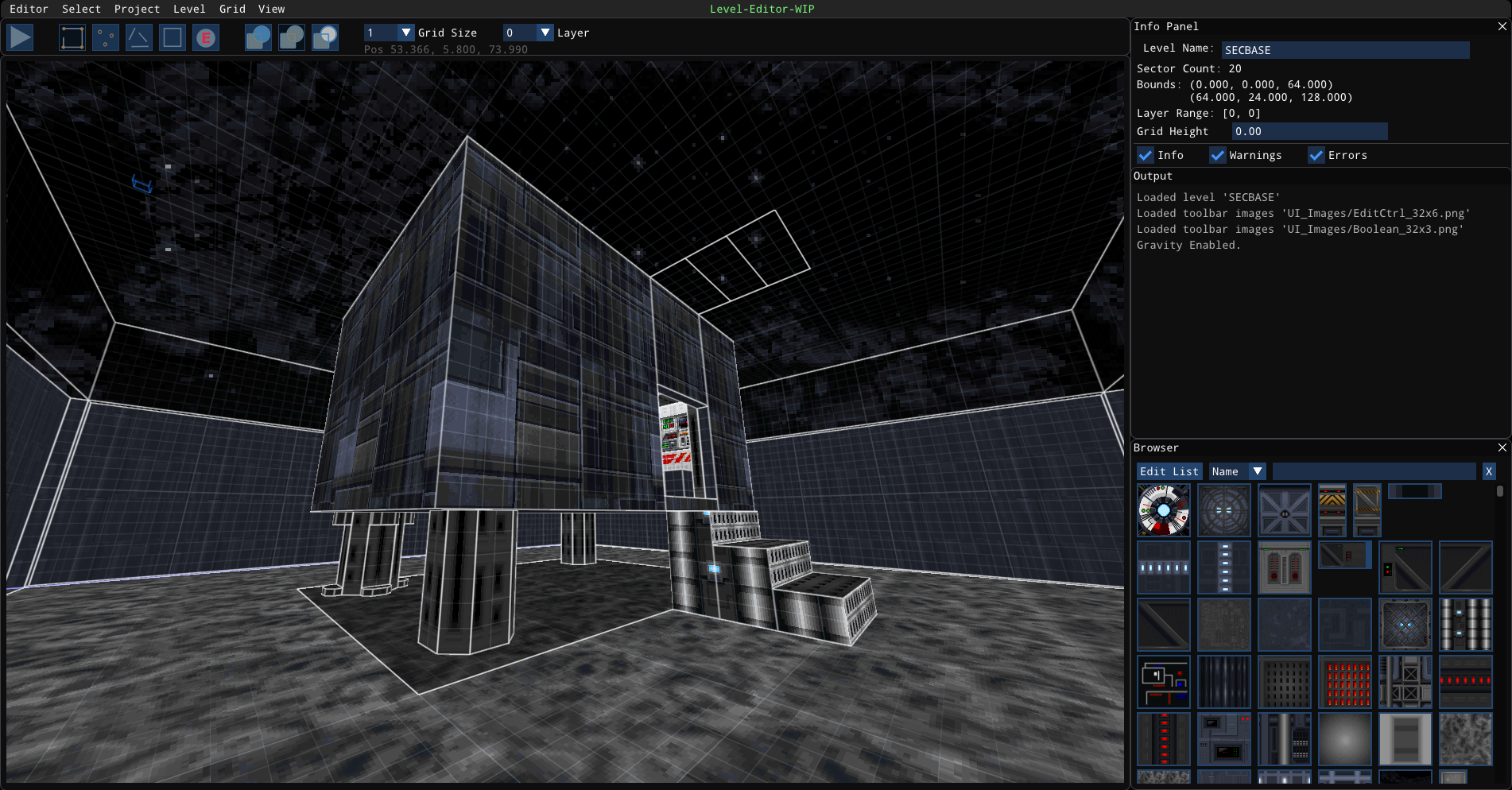

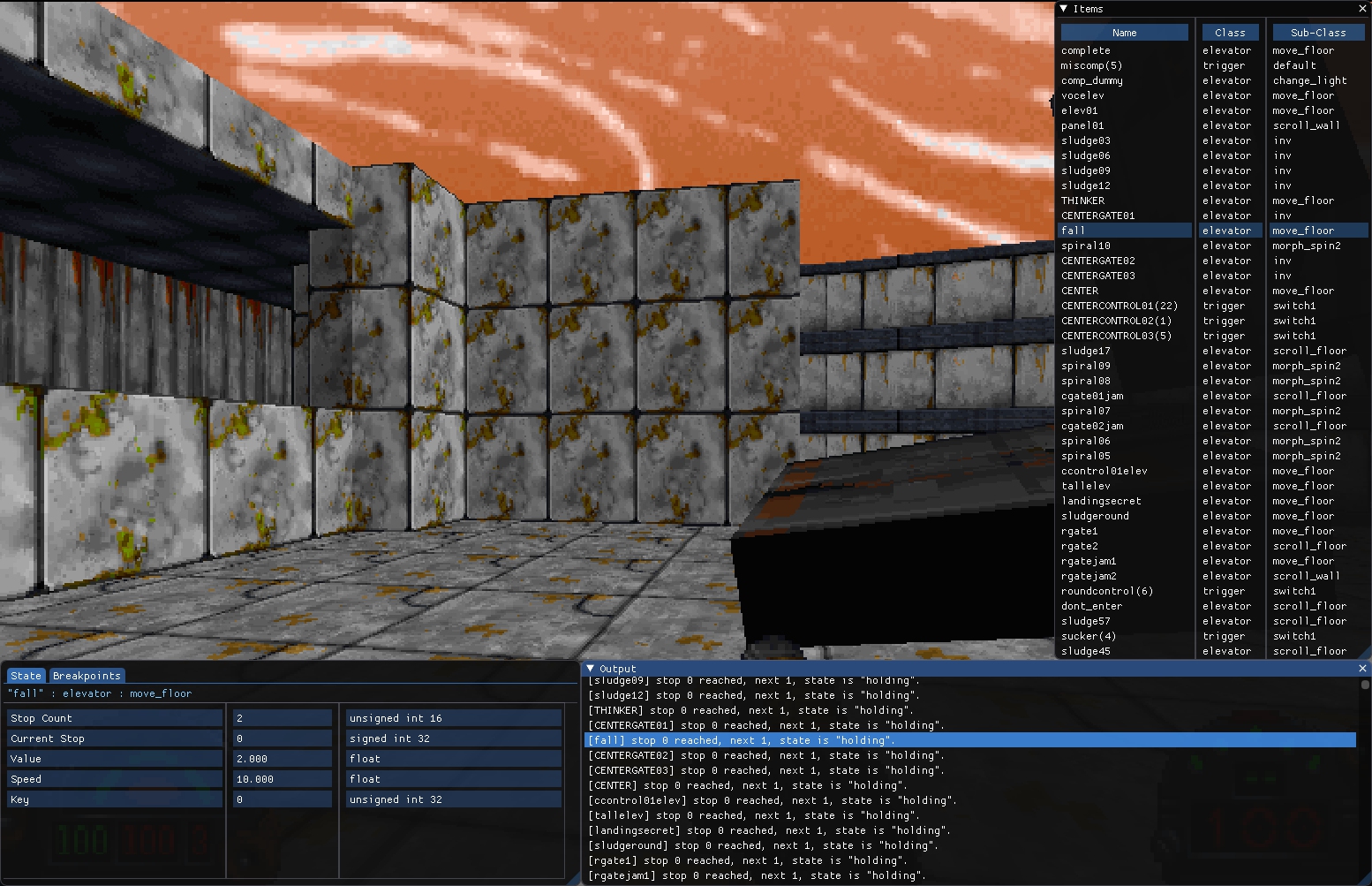

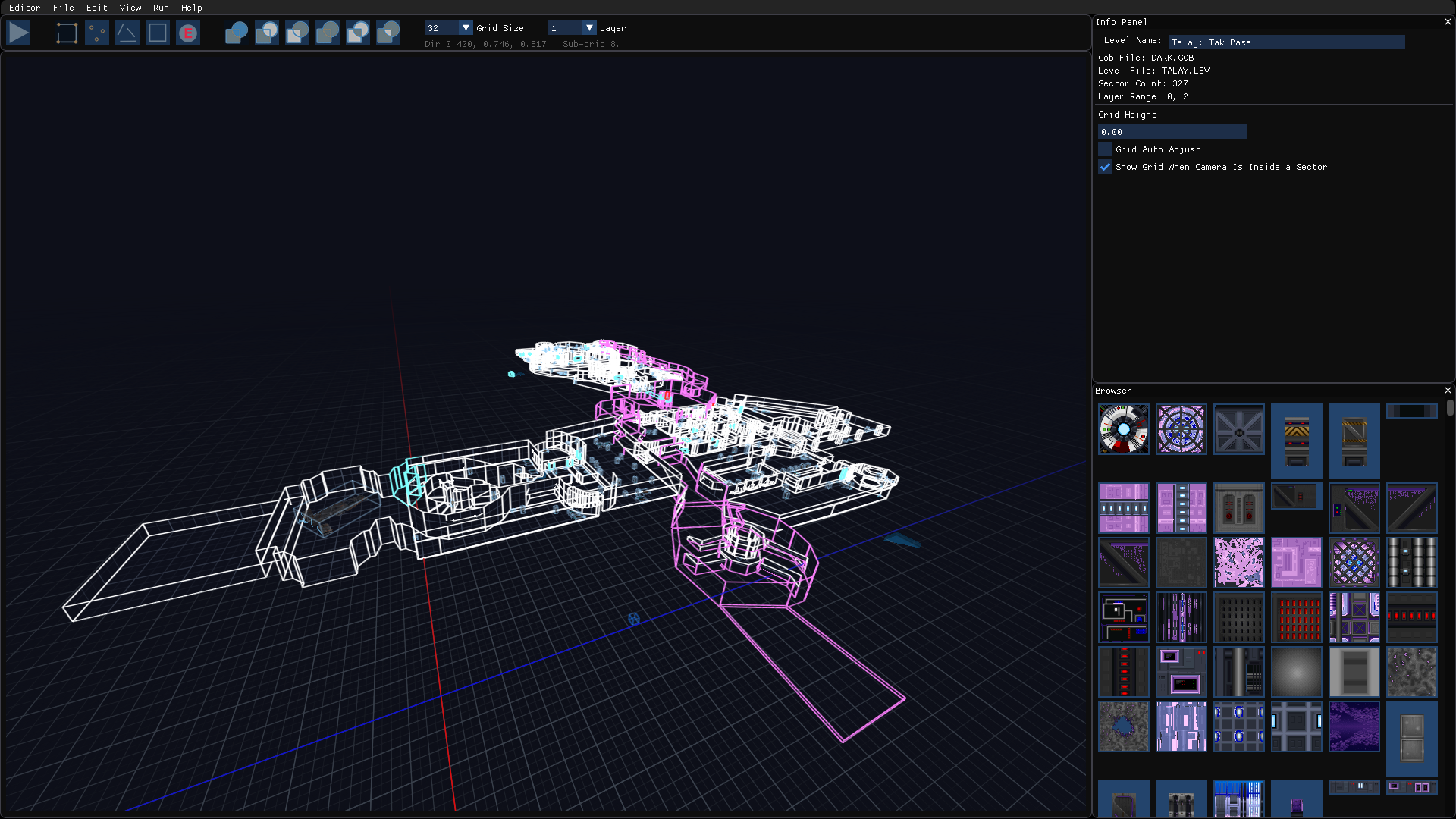

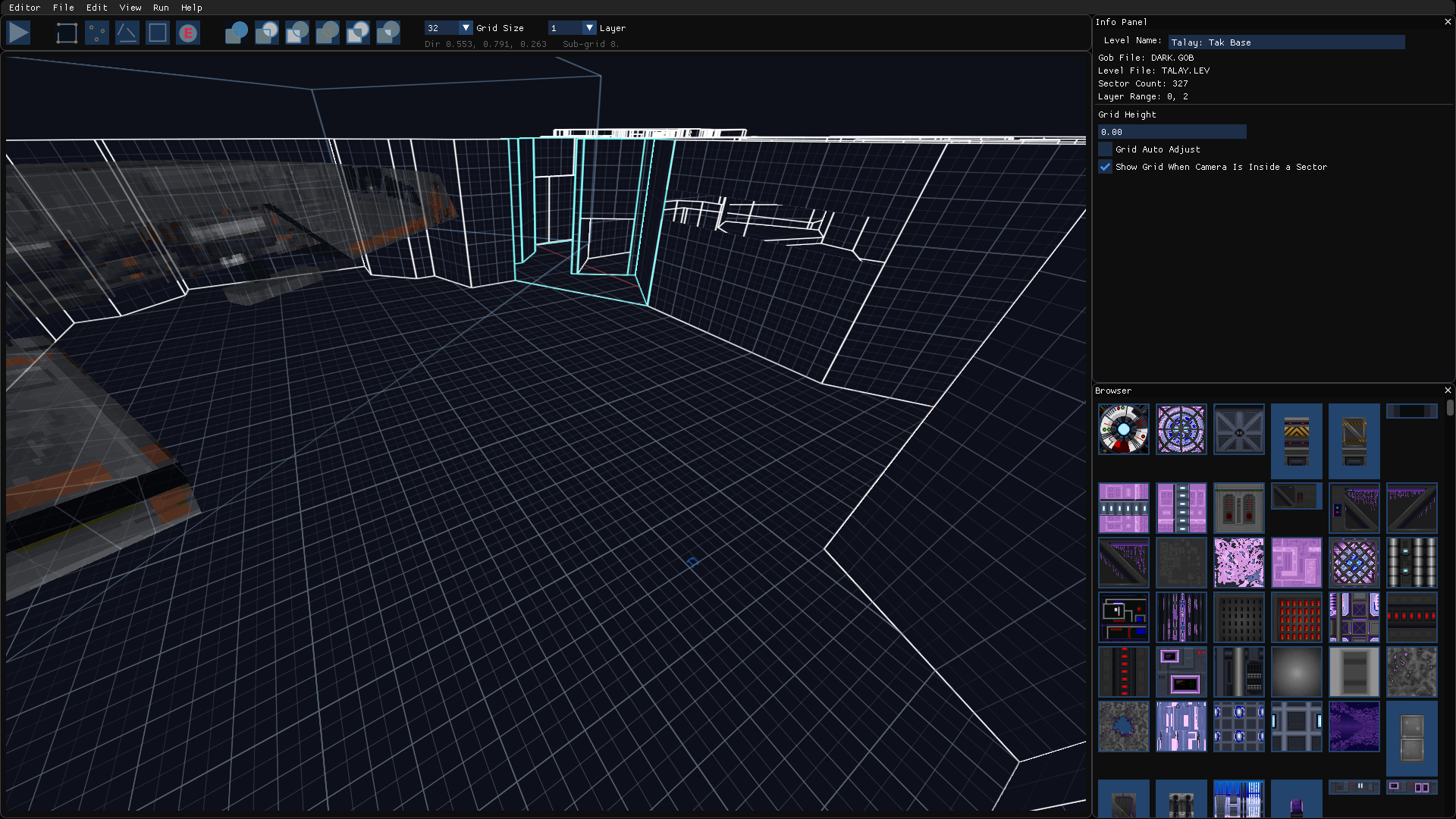

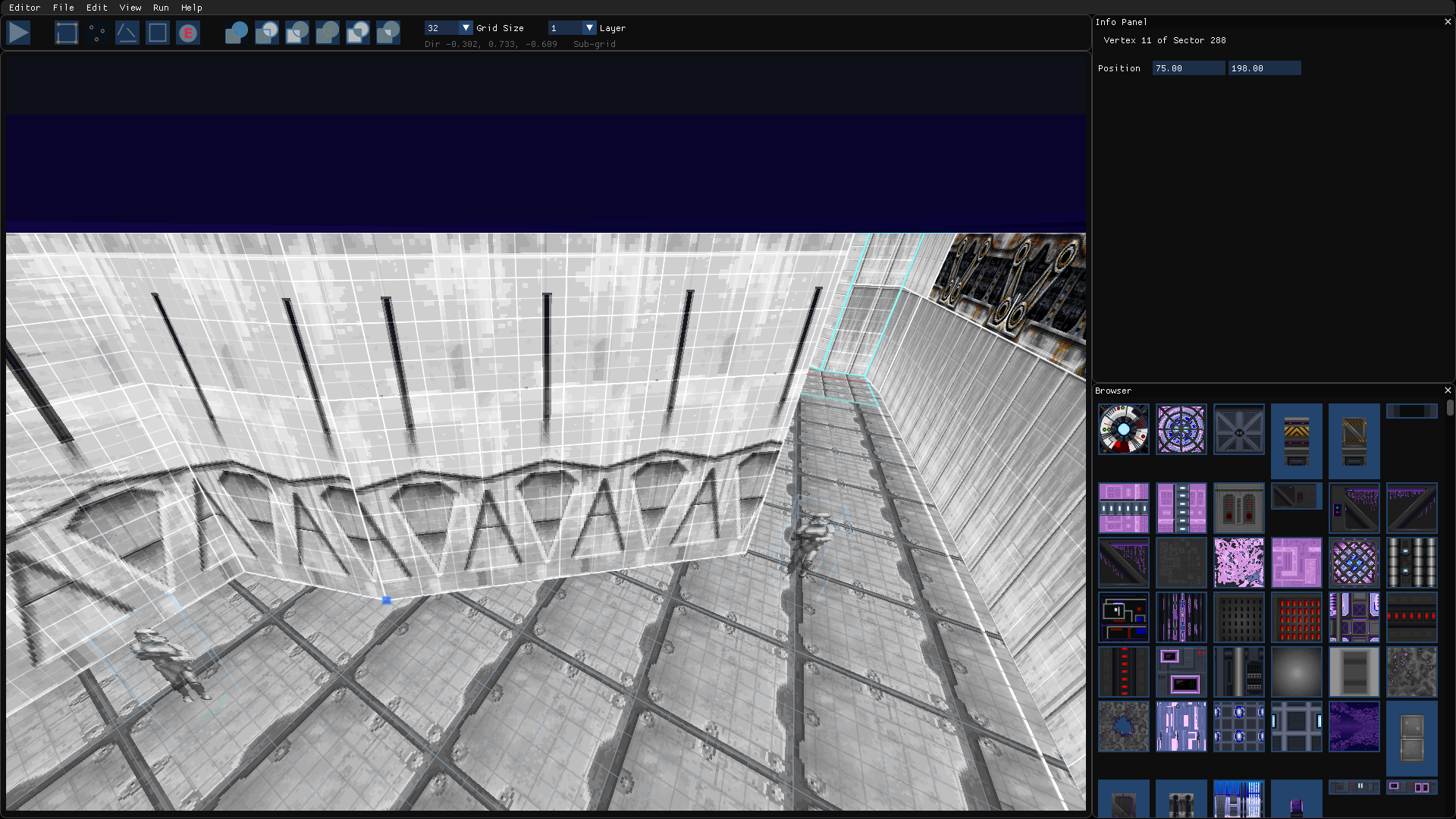

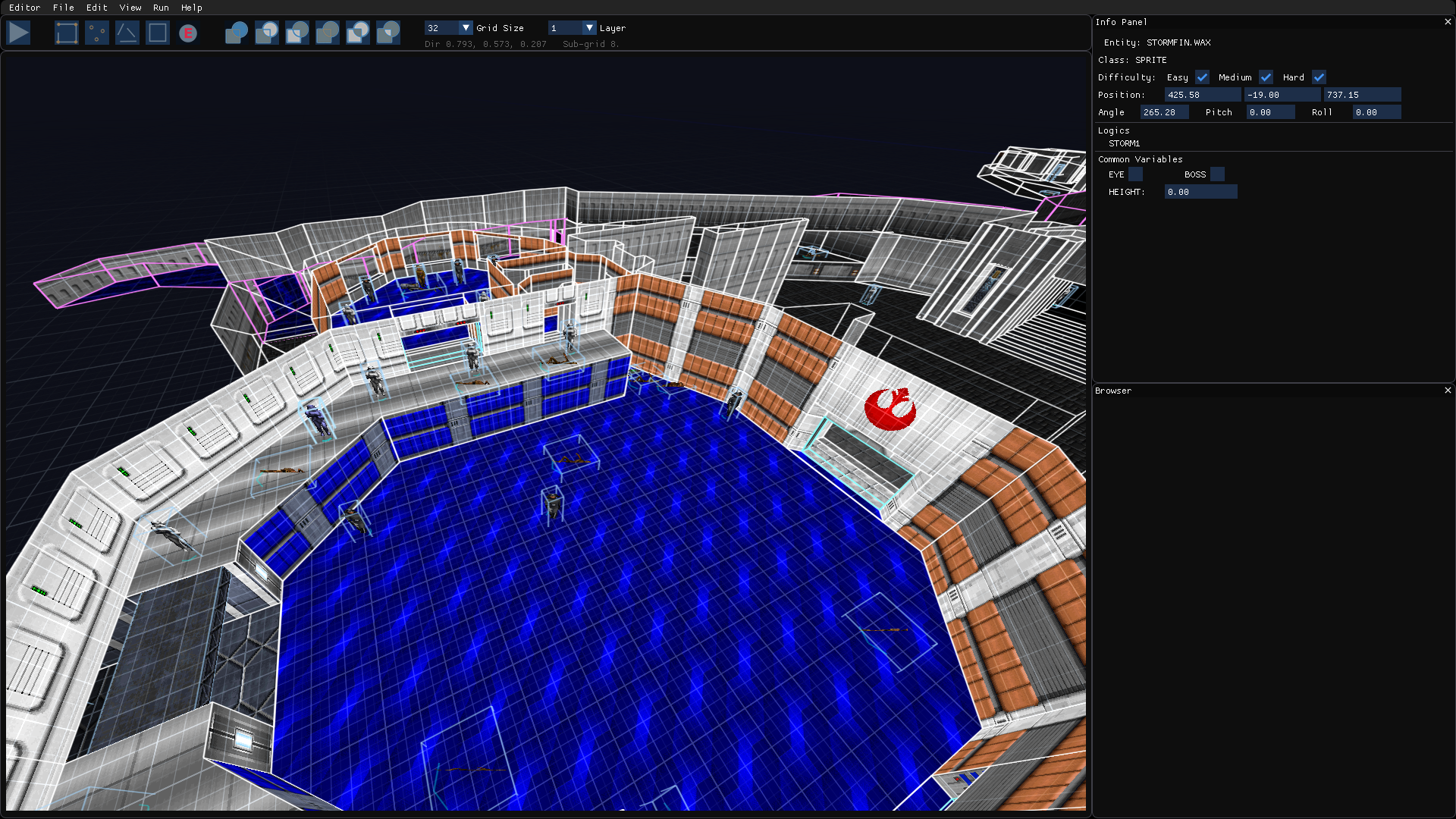

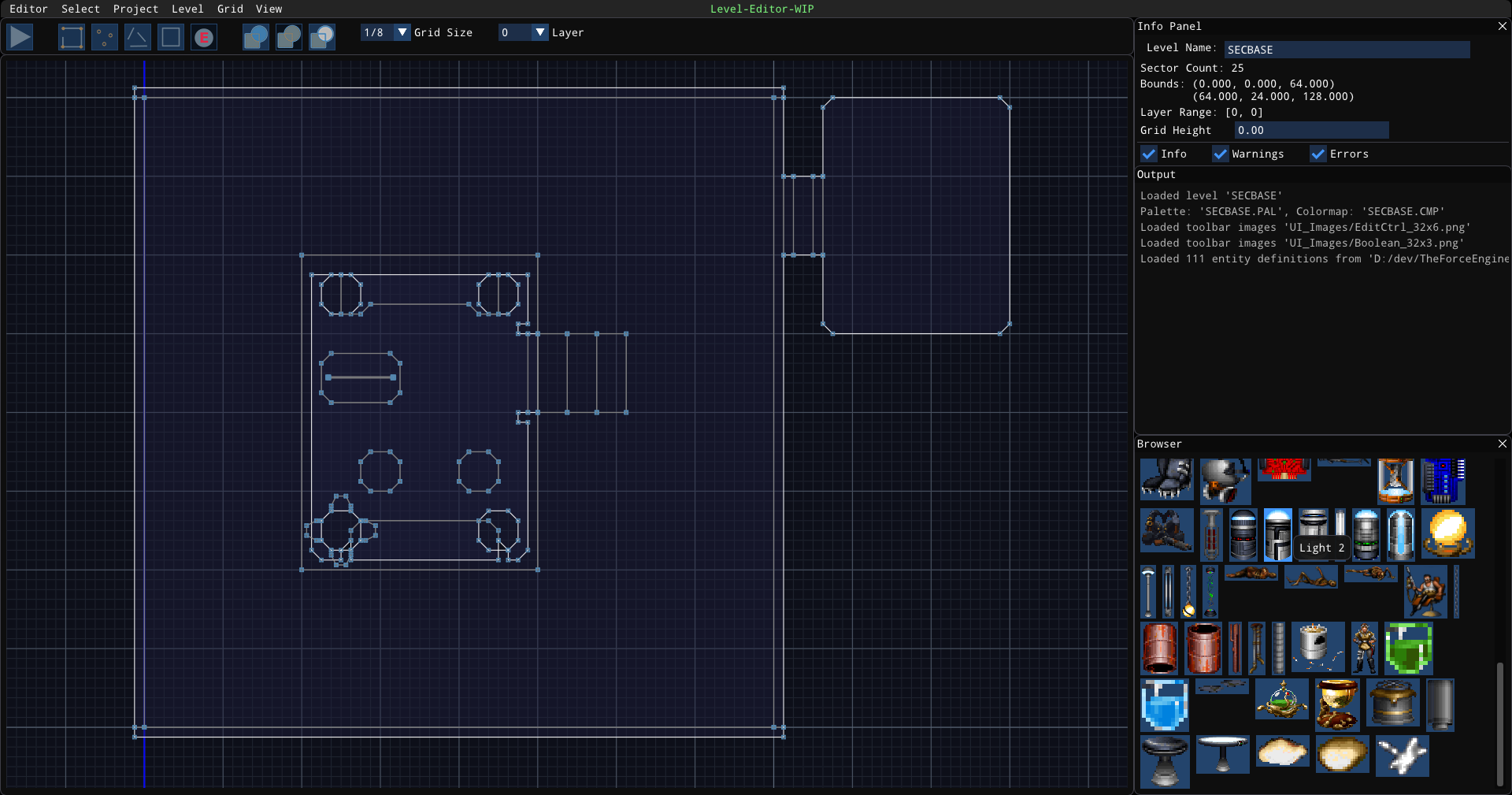

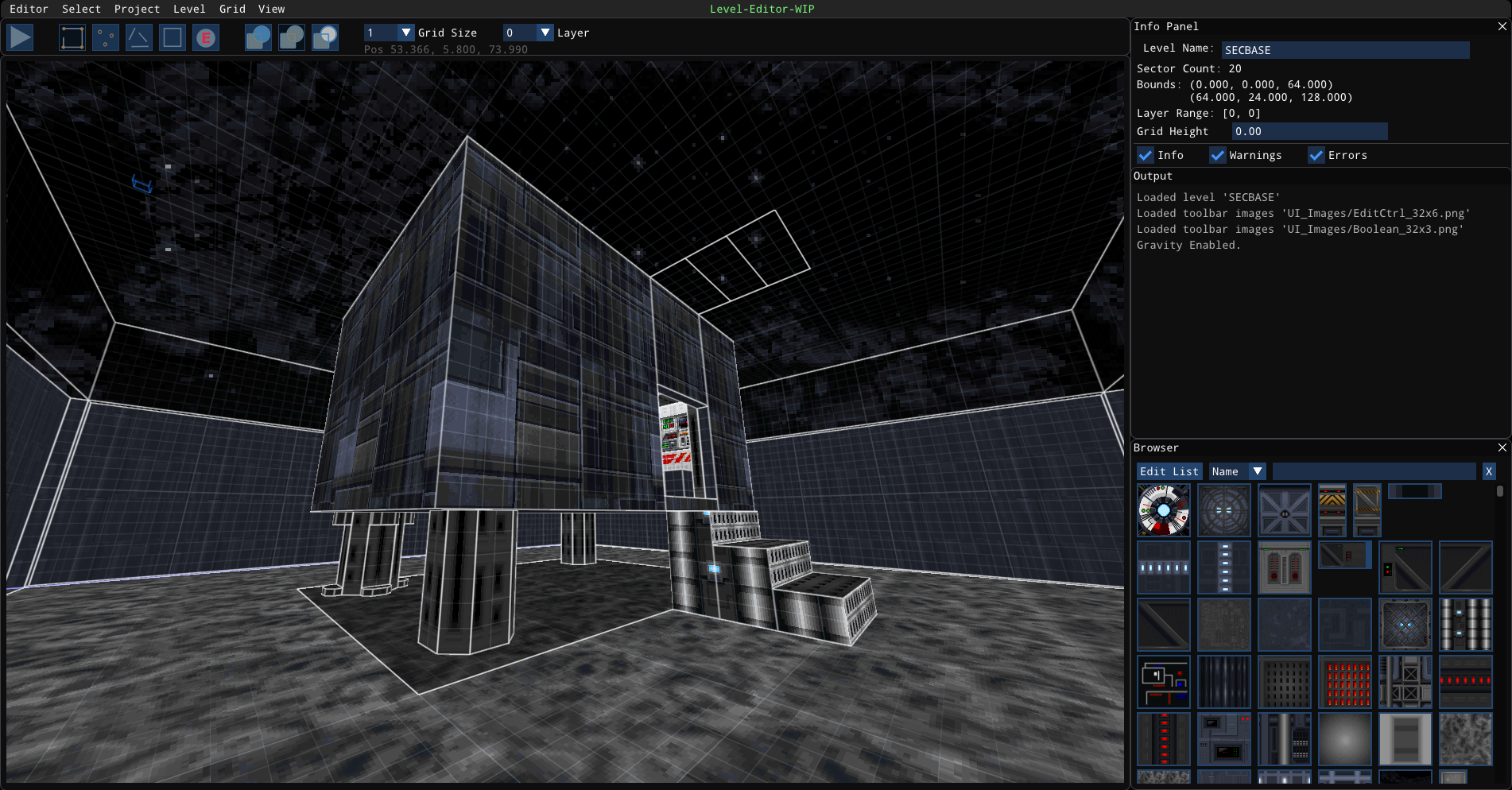

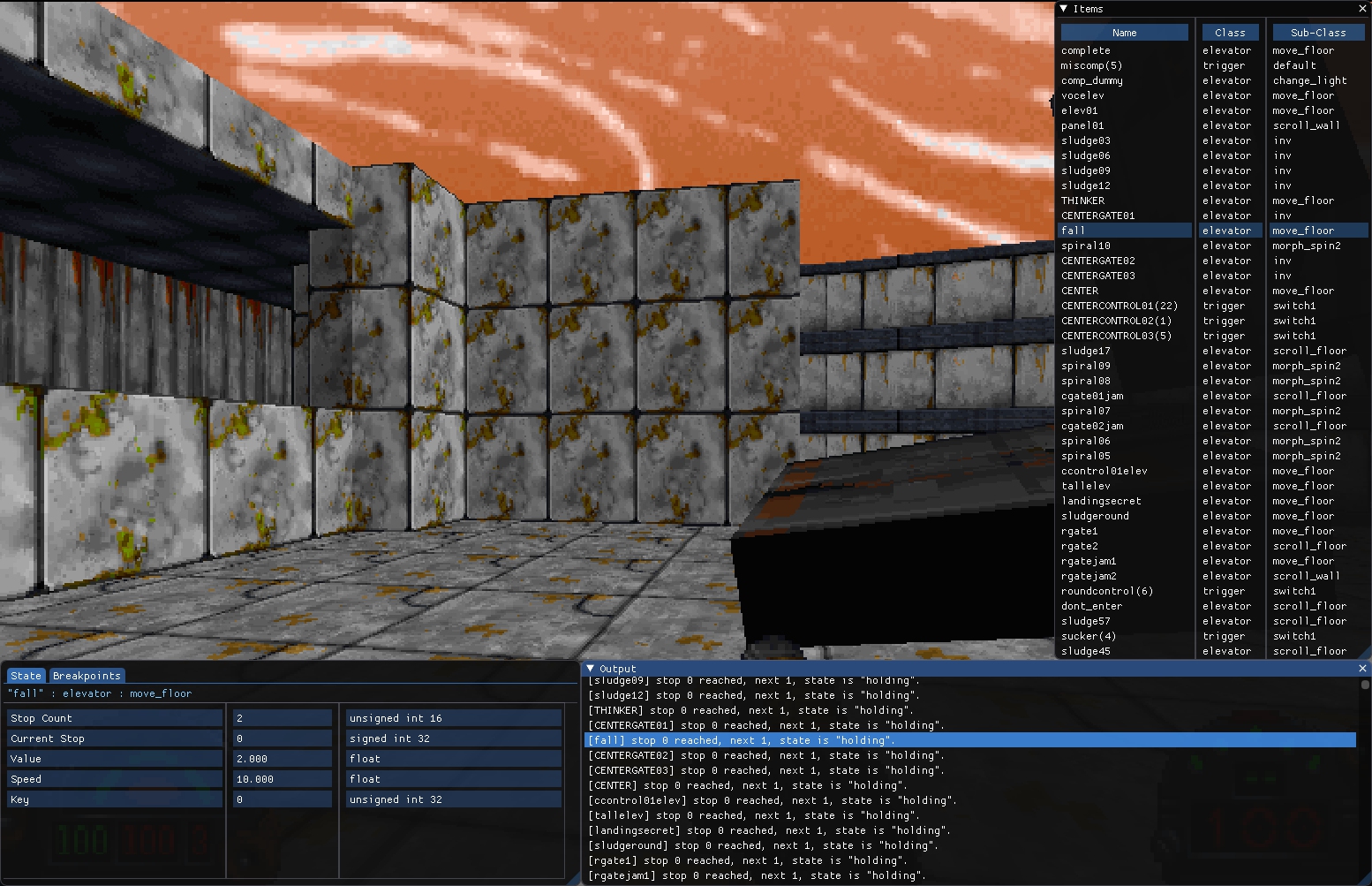

Level Editor

The rest of the year was spent on the level editor and supporting systems. While it is not yet usable, a number of features have been completed and, overall, it is shaping up well for a January release.

Level Editor

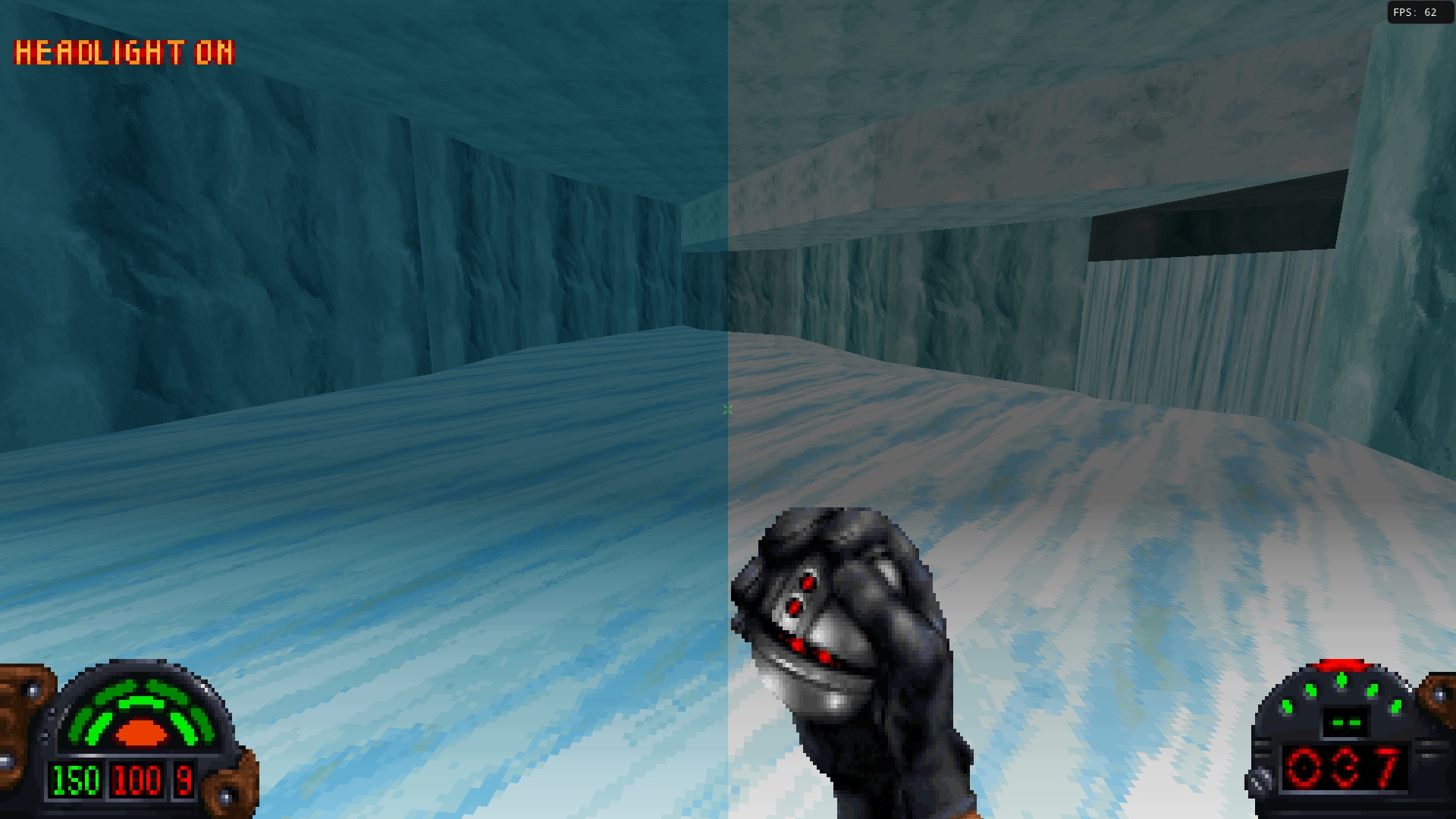

Outlaws

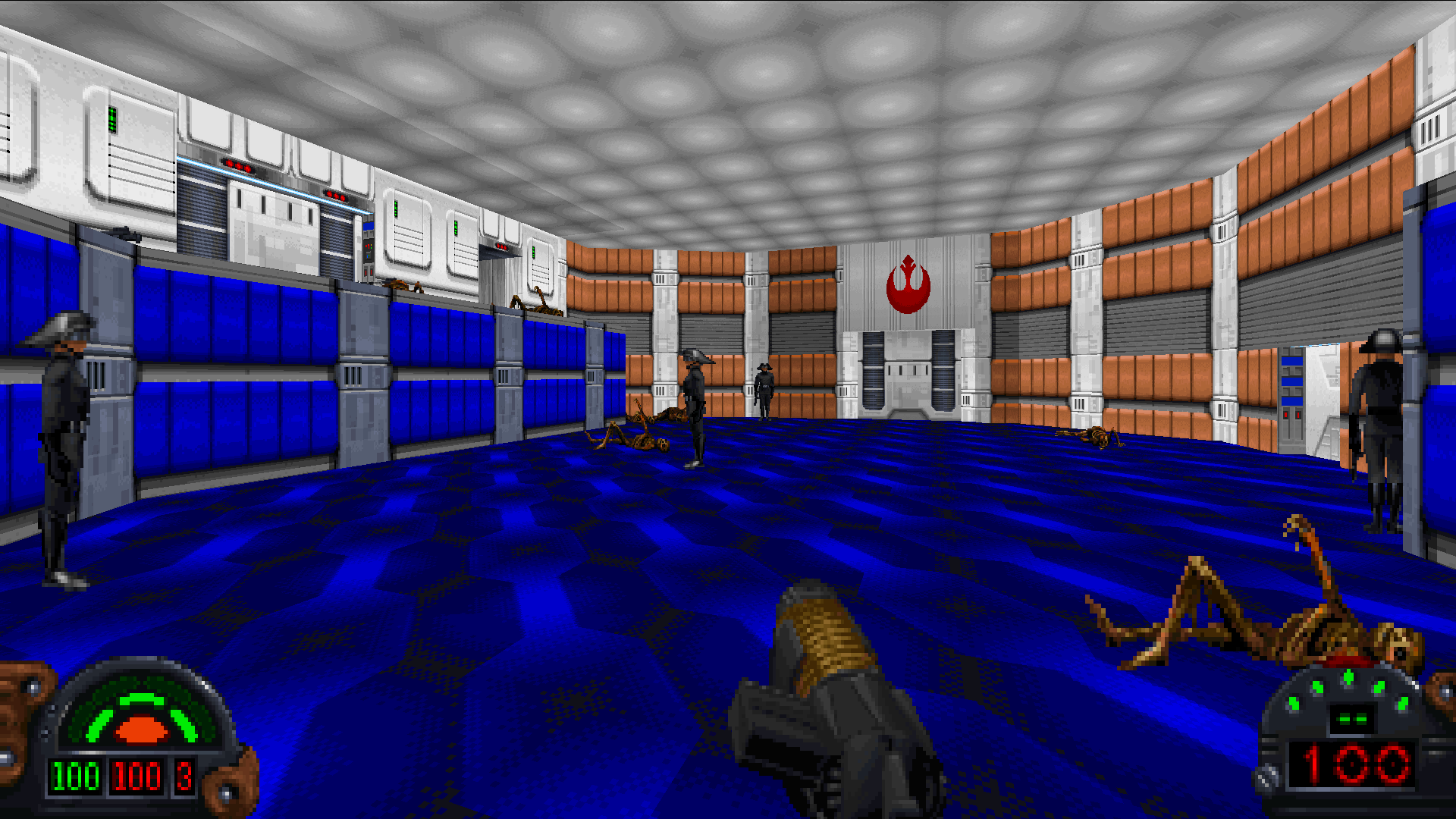

Behind the scenes, initial work on Outlaws was started, leading to a good understanding of the renderer changes from Dark Forces as well as various other systems. In 2024, this work will be leveraged to support Outlaws asset types and levels in the editor, to port Outlaws enhancements (slopes, dual adjoins, etc.) over to Dark Forces so those features can be used there, and to implement Outlaws support throughout the year.

Other

Another feature that was worked on, but not quite ready for prime time, is dynamic lighting. That will be ported from the branch and finished sometime in 2024.

2024 Plans

For 2024, I have split my plans into a few projects.

Level Editor - Early 2024

Release a usable level editor that can make complete levels with goals, INF support, editable entities, and everything else needed.

Asset Editor - Early 2024

Along with the level editor, I want to finish the Asset Browser. In addition to the formats currently supported, I want to add the formats needed for cutscenes and UI, and finally new asset types, such as HD assets and voxels.

HD Asset Support - Early 2024

Now that the Asset Browser is available, and editors and import/export functionality are available (or will soon be available), it will be possible to add support for HD assets and easily test them. HD assets include higher resolution / color depth textures, frames, and sprites, but also higher quality sound. My goal is to have this done for the Dark Forces Remaster release, so Nightdive’s new assets are supported.

Voxel Replacement Support - Early 2024

It is finally time to port over the experimental voxel code and make it “production ready.” The Asset Browser will be used to import voxel data, map hacks, and similar data to build voxel mods for Dark Forces and Outlaws.

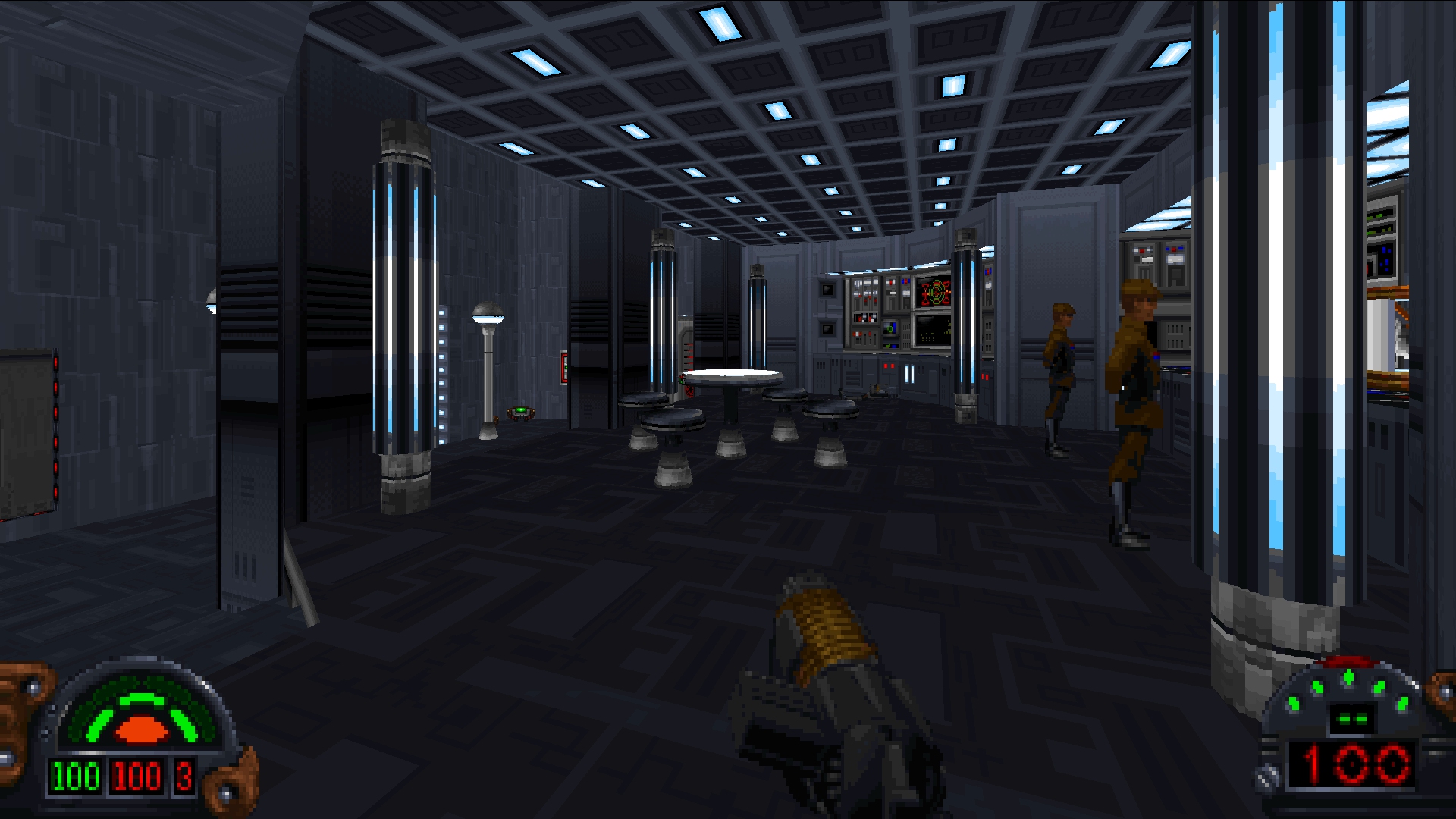

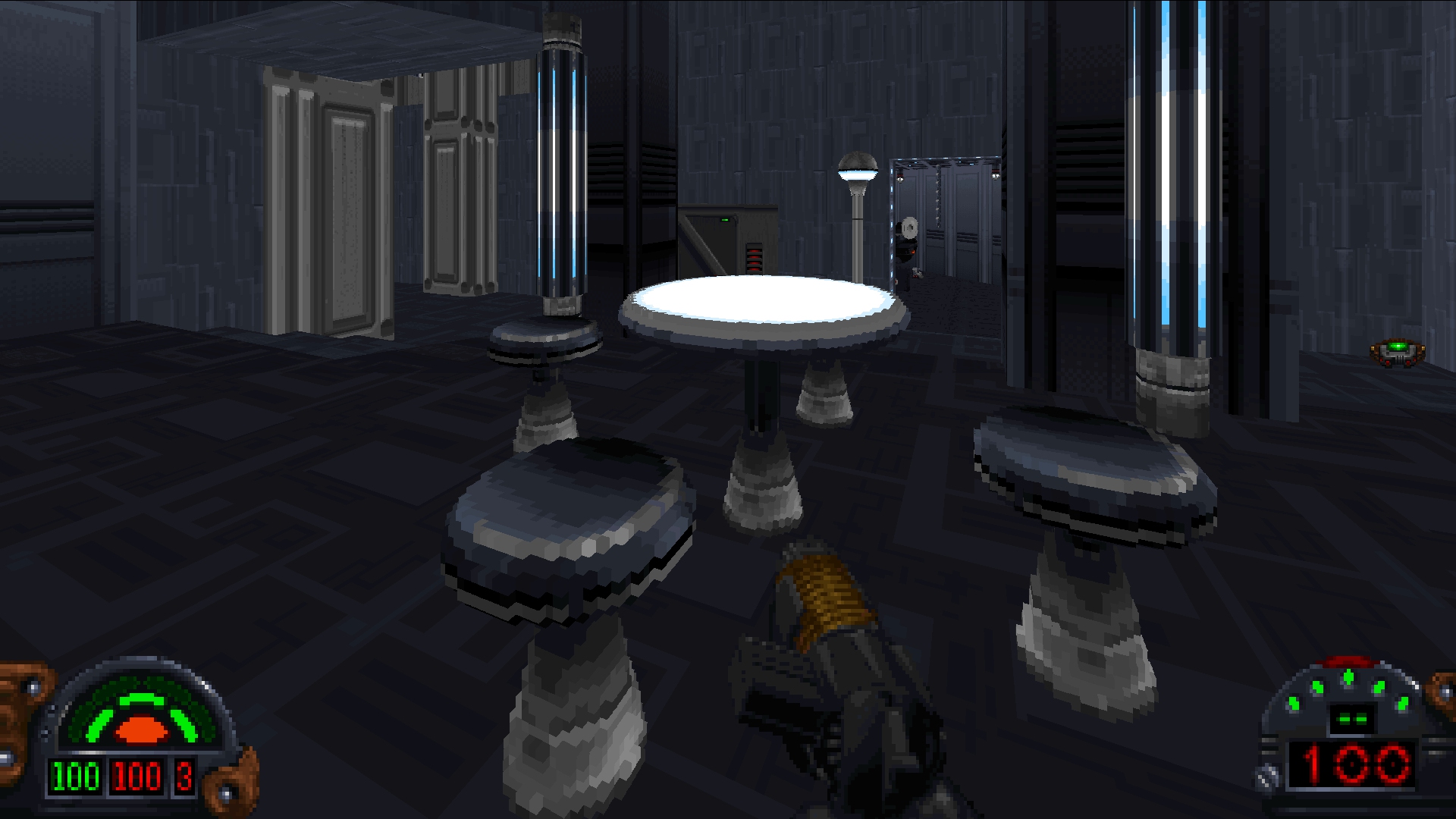

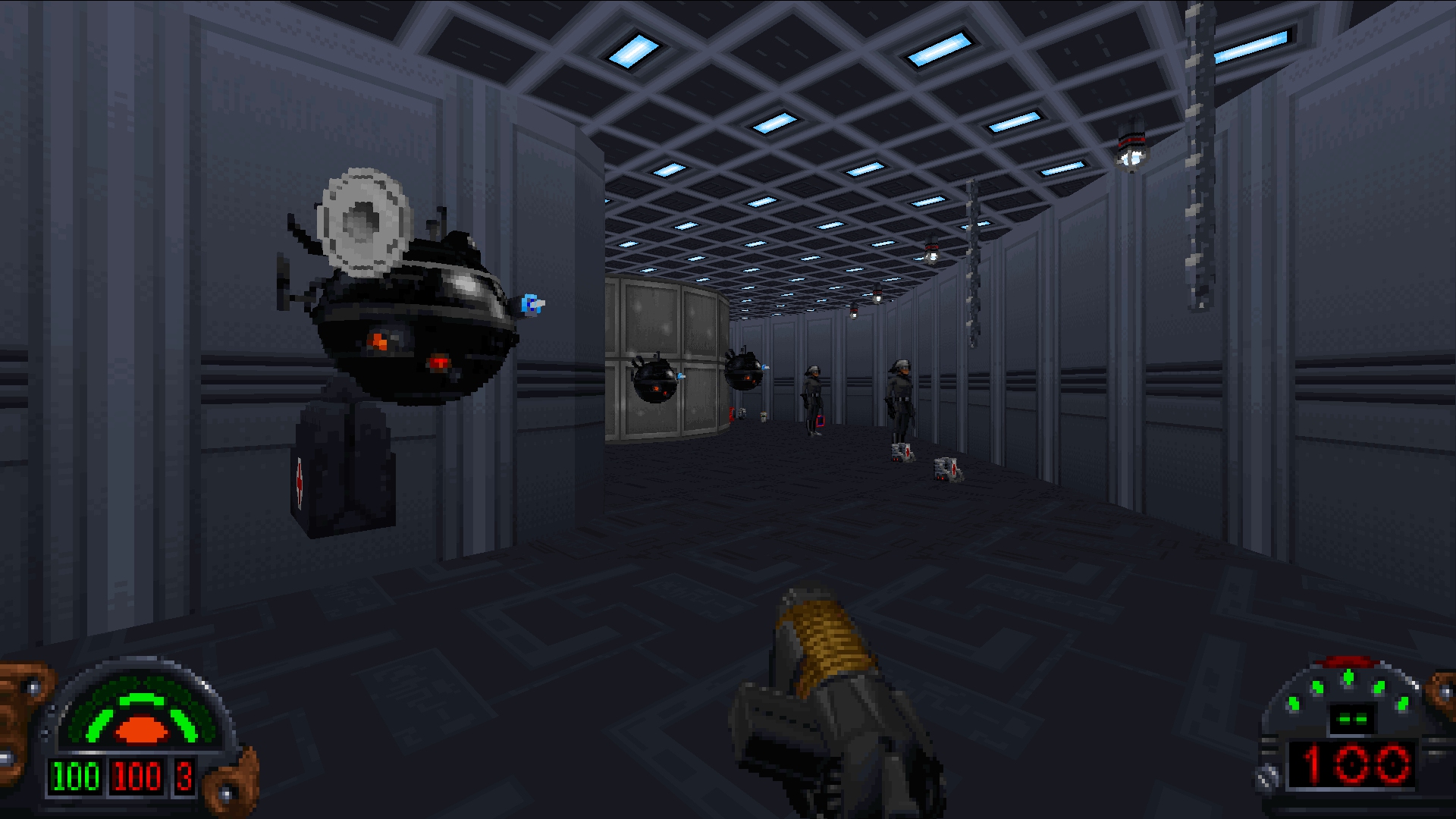

Outlaws - Late 2024

My ultimate goal is Outlaws support in TFE this year, even if it is only single player to start. This will start by adding Outlaws formats and level editing support to the tools. Then there will be a number of releases throughout the year as Outlaws support is implemented - similar to Dark Forces before version 1.0 was released. A number of new features will come with this that can be used with non-vanilla Dark Forces mods, such as digital music playback.

25 Sep 2023

Version 1.09.5

Recently, the True Color build was released. With it, several features were added such as the True Color mode, texture filtering - including sharp bilinear, the ability to adjust the field of view, enhancements to the captions/subtitles system - such as choosing between fonts and UTF8 support, as well as a variety of bug fixes.

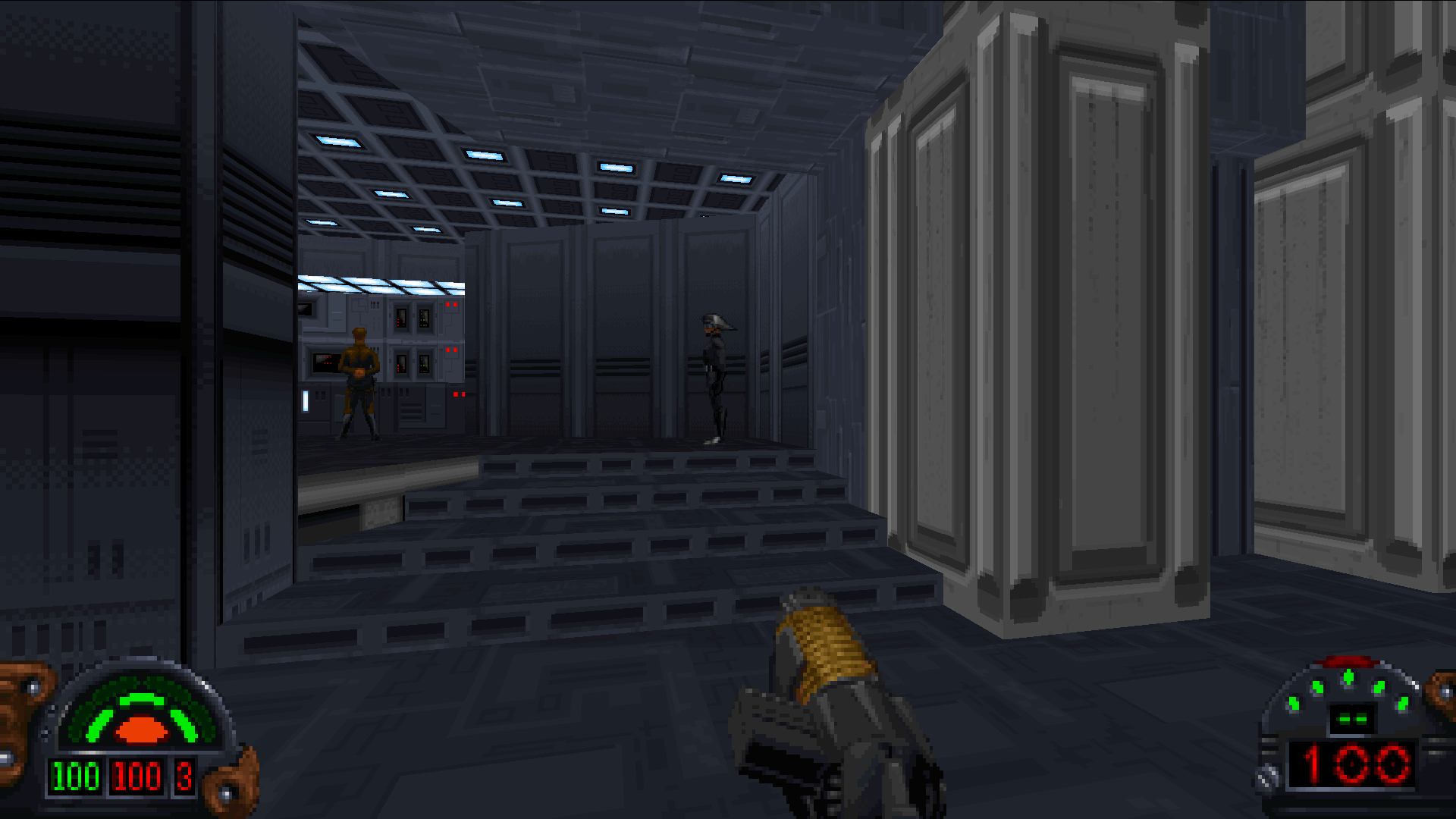

Upcoming Bug-Fix Release

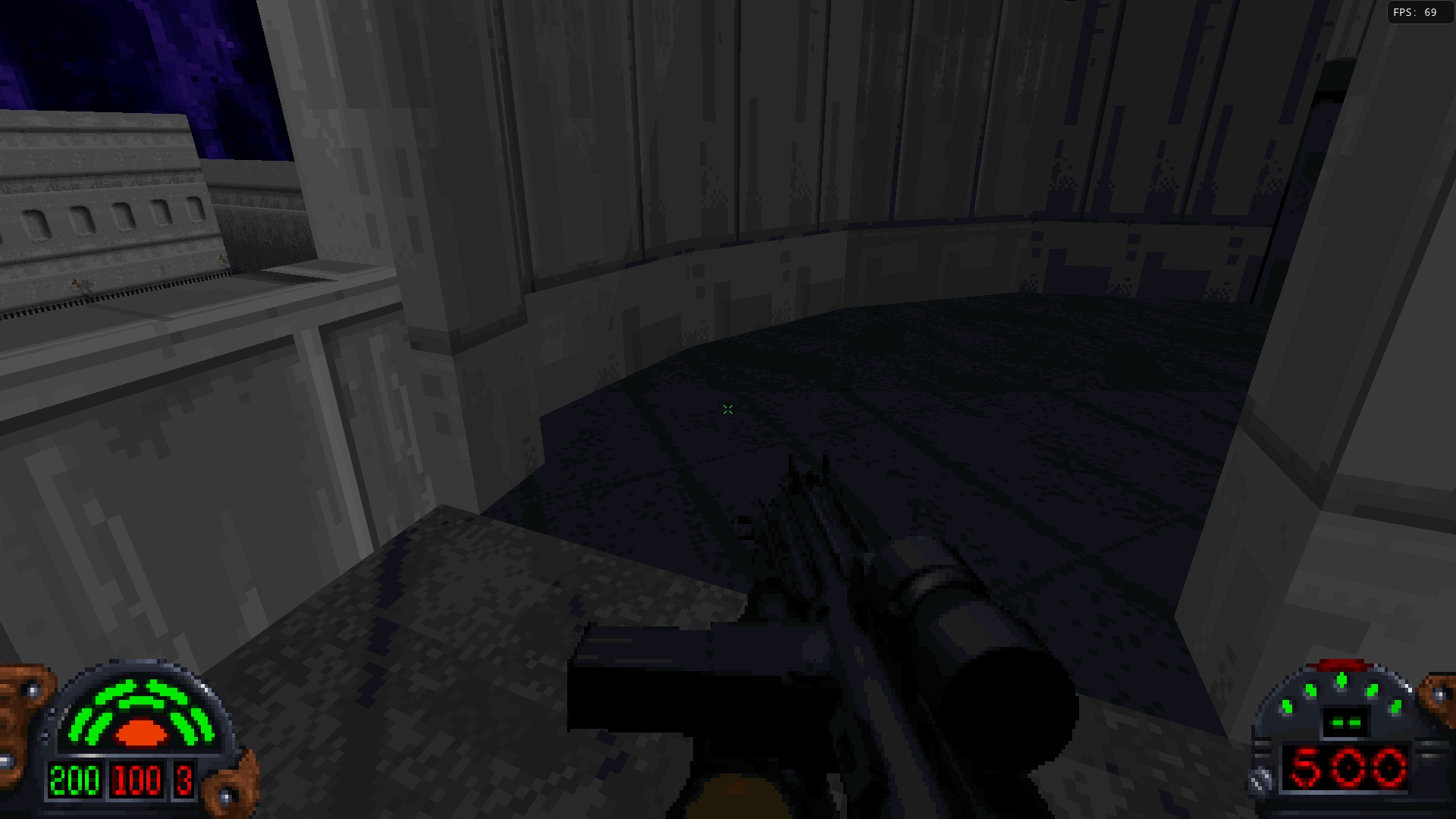

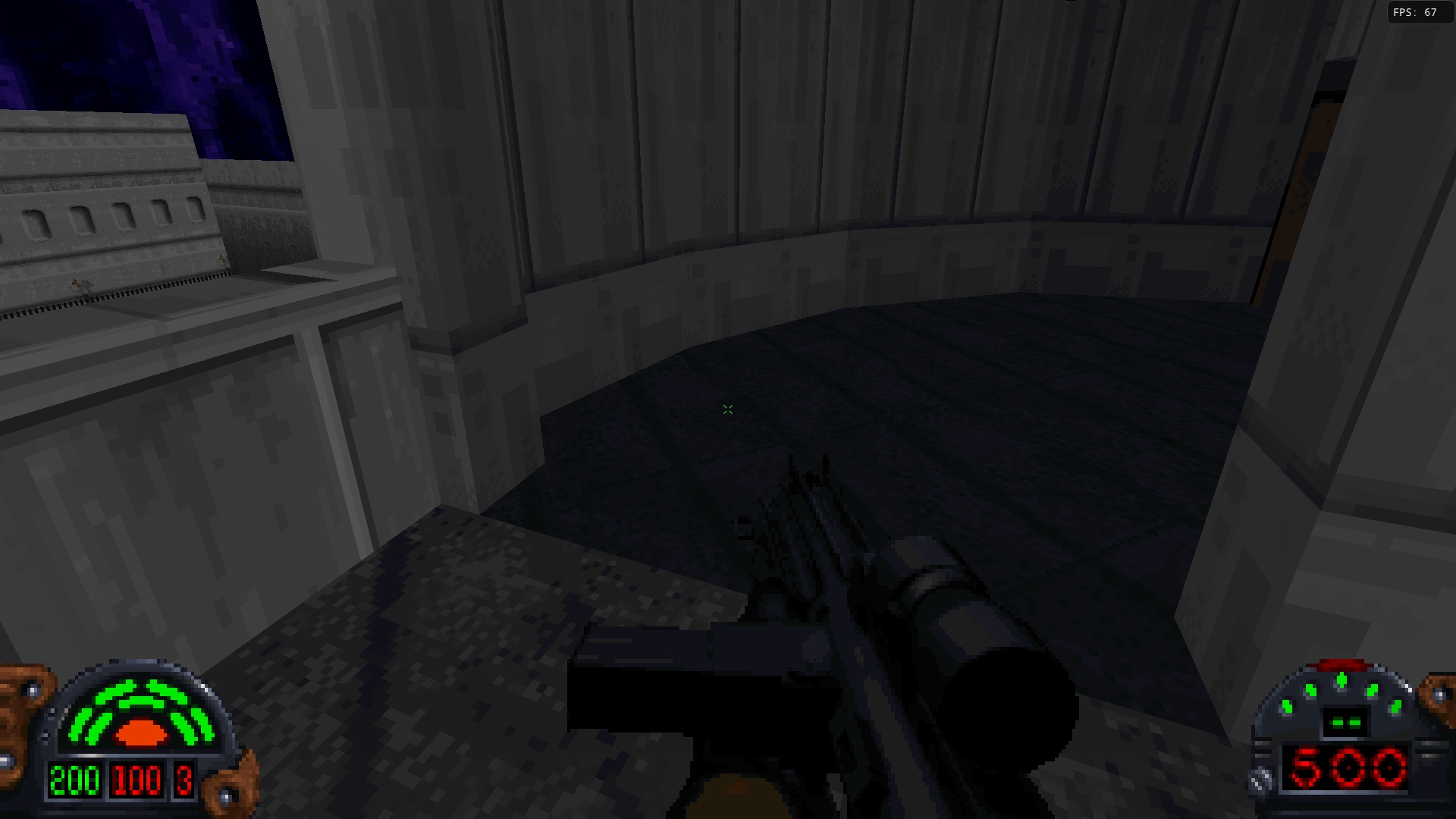

Since then, a number of bugs have been addressed. One large issue people found with the true color mode is that some textures did not have the correct hue and saturation at low light values (roughly half and lower). So I have been working on a new experimental automatic “per-texture” adjustment, which works well in most cases, though some textures in some mods still have issues.

Left is adjusted, and right is without the per-texture adjustment

Moving Forward

Moving forward, I plan on taking a break from feature work to instead focus on restoring and improving on the built-in tools. There are multiple reasons for this:

- Having tools will make it easier to implement new features such as voxel support and Outlaws engine enhancements.

- I can start getting the Outlaws assets loading, including levels, in preparation for Outlaws support.

- To improve forward momentum in terms of modding and Outlaws support.

- I can change up the work I am doing to help keep the project fresh.

Current Progress

I have restored the Editor option in the menu and have a first-pass implementation of the Asset Browser working, though only for textures and palettes at the moment.

- All textures in the base game can be viewed.

- Textures are automatically assigned a useful palette.

- The palette on textures can be visually changed.

- Textures can be viewed at different light levels to see how the colormap affects them.

- You can scrub through frames of animated textures.

- Palettes and colormaps can be viewed.

- Assets shown can be limited to those that are used in specific levels.

Current Plans

Rough editor plans going forward. This isn’t all of the work needed, but instead captures the initial chunk of work to make the editors usable. Planning will continue once a decent amount of this has been done.

There are two main “work streams” listed here - the Asset Browser and the Level Editor. However, the Asset Browser stream is not intended to be finished before the Level Editor work begins. Most likely I will be jumping between them as it makes sense for the work.

Note also that work already done for the Asset Browser is not listed here.

Asset Browser / Core

I. Core Systems

- Add Editor Config

- Editor Data Path

- Path to store editor settings, thumbnails, and other editor specific data.

- Export Path

- For generic, non-project based file exports and conversions.

- UI Font Scale

- UI Thumbnail Scale

- Compute default UI scaling based on monitor resolution.

- UI scaling should be adjustable.

- Separate thumbnails from Asset Info image(s).

- Generate thumbnails and store to disk.

- Load generated thumbnails if they exist, else generate.

- Do NOT preload textures, images, and other data except for thumbnails. Lazy loading.

- Handle font scale, set default based on monitor resolution.

- Handle thumbnail scale, set default based on monitor resolution.

- Add Export option for textures and palettes.
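The thumbnail items above amount to a disk-backed, lazily populated cache plus a resolution-based default scale. A minimal Python sketch under assumed names (`ThumbnailCache`, `default_ui_scale`, the `.thumb` extension, and the 1080p baseline are all hypothetical, not TFE's editor code): a thumbnail is generated only when first requested, written under the editor data path, and loaded from disk on later runs.

```python
import os

def default_ui_scale(monitor_height: int, base_height: int = 1080) -> float:
    """Hypothetical heuristic: scale relative to a 1080p baseline, rounded to
    the nearest 0.25 and never below 1.0. Users can still override it."""
    return max(1.0, round(monitor_height / base_height * 4) / 4)

class ThumbnailCache:
    """Lazily loads thumbnails: memory first, then disk, then generate and save."""

    def __init__(self, cache_dir, generate):
        self.cache_dir = cache_dir    # e.g. a folder under the editor data path
        self.generate = generate      # callable: asset name -> thumbnail bytes
        self.loaded = {}              # thumbnails already loaded this session

    def _path(self, asset_name):
        return os.path.join(self.cache_dir, asset_name + ".thumb")

    def get(self, asset_name):
        if asset_name in self.loaded:
            return self.loaded[asset_name]
        path = self._path(asset_name)
        if os.path.exists(path):
            with open(path, "rb") as f:       # previously generated: load from disk
                data = f.read()
        else:
            data = self.generate(asset_name)  # first request: generate and store
            os.makedirs(self.cache_dir, exist_ok=True)
            with open(path, "wb") as f:
                f.write(data)
        self.loaded[asset_name] = data
        return data
```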

II. Resources

- Add the ability to add and remove resources (zips, gobs, folders).

- Add the ability to ignore or hide core game resources.

III. Functionality

- Frame browser.

- Refactor code based on having three different asset types working.

- Split into shared and unique parts.

- Factor out common UI patterns.

- Sprite browser.

- 3D Object browser.

- Level browser.

- Final refactor step.

Projects

I. Core

- Project system.

- Name, Description, Path, Type (Level, Resources), Game (Dark Forces, Outlaws), Feature Set (Vanilla, TFE).

- Menu options - New, Open, Close, Recent.

- Create project from existing GOB/Zip/Folder.
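The project fields listed above map naturally onto a small record type. This is a hypothetical Python sketch (the class names, JSON key names, and the Recent-list limit are assumptions, not a TFE file format) showing a project descriptor that round-trips through JSON, plus a helper for the Recent menu.

```python
import json
from dataclasses import dataclass
from enum import Enum

class ProjectType(Enum):
    LEVEL = "Level"
    RESOURCES = "Resources"

class Game(Enum):
    DARK_FORCES = "Dark Forces"
    OUTLAWS = "Outlaws"

class FeatureSet(Enum):
    VANILLA = "Vanilla"  # assumed: content limited to vanilla-compatible features
    TFE = "TFE"          # assumed: content may use TFE-only features

@dataclass
class Project:
    name: str
    description: str
    path: str
    project_type: ProjectType
    game: Game
    feature_set: FeatureSet

    def to_json(self) -> str:
        # Enums are stored by their human-readable value.
        return json.dumps({
            "name": self.name,
            "description": self.description,
            "path": self.path,
            "type": self.project_type.value,
            "game": self.game.value,
            "featureSet": self.feature_set.value,
        })

    @staticmethod
    def from_json(text: str) -> "Project":
        d = json.loads(text)
        return Project(d["name"], d["description"], d["path"],
                       ProjectType(d["type"]), Game(d["game"]),
                       FeatureSet(d["featureSet"]))

def push_recent(recent: list, path: str, limit: int = 10) -> list:
    """Move path to the front of the Recent list, removing duplicates and trimming to limit."""
    return ([path] + [p for p in recent if p != path])[:limit]
```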

II. Import/Edit

- Add Import features for each asset type.

- Convert from true-color, etc.

- Create complex types - such as Wax data.

- Create voxel model editor to handle import, settings, and setting up animations.

- Port software voxel renderer over from the branch.

- Add GPU-based voxel renderer.

- Add “map hacks” equivalent for adjusting voxel models (though it might be good to add support for this to the level editor, so it can be done visually).

III. Object Templates

- Auto-generate object/logic templates based on the original game.

- Add the ability to edit and create new object templates.

Outlaws

I. Initial Work

- Add support for Outlaws formats (PCX, LVT/LVB, NWX, etc.).

Level Editor

Plans here are less clear; the goal is to get the basics working again and then plan from there.

I. Restoration / Refactor

- Basic UI - ported to new UI patterns and supporting UI scaling.

- Add support for bindable level editor hotkeys (similar to the game).

- Restore level editor rendering.

- Implement new triangulation based on constrained Delaunay triangulation (much more robust than the library I was using, and it removes the triangulation dependency since I will write it myself).

- Use Project system to allow for creating new levels and editing existing levels.

- Restore basic editing.

- Basic Outlaws level loading / support.

22 Aug 2023

As many of you have seen, Nightdive has announced their Dark Forces Remaster. They have partnered with me in a technical advisory role on the project, which includes exchanging information and providing some TFE code. So I have written a FAQ to answer likely questions.

- What impact does this announcement have regarding The Force Engine (TFE)?

This announcement has no negative impact on TFE; existing development plans, including the level editor and Outlaws support, are continuing as-is.

- What sort of Dark Forces assets (art, etc.) does Night Dive have access to?

As seen below, I am not a Night Dive employee and so cannot say.

- Are you a Night Dive employee or getting paid by Night Dive?

I am not a Night Dive employee, no money has changed hands, and I have no inside information I can share regarding schedules, other games in development and so forth.

- Do TFE and the Night Dive version share code?

While it is true that some TFE code has been shared to help in development, such as iMuse, these projects use different code bases and use different implementations for many components - such as very different GPU renderers. The Night Dive project uses Kex, TFE uses its own framework. I have no access to the Night Dive code base.

- Were contributor assets or code shared with Night Dive?

Only code reverse-engineered or written by myself has been shared with Night Dive. I have not shared any code or assets contributed by others with Night Dive.

- When did this partnership begin?

While I cannot give exact dates, it began when the reverse-engineering process for TFE and the work on version 1.0 were nearly complete.

- Why agree to this partnership? Did TFE development benefit?

There were a number of reasons to agree, and it seemed like a good idea to help TFE be seen in a positive light (and I still think this is true). TFE has also benefited from the exchange of information (such as one of the developers pointing out a bug in iMuse).

Night Dive will also be helping me to support the new content they are creating in TFE - though you will be required to purchase the new version of Dark Forces in order to use that content.

In other words, this partnership was beneficial for all parties involved.

- Why not mention this before?

Mentioning this before the announcement would leak information about the project which I agreed not to do. It’s that simple.

31 Jul 2023

General Update

Some time ago, I started on the “True Color” release for TFE - originally planned to be version 1.20, and then moved to 1.10. During development, the amount of time available for TFE shrank dramatically due to various events and obligations. As a result, work slowed to a crawl, and honestly the True Color branch became a mess of partially implemented features.

Recently, things have settled into a new normal and time for TFE has opened up again. However, given the mess that version 1.10 turned into, I needed a plan to finish and release the features I had worked on, and to increase the release cadence once again. So I decided to split the 1.10 release into multiple smaller releases, port the code for each release to master as needed, and finish the features there.

Version 1.10 Plan

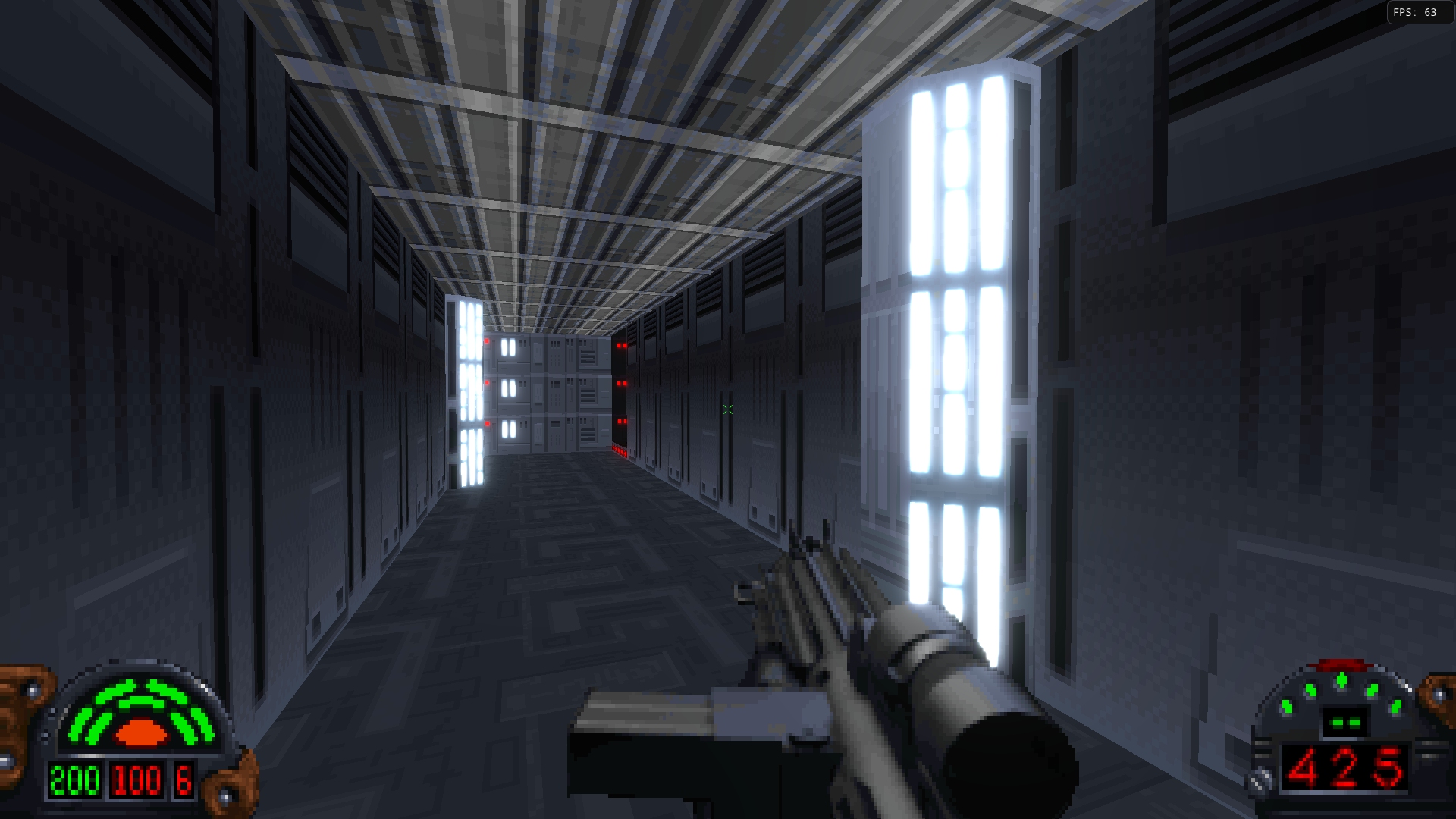

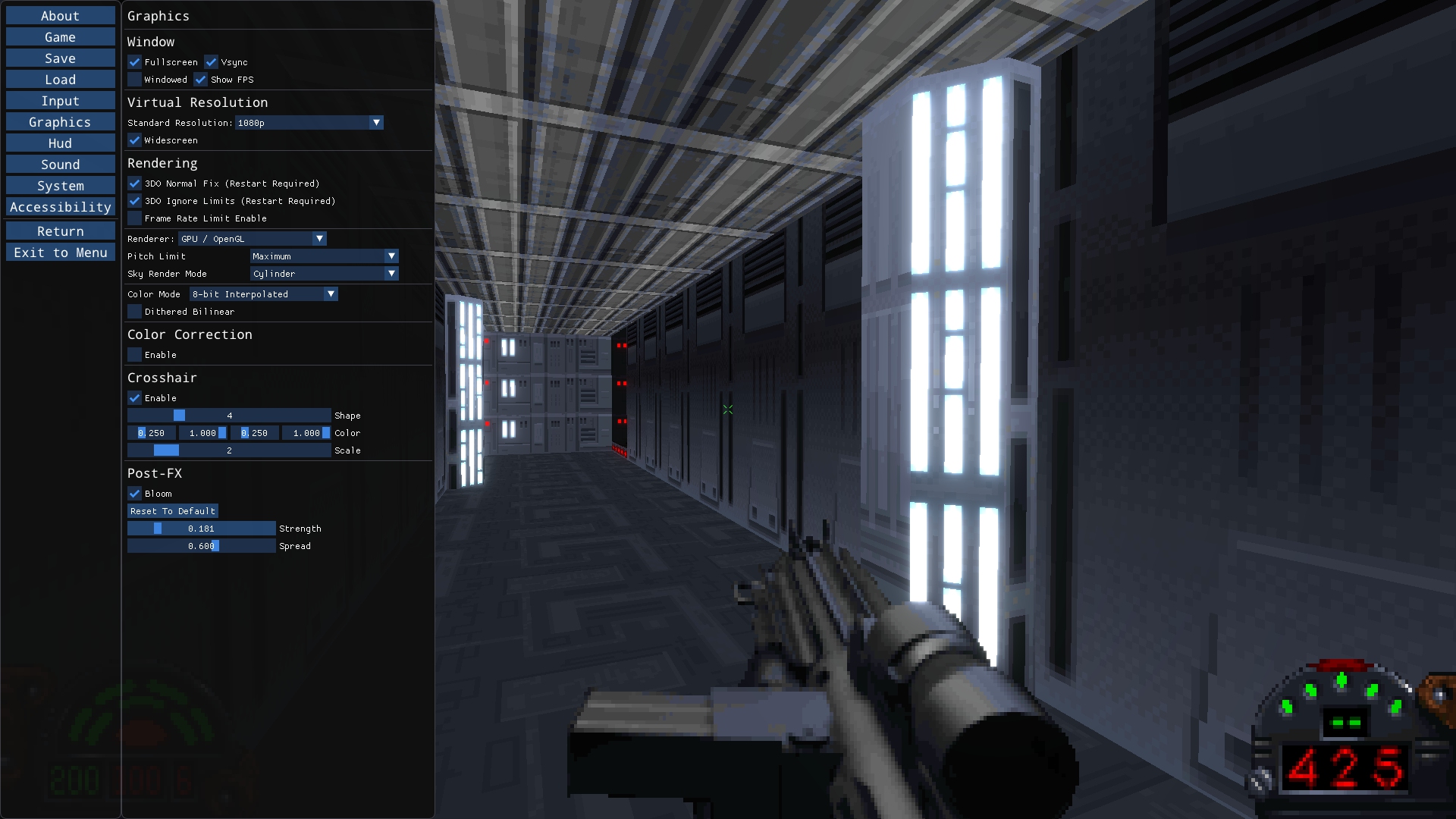

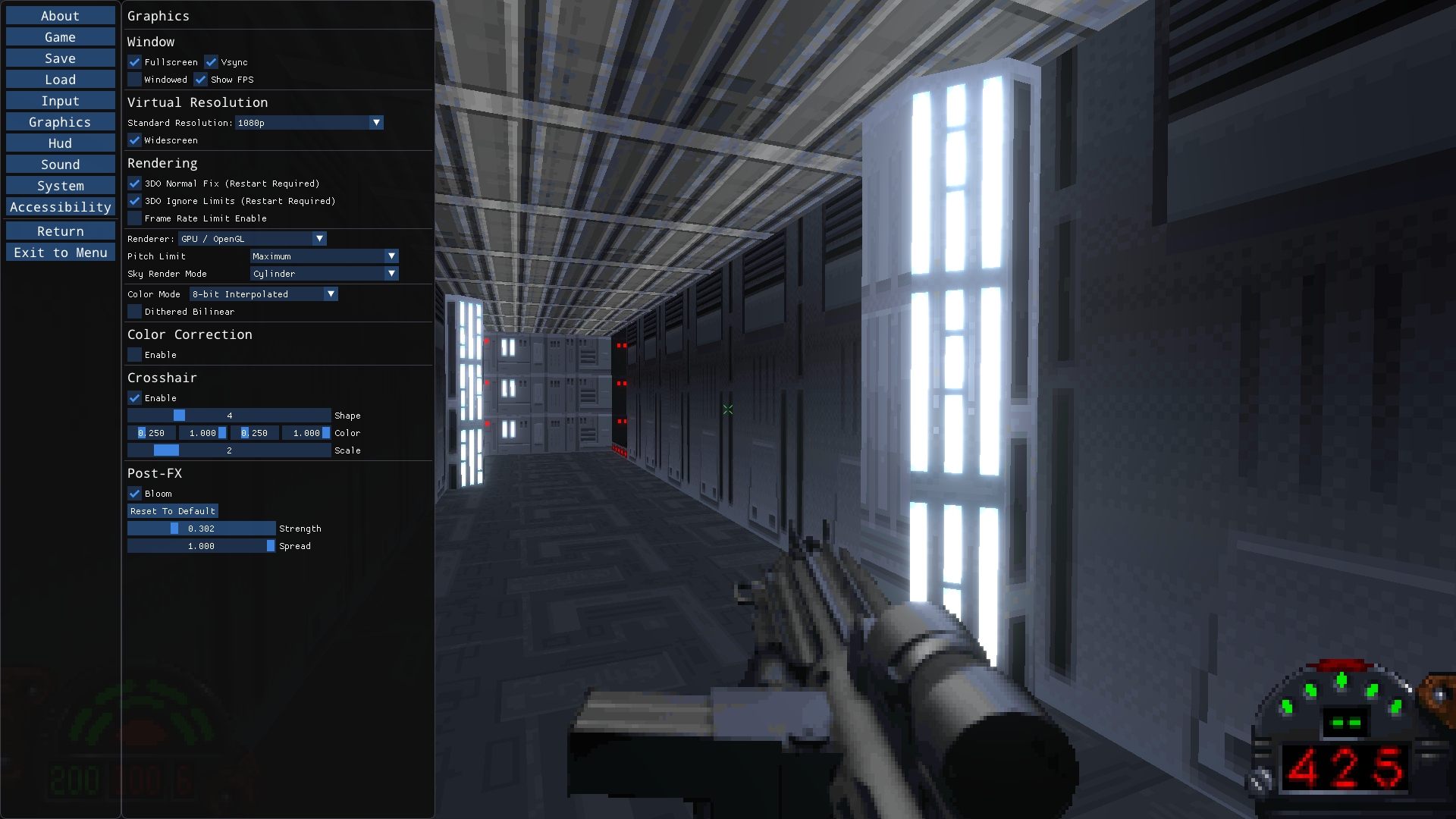

Version 1.09.4 - Bloom

This release (see below).

Version 1.09.5 - True Color

- True-color mode (textures are pre-converted to 32-bit color on load, the “material” texture is another texture generated or loaded).

- Bilinear and Sharp Bilinear texture filtering options (previously shown in screenshots).

- Mipmapping and Anisotropic filtering options.

- Colormap handling in True-Color (simple method initially).
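Pre-converting textures on load means the palette lookup happens once per texel rather than once per rendered pixel. A minimal Python sketch, assuming a Dark Forces style palette of 256 RGB triplets with 6-bit (0..63) VGA channel values and a packed 0xAARRGGBB output format; `convert_texture` and its `transparent_index` parameter are hypothetical and not TFE's loader.

```python
def expand_6bit(v):
    """Expand a 6-bit VGA channel (0..63) to 8 bits (0..255) by replicating high bits."""
    return (v << 2) | (v >> 4)

def palette_to_rgba(pal_bytes):
    """Convert a 768-byte 6-bit palette into 256 packed 0xAARRGGBB colors."""
    if len(pal_bytes) != 768:
        raise ValueError("expected 256 RGB triplets")
    out = []
    for i in range(256):
        r, g, b = (expand_6bit(c) for c in pal_bytes[3 * i:3 * i + 3])
        out.append(0xFF000000 | (r << 16) | (g << 8) | b)
    return out

def convert_texture(indices, palette_rgba, transparent_index=None):
    """Convert an 8-bit indexed texture to 32-bit color once, at load time.
    If transparent_index is given, that index becomes fully transparent (0)."""
    return [0 if i == transparent_index else palette_rgba[i] for i in indices]
```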

Version 1.09.6 - Lighting

- Dynamic light support.

- Per-object light settings using text-based definition files.

- Automatic light generation for objects based on emissive values, if no definition is specified.

Version 1.10 - True Color Assets

- Support for true-color sprites and frames.

- Support for true-color textures.

- Subtitles/Captions for cutscenes and in-game (@kevin_foley).

Version 1.09.4

Version 1.09.4 is the first of those releases. It was meant to focus on getting the “Bloom” feature implemented, but as it turns out a couple of other large features made it in as well. Below is the full change list, followed by a closer look at the more impactful features.

Changes

- Smooth Vue Animations (optional).

- Fixed color flashing when switching between levels.

- Ported over GPU Renderer portal fixes - now supports up to 65536 visible portals as originally intended.

- Fixed GPU Renderer issues when using more than 65536 vertices for sector or sprite geometry in a frame.

- Fixed Wireframe so it works correctly in release mode, and made 3DOs solid color when in wireframe.

- Added a new “Retro” settings template, which matches the “Modern” template from previous versions, while “Modern” now enables new features, such as Bloom, by default.

- Added an “8-bit Interpolated” color mode, which smooths out colormap-based shading and removes most of the banding.

- Added a new console command exportTexture that will export the texture on the surface the camera is currently pointing at.

- Added a bloom option, with the ability to adjust its strength and spread.

- Added Accessibility options, starting out with Closed Captions / Subtitles (Beta).

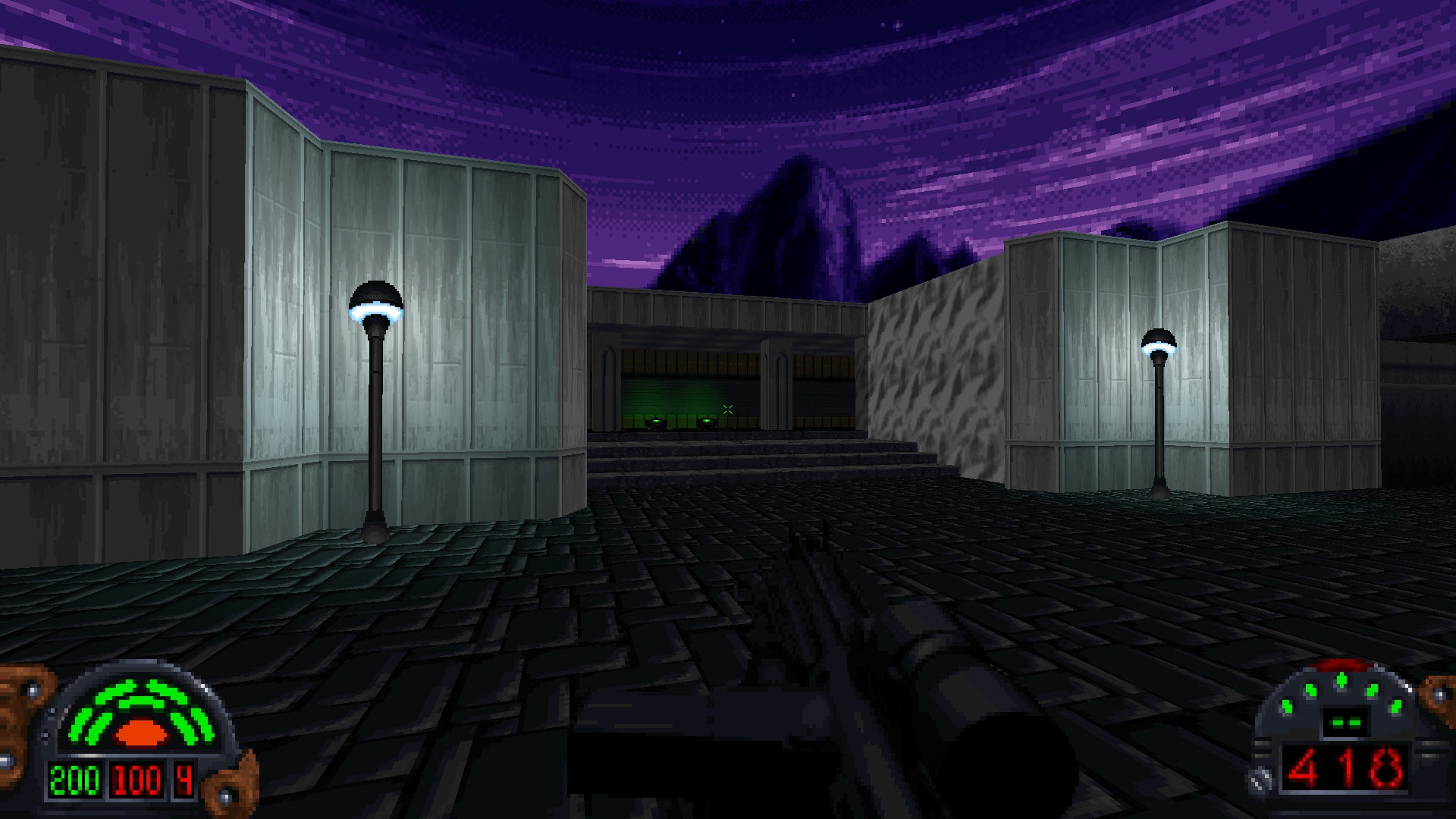

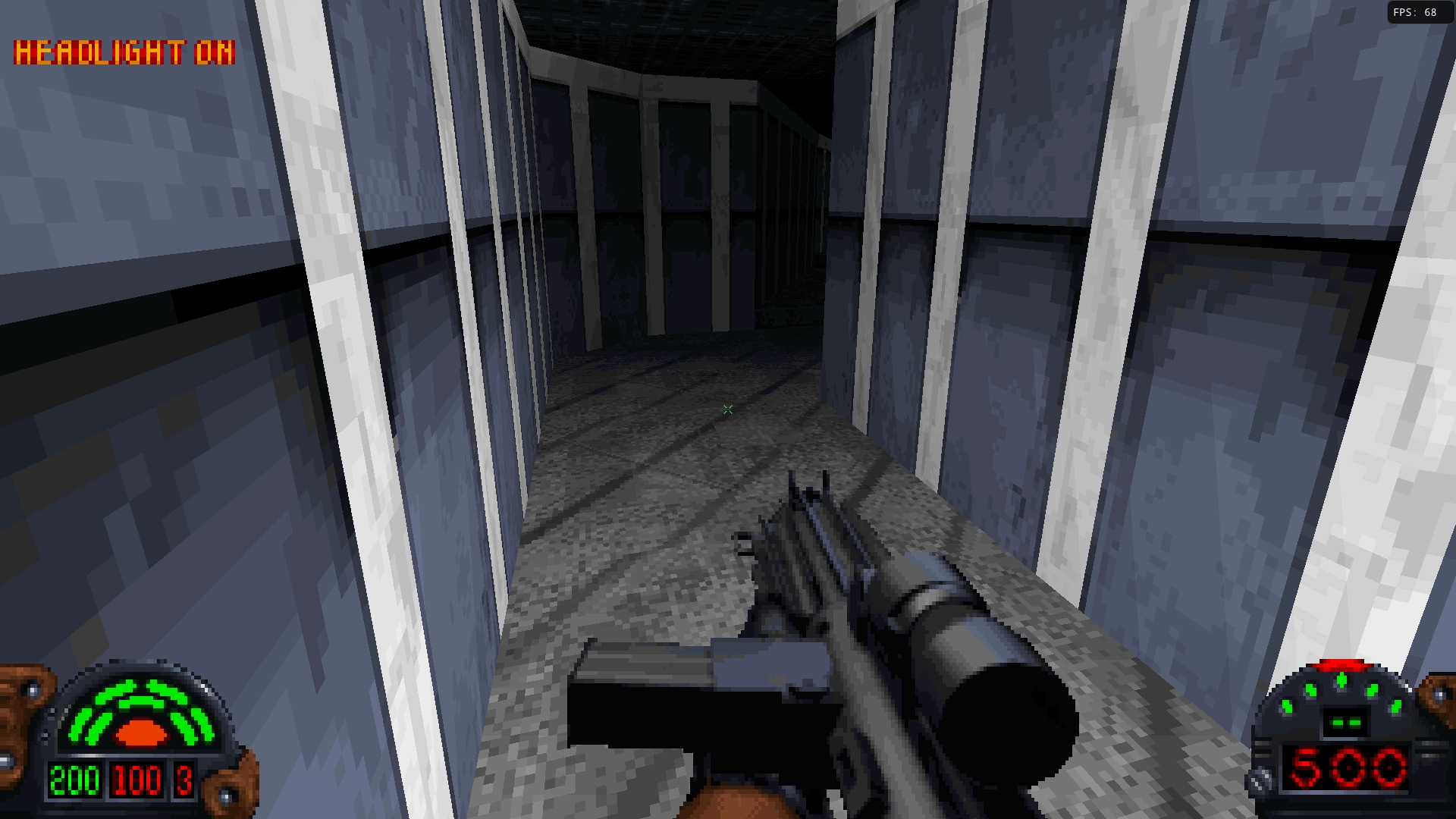

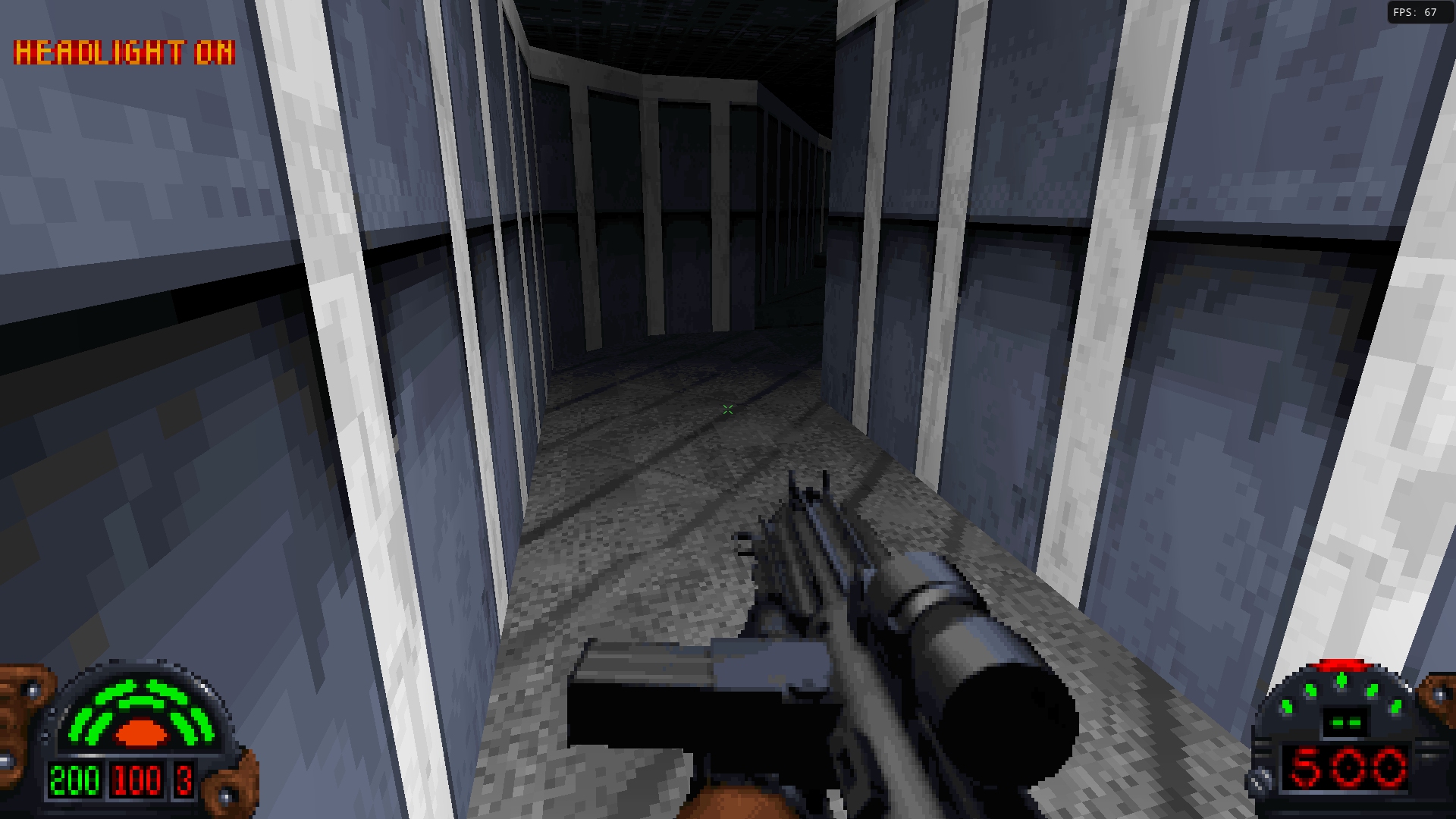

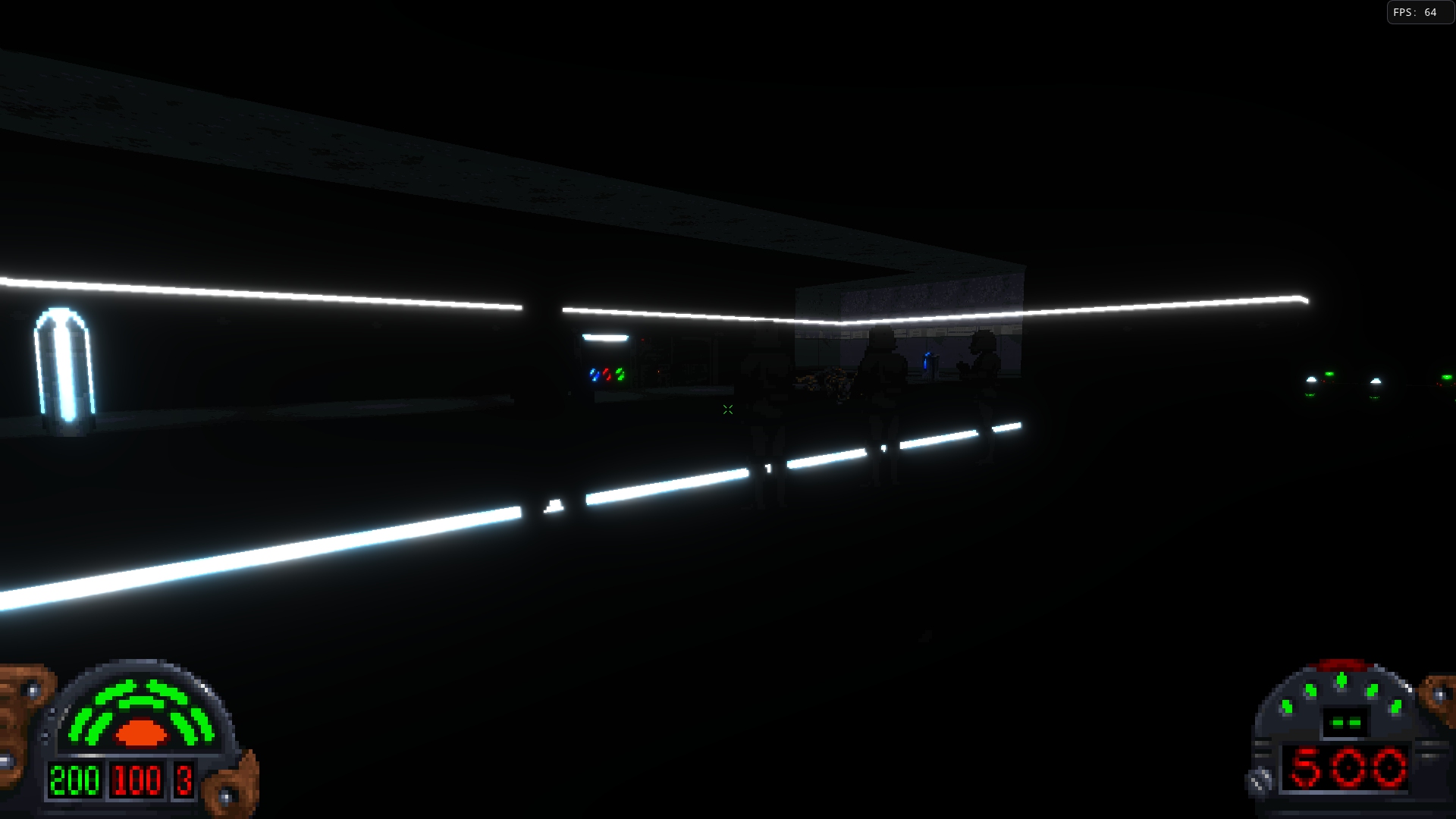

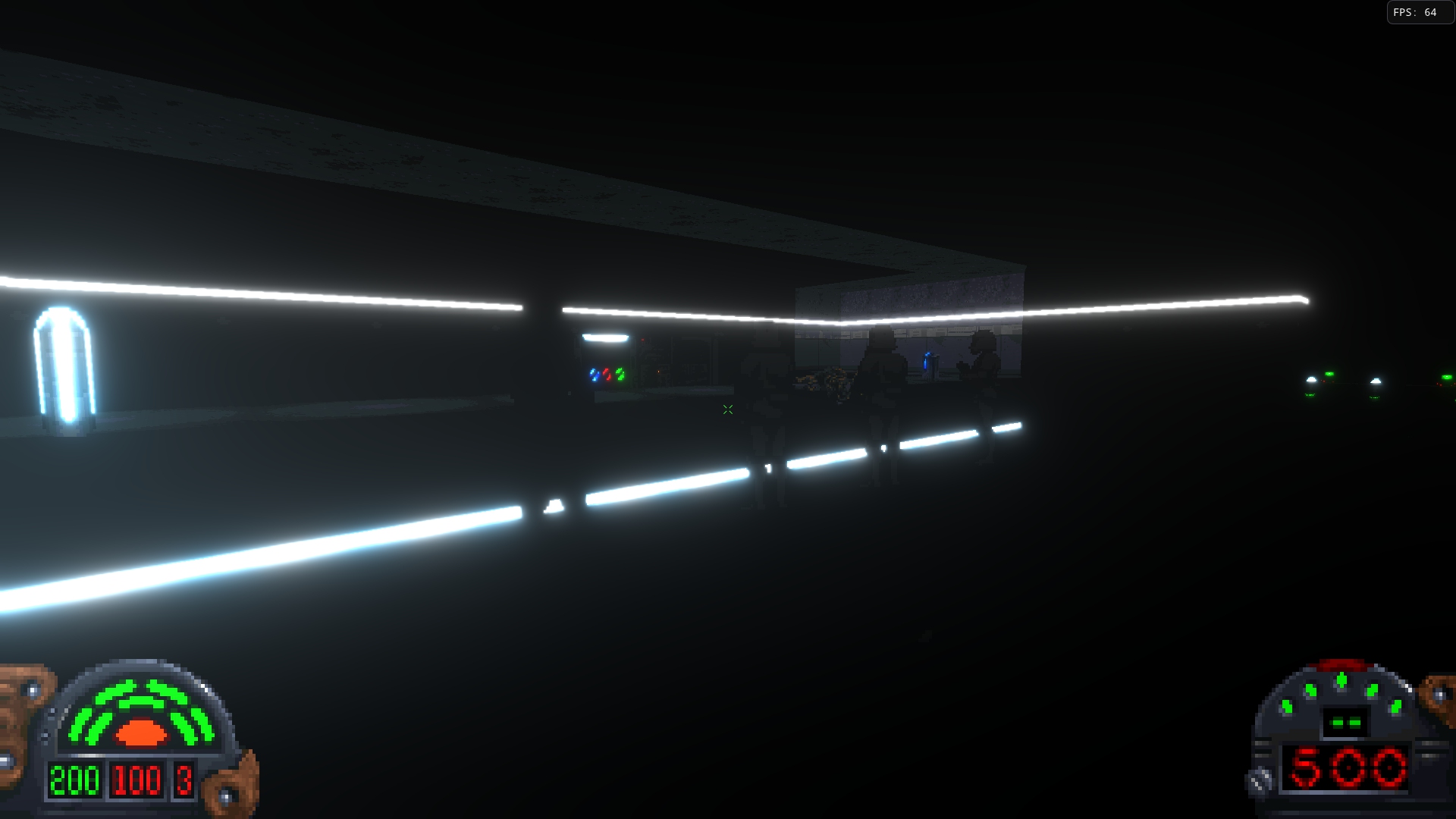

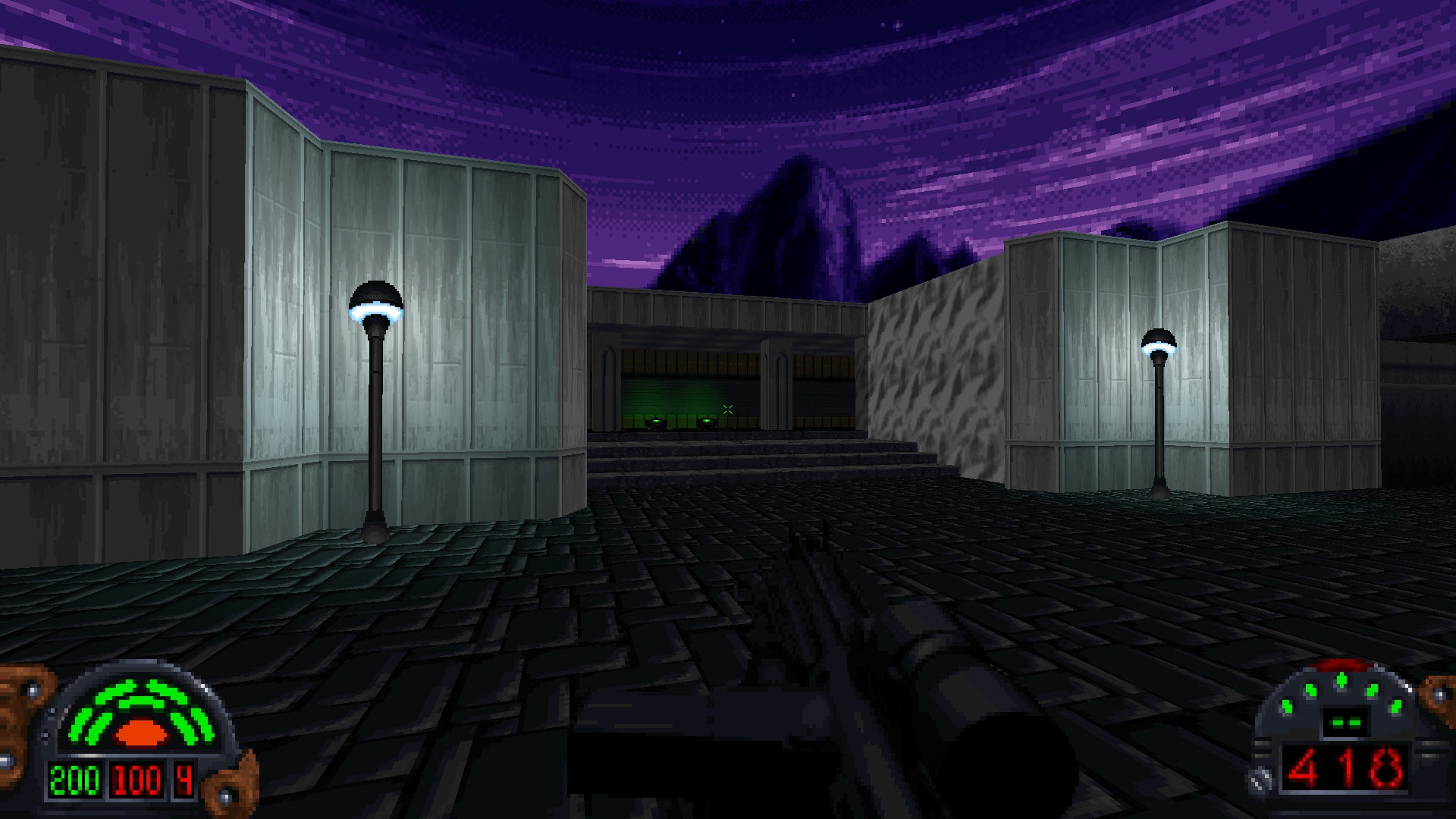

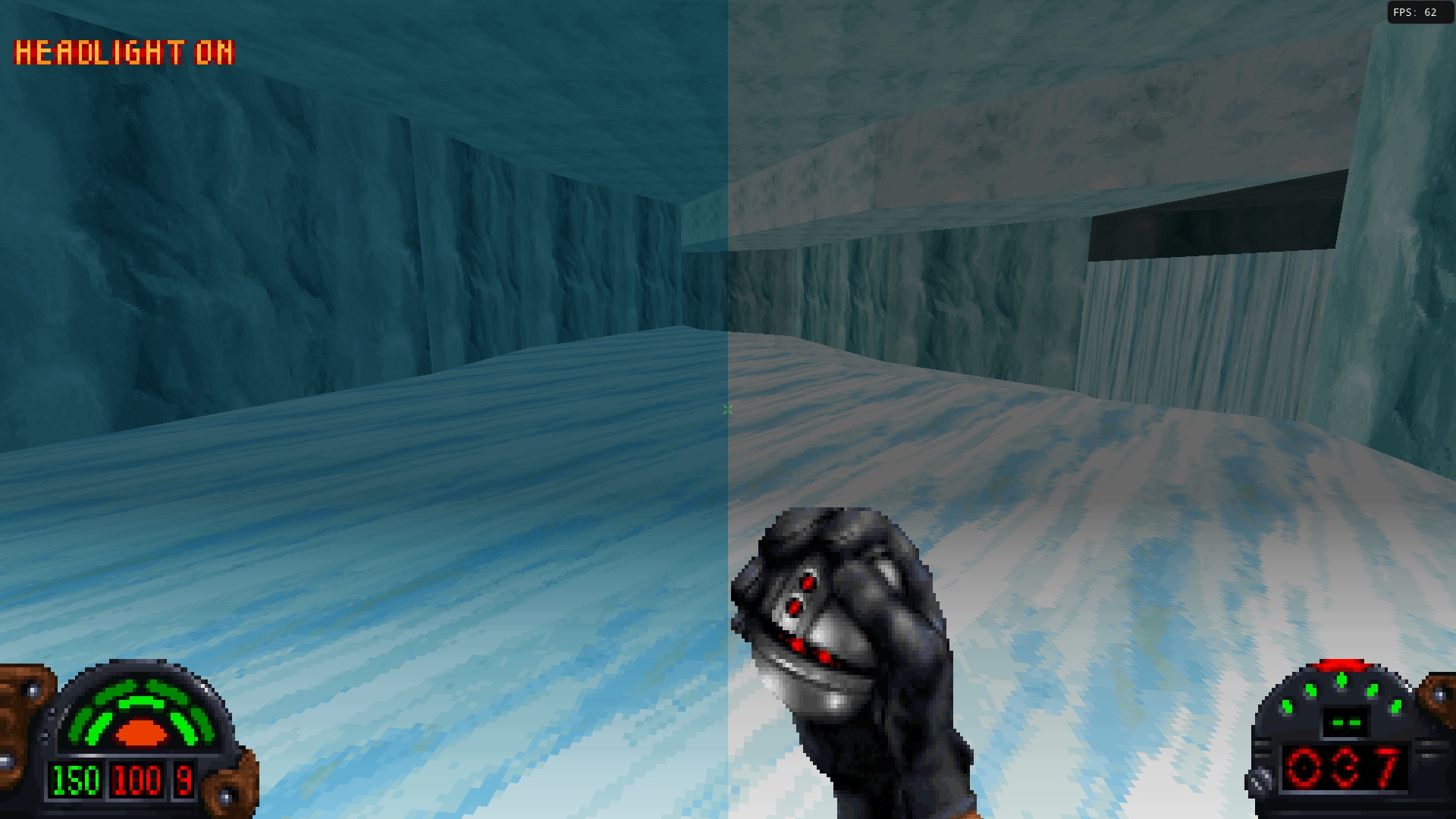

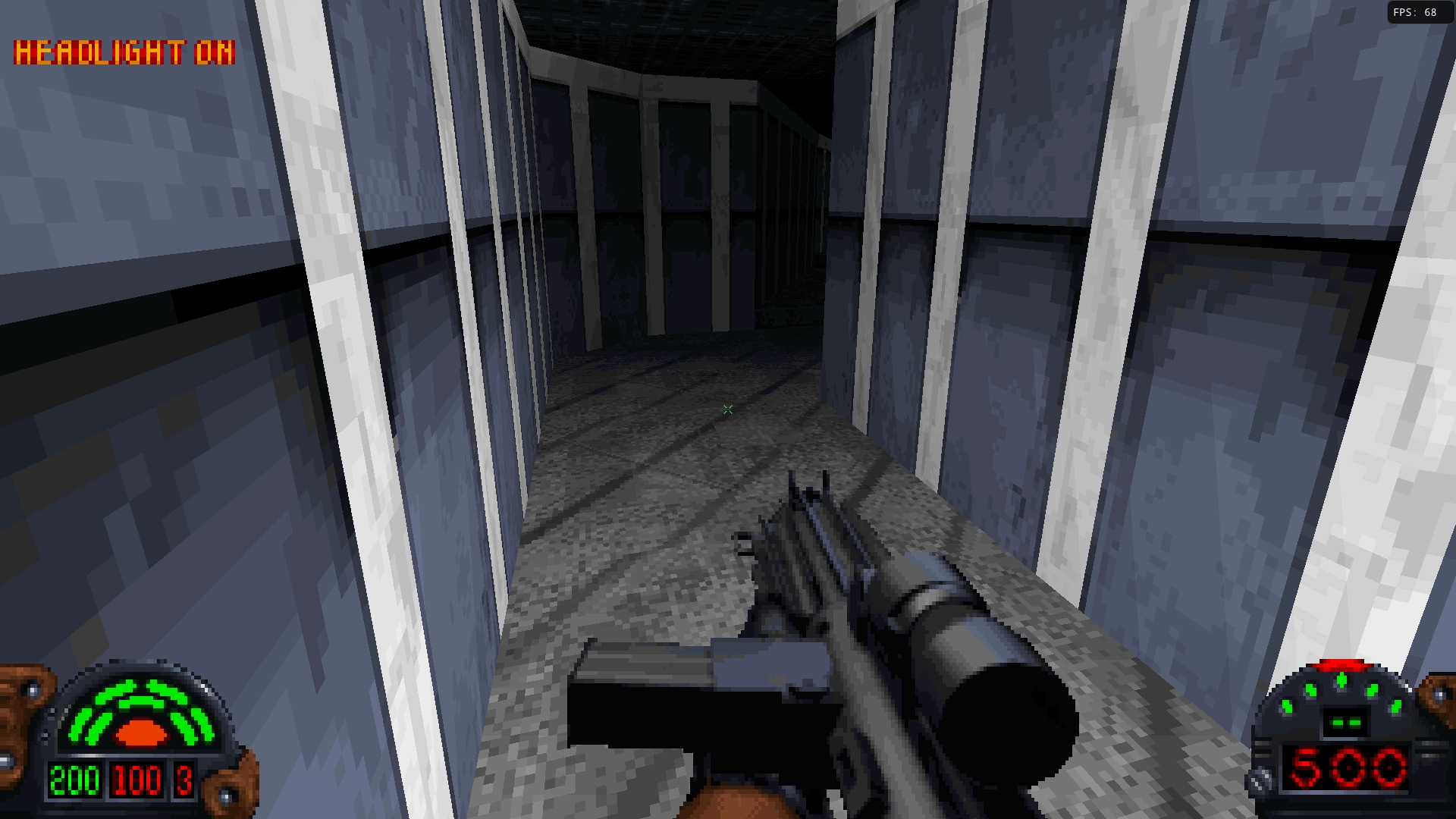

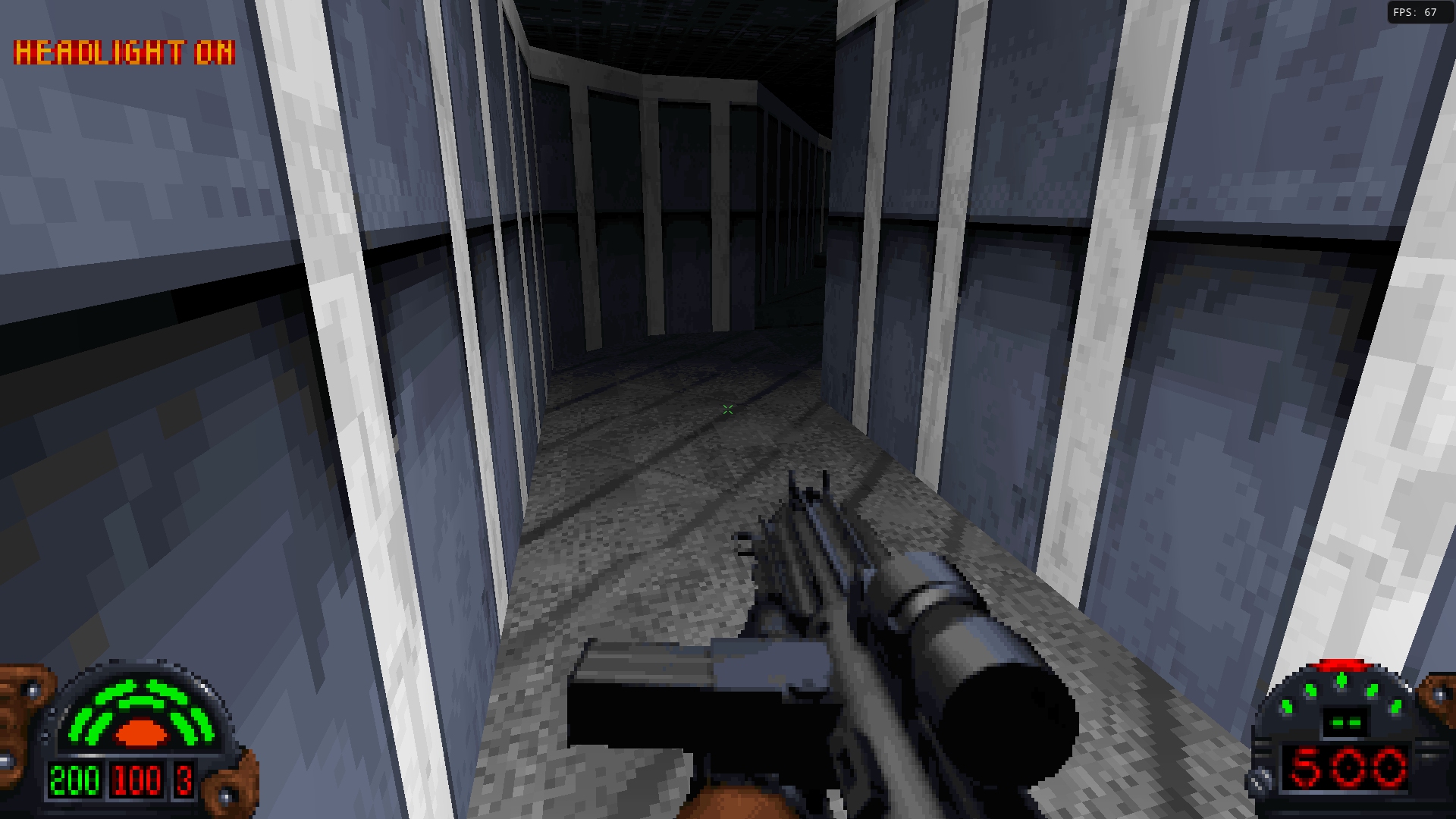

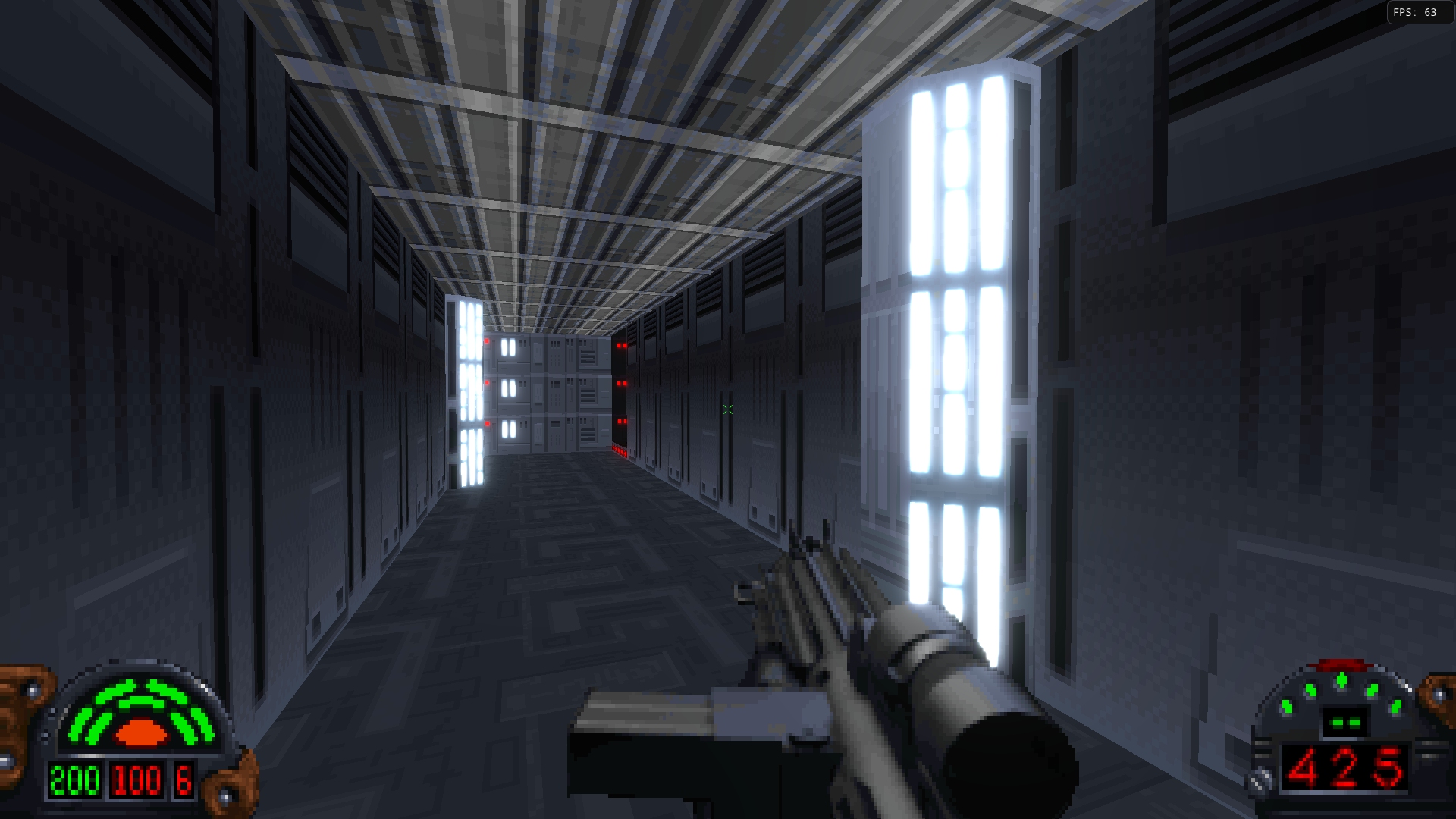

8-bit Interpolated Color

Previously, TFE only supported direct 8-bit colormap based shading producing the same results as the original game. While Dark Forces looks pretty good at higher resolutions, hard banding can be seen in many cases - due to the limited number of shading steps.

Examples:

Another option is to remove the quantization in the shading calculation to generate a smooth result. The issue is: how do you use this smooth result with 8-bit colors and a colormap? TFE uses the shading value to look up the nearest colormap results, and then uses the fractional component to blend between them.

Doing this smooths out shading falloff with distance (where the color gets darker or more foggy farther away), but banding can still be seen when using the headlamp or during weapon fire. The reason is that a 128-entry light ramp is used to map between z-distance and ambient value, and this ramp contains duplicate values, so direct interpolation still leads to hard banding.

The solution that TFE uses is to generate a smoothed version of the light ramp on the CPU when the feature is enabled. This smoothed ramp contains fractional ambient values, and the shaders interpolate between the nearest light ramp values to generate the final result.
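The two ideas above (blending between neighboring colormap rows, and smoothing a light ramp that contains duplicate entries) can be sketched in Python as follows. This is an illustration under stated assumptions, not TFE's shader code: the colormap is modeled as `colormap[level][color_index] -> palette index`, palette entries as RGB tuples, and `smooth_ramp` uses one plausible smoothing rule (spreading each run of duplicates linearly toward the next distinct value) that TFE may or may not share.

```python
import math

def smooth_ramp(ramp):
    """Spread each run of duplicate entries linearly toward the next distinct
    value, producing fractional ambient values (one possible smoothing rule)."""
    out = [float(v) for v in ramp]
    n = len(ramp)
    i = 0
    while i < n:
        j = i
        while j + 1 < n and ramp[j + 1] == ramp[i]:
            j += 1                      # extend the run of identical values
        if j + 1 < n:                   # a distinct value follows the run
            step = (ramp[j + 1] - ramp[i]) / (j - i + 1)
            for k in range(i, j + 1):
                out[k] = ramp[i] + step * (k - i)
        i = j + 1
    return out

def sample_ramp(ramp, t):
    """Linearly interpolate the ramp at a fractional index t (clamped to range)."""
    t = min(max(t, 0.0), len(ramp) - 1.0)
    i0 = int(math.floor(t))
    i1 = min(i0 + 1, len(ramp) - 1)
    f = t - i0
    return ramp[i0] * (1.0 - f) + ramp[i1] * f

def shade_interpolated(colormap, palette, color_index, light):
    """Blend the colors from the two nearest colormap rows instead of snapping
    to one; light is a fractional light level."""
    light = min(max(light, 0.0), len(colormap) - 1.0)
    l0 = int(math.floor(light))
    l1 = min(l0 + 1, len(colormap) - 1)
    f = light - l0
    c0 = palette[colormap[l0][color_index]]
    c1 = palette[colormap[l1][color_index]]
    return tuple(a * (1.0 - f) + b * f for a, b in zip(c0, c1))
```

In a renderer the blend would run per pixel in a shader, with the smoothed ramp supplying the fractional light level that `shade_interpolated` consumes.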

Comparison Images:

Vanilla / Interpolation

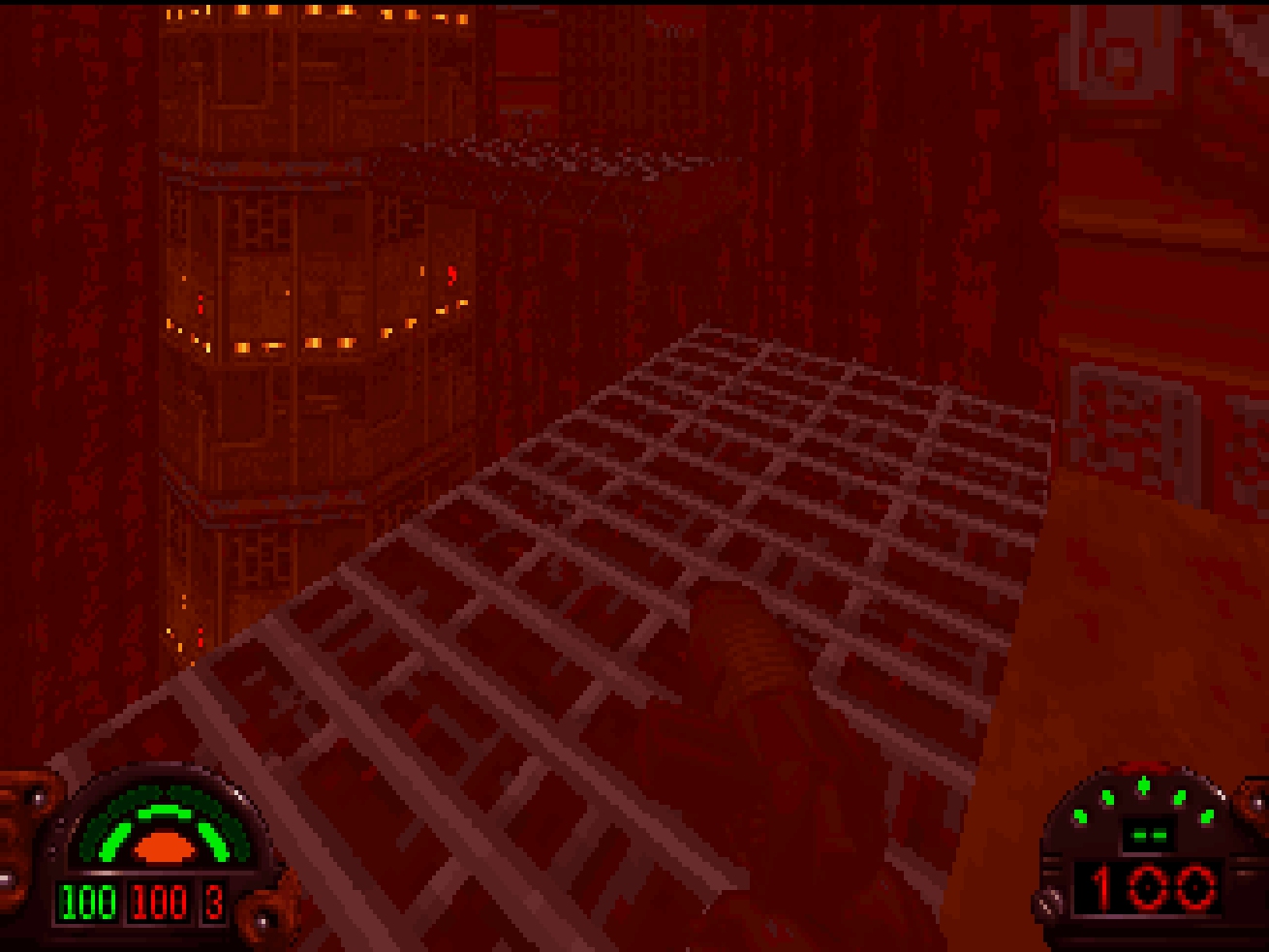

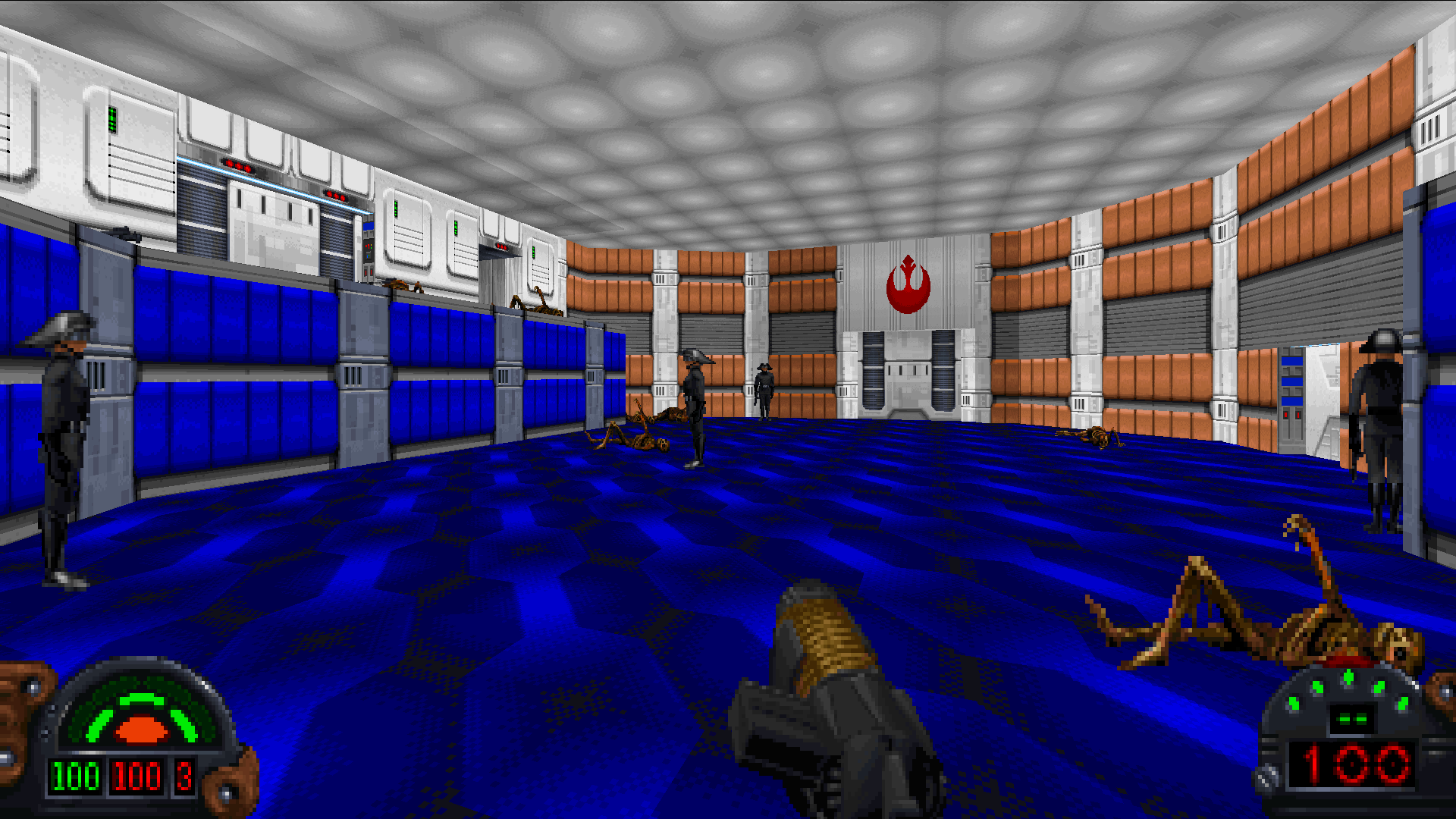

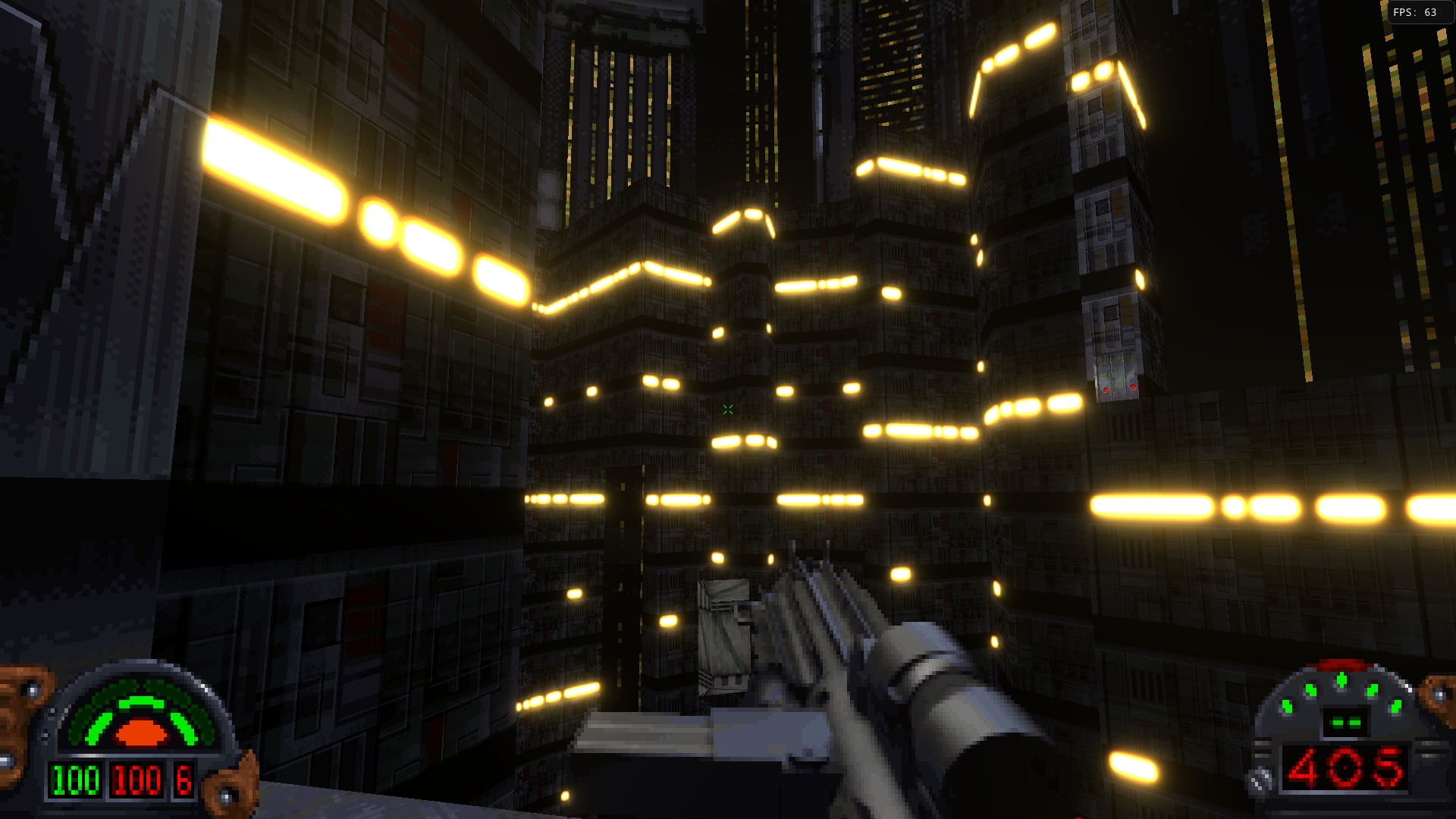

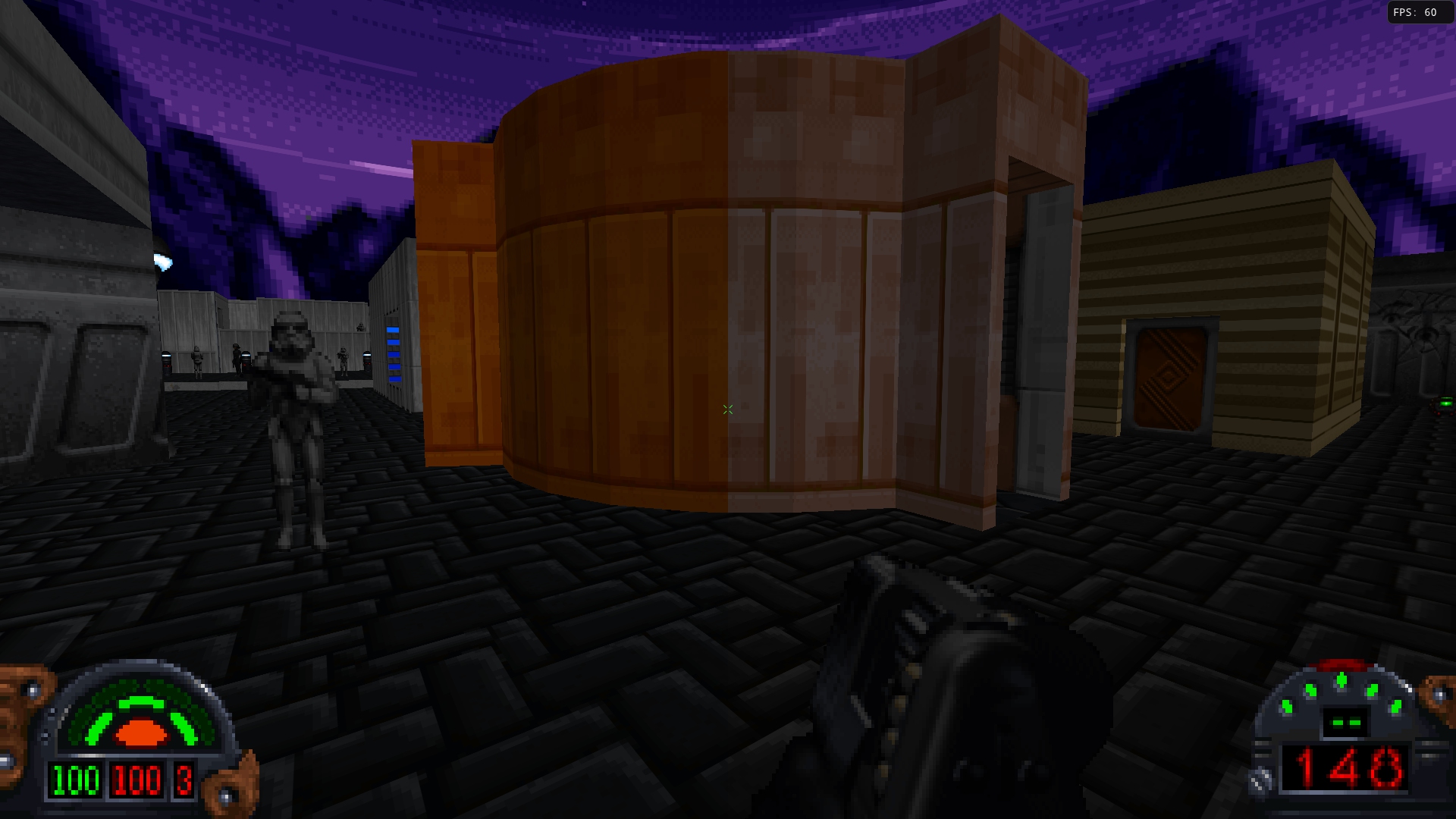

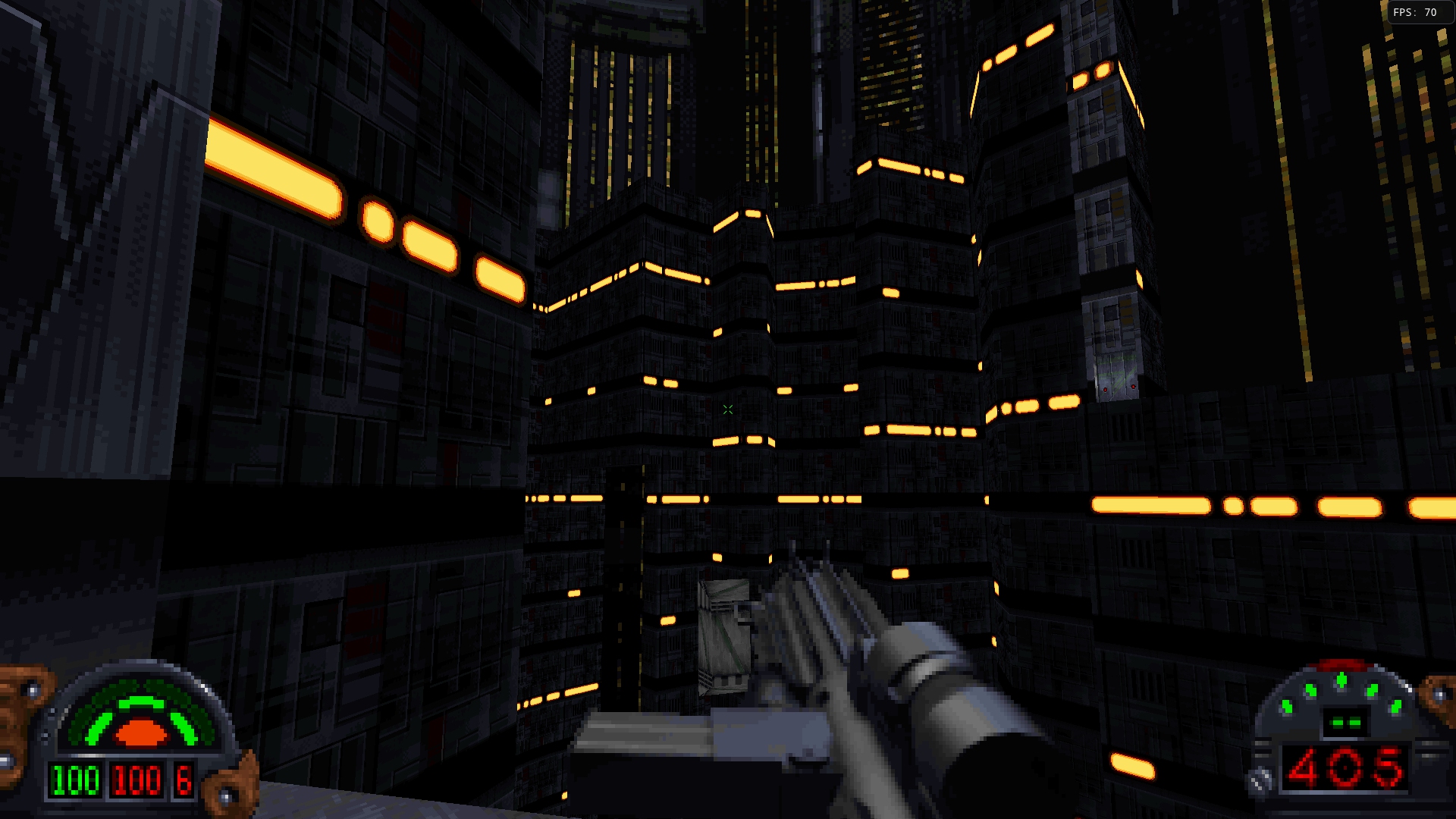

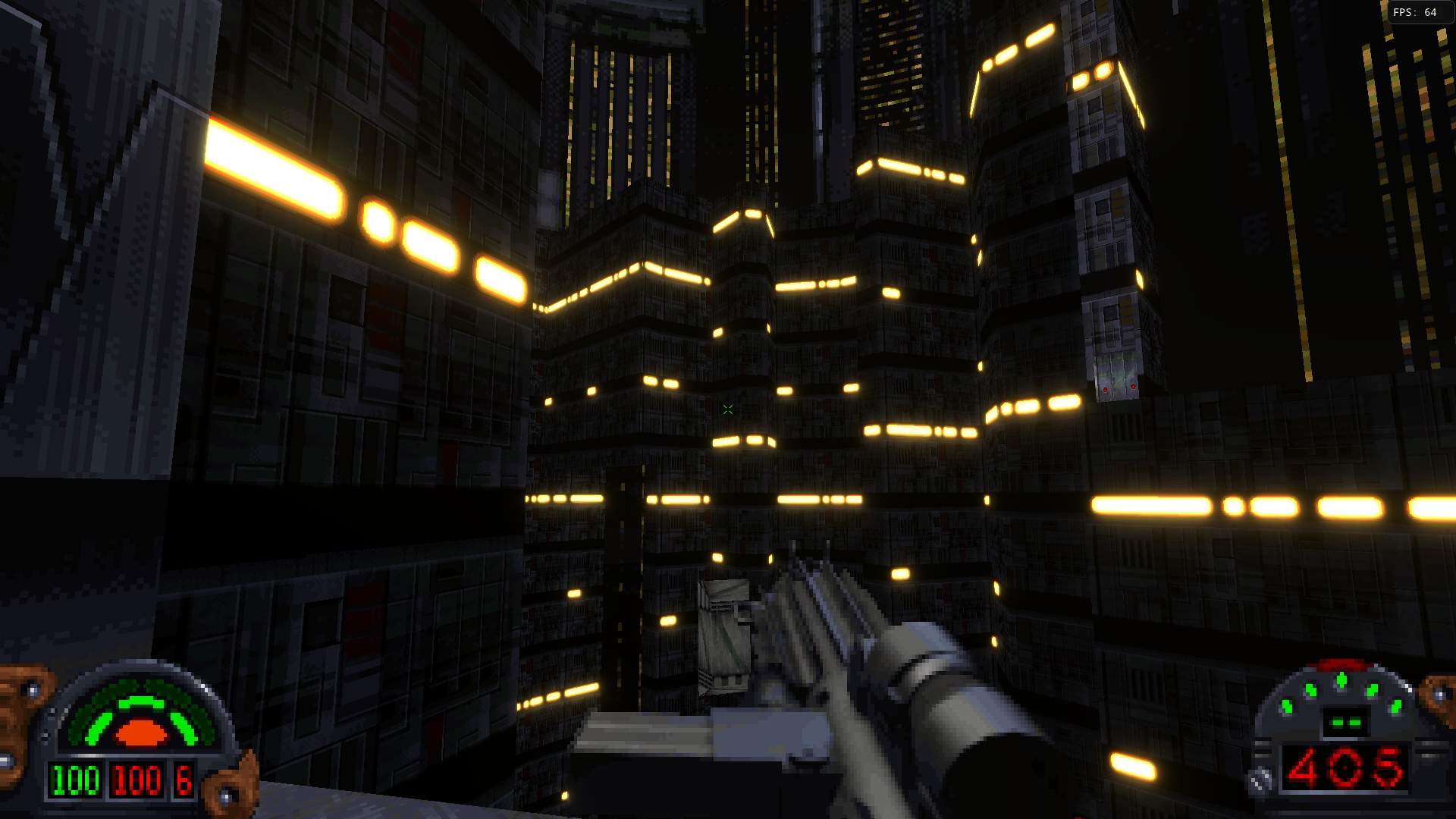

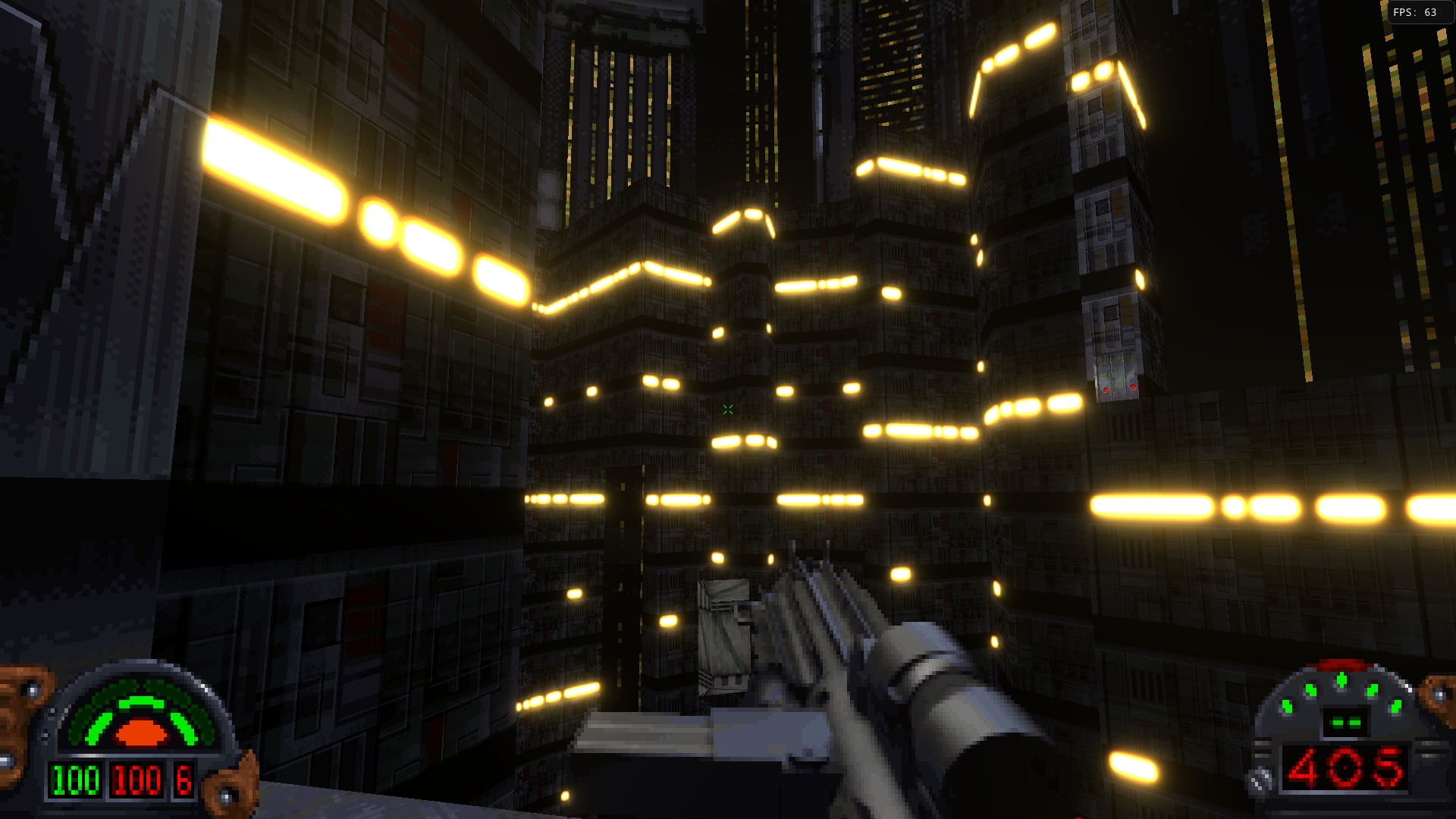

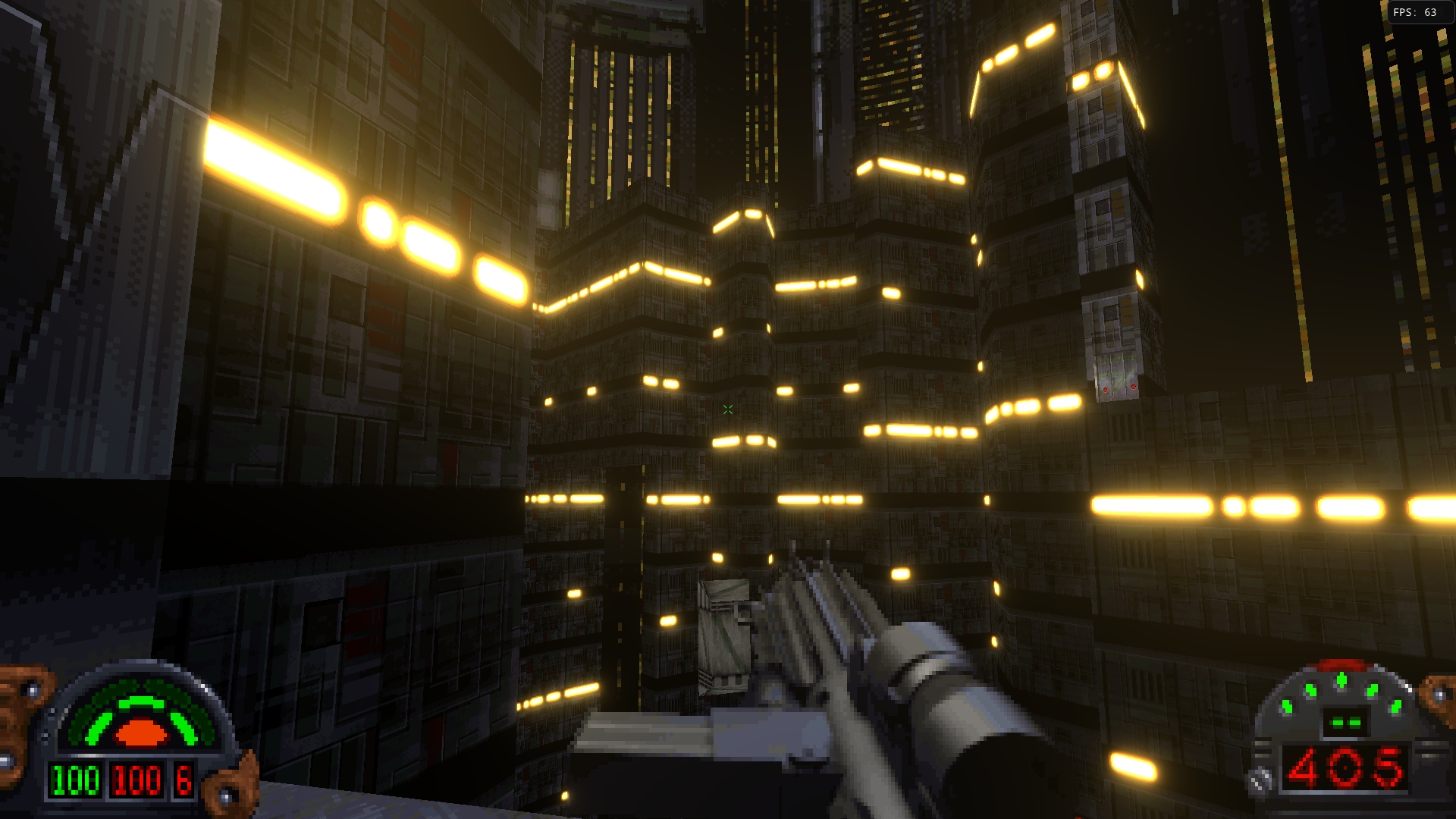

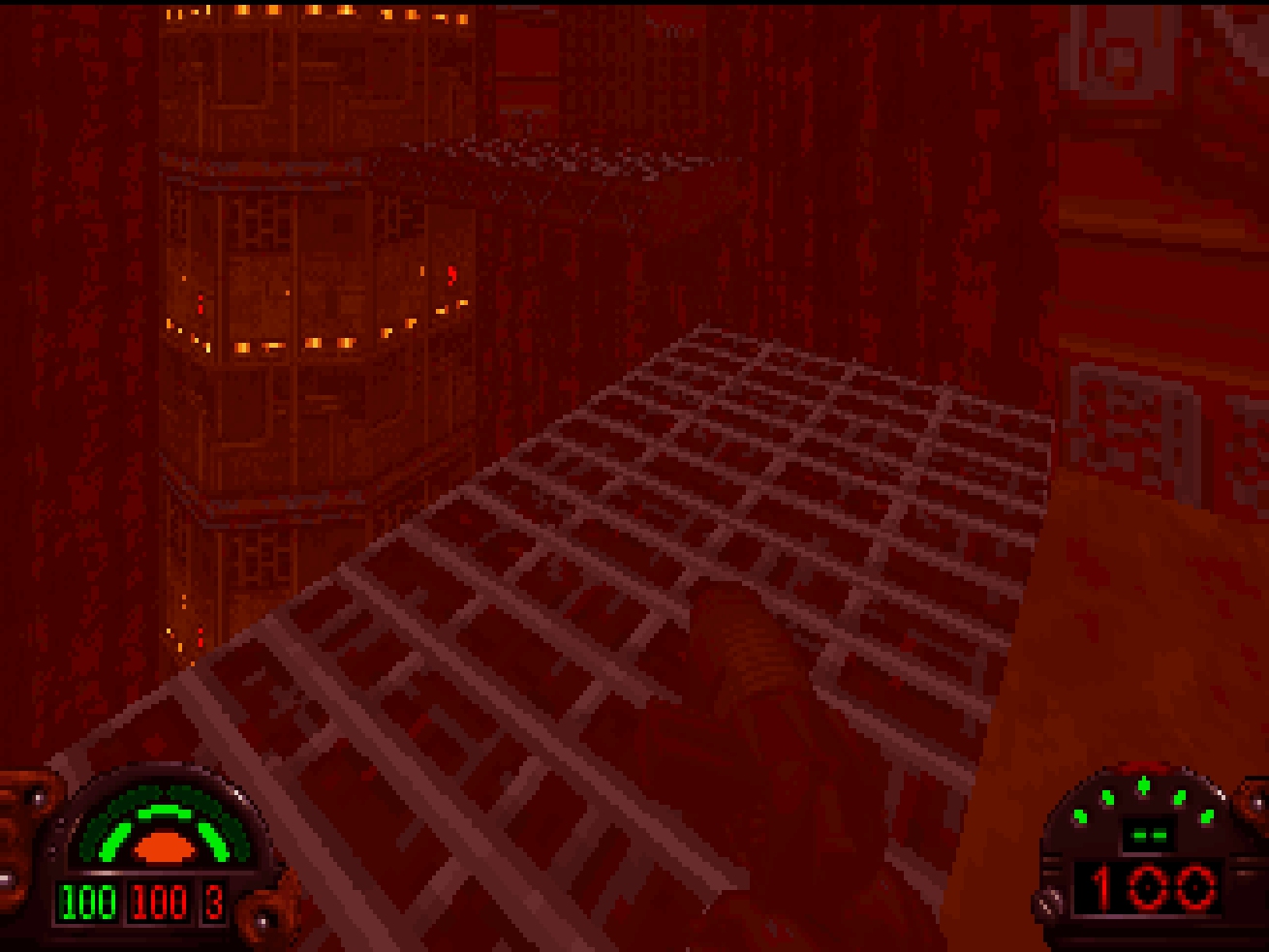

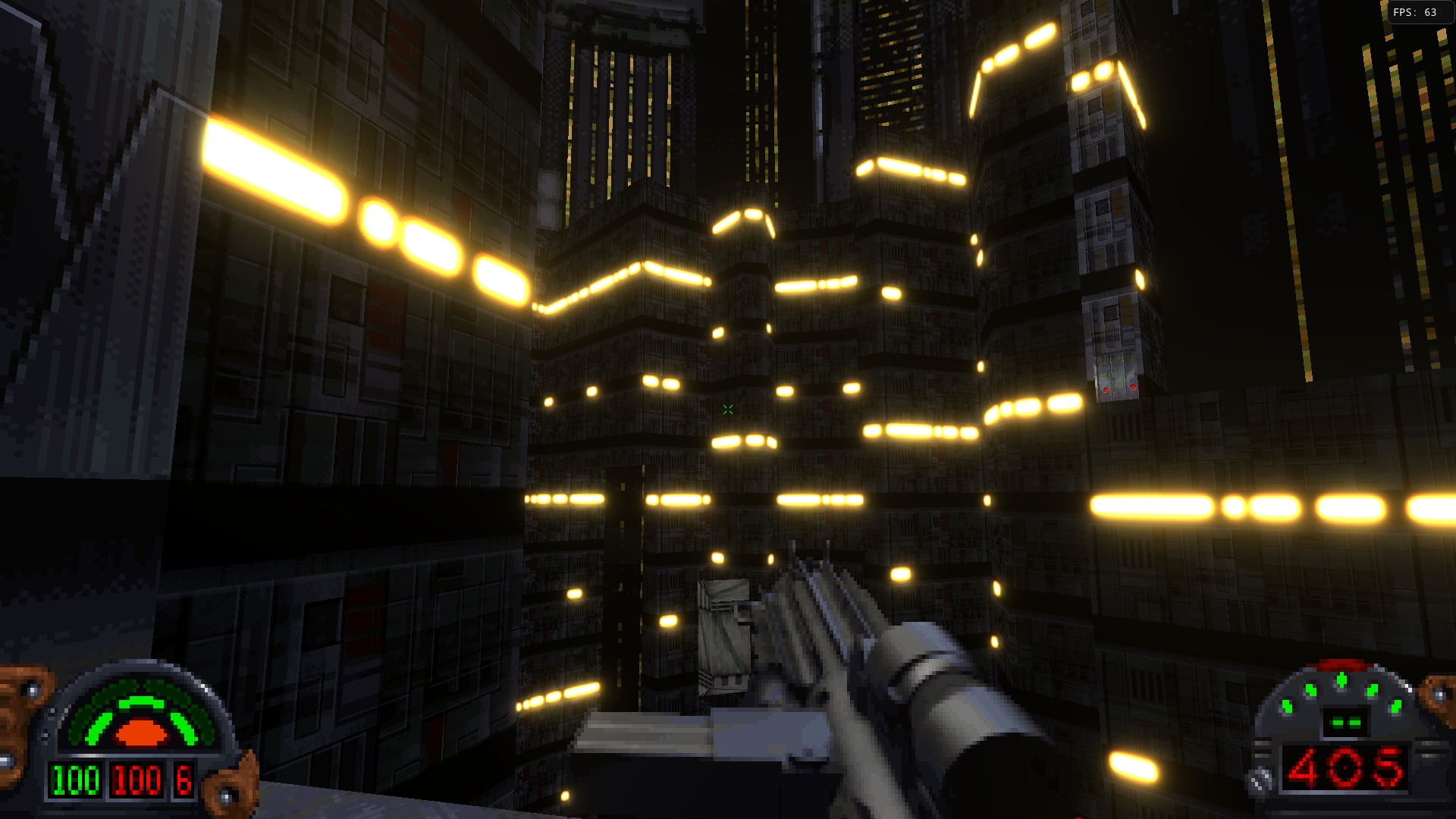

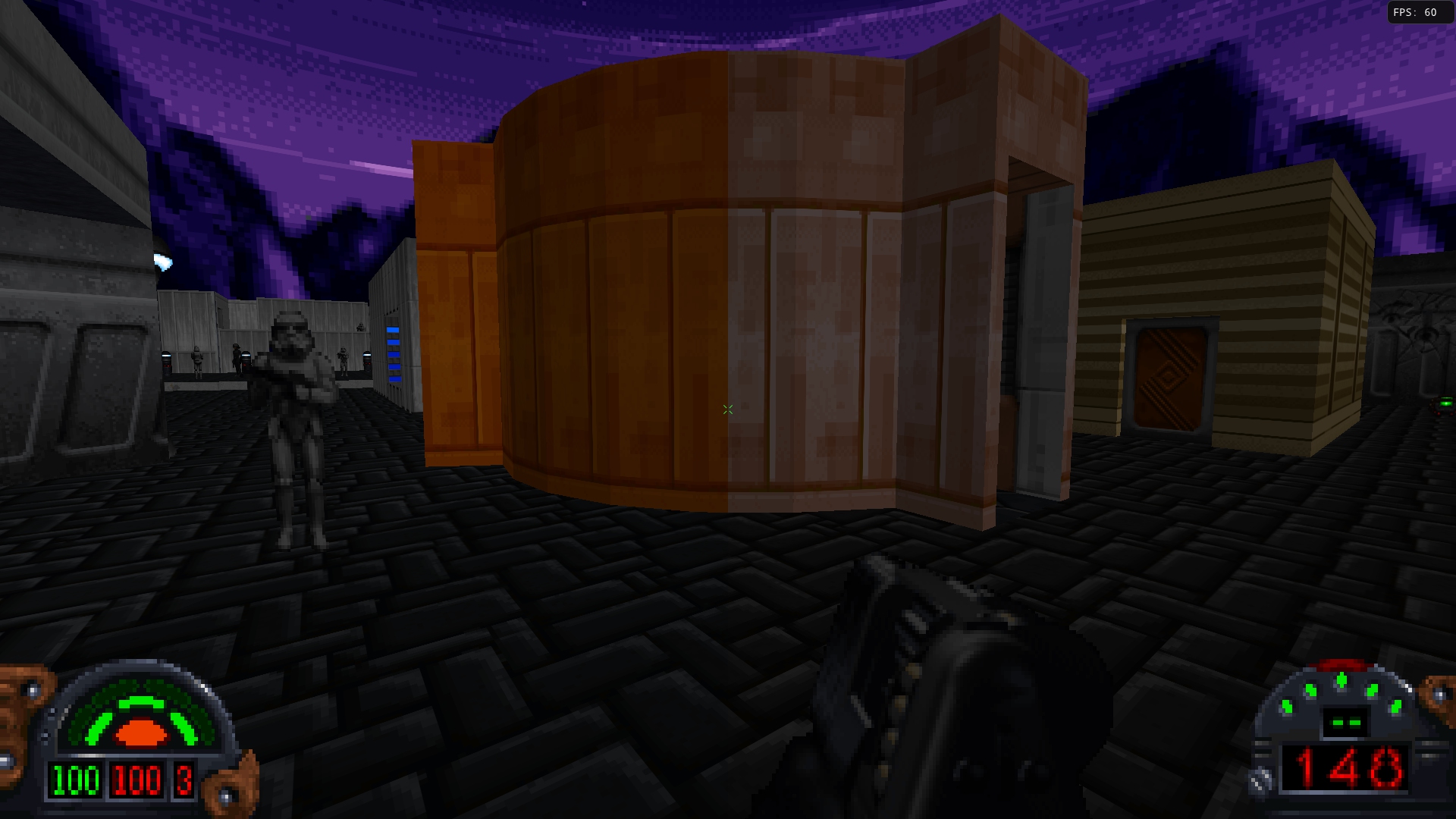

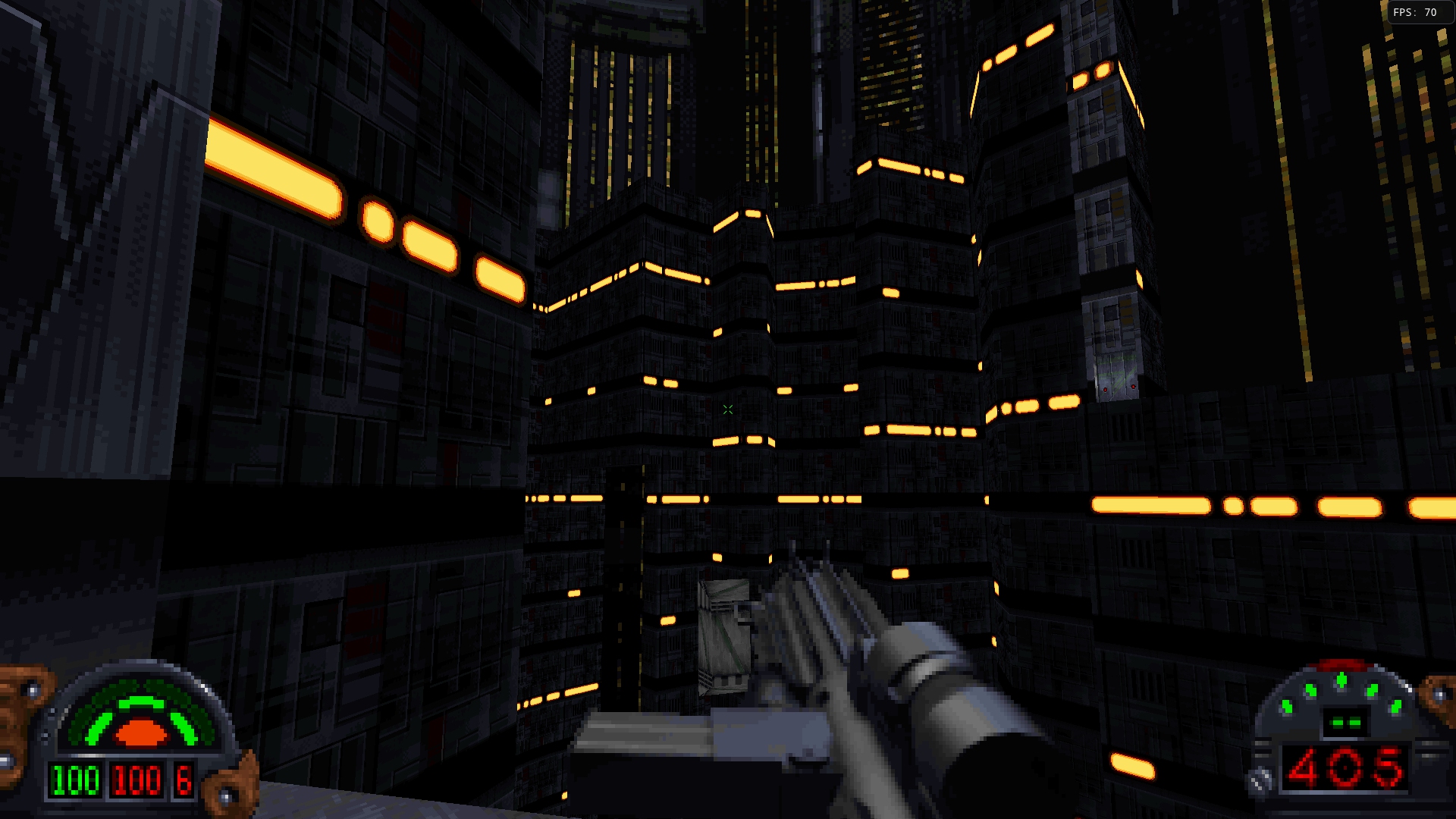

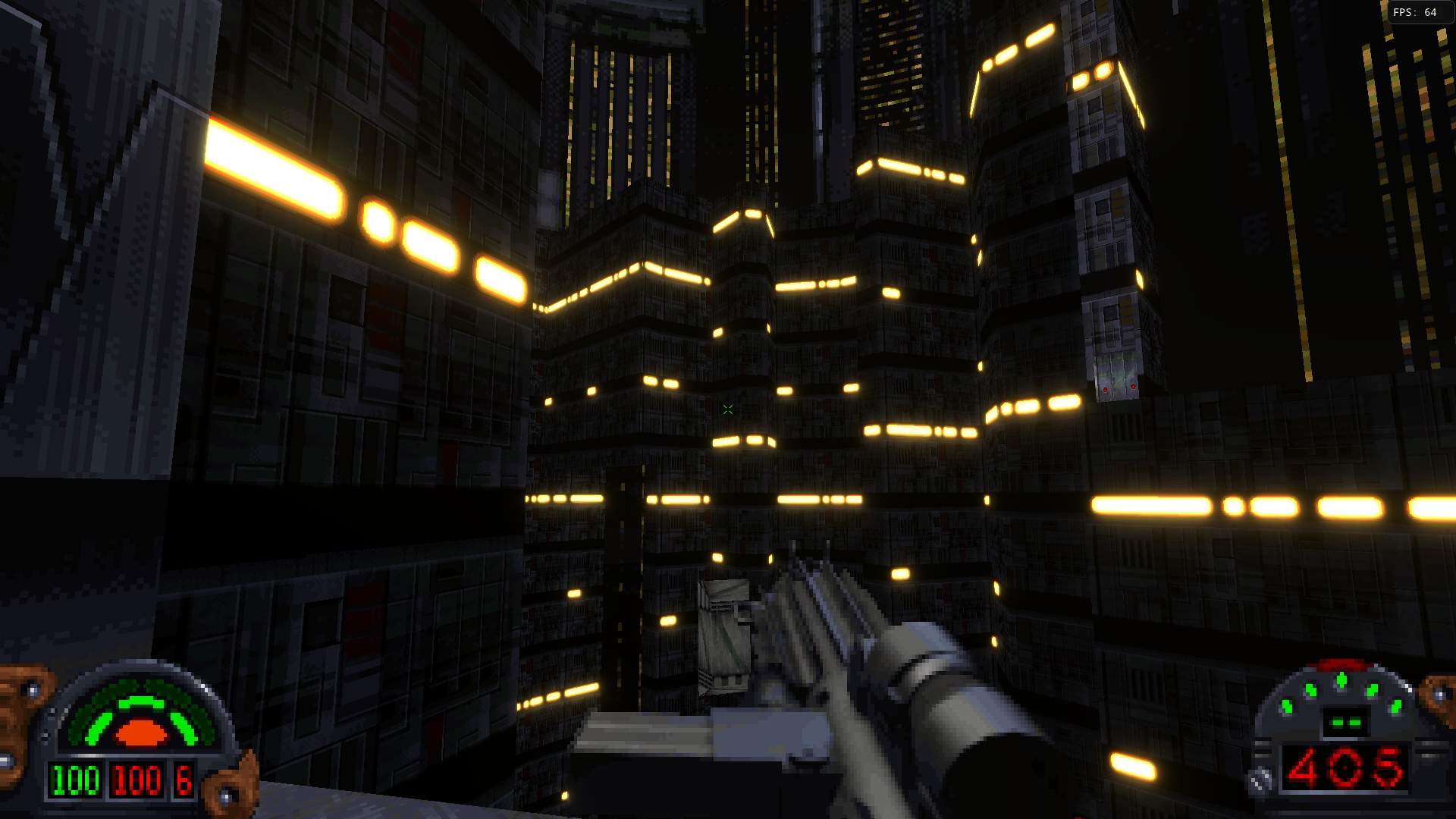

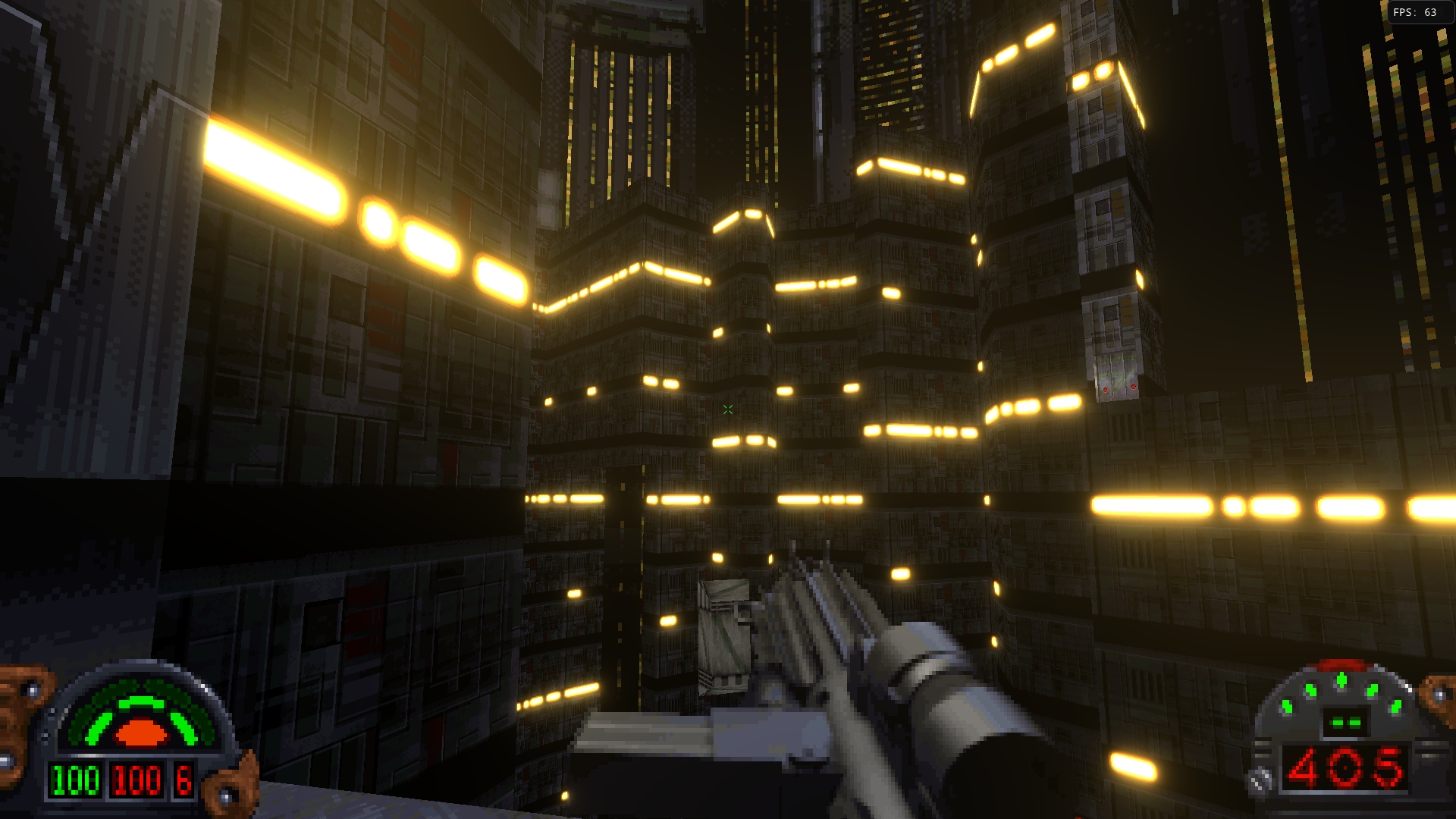

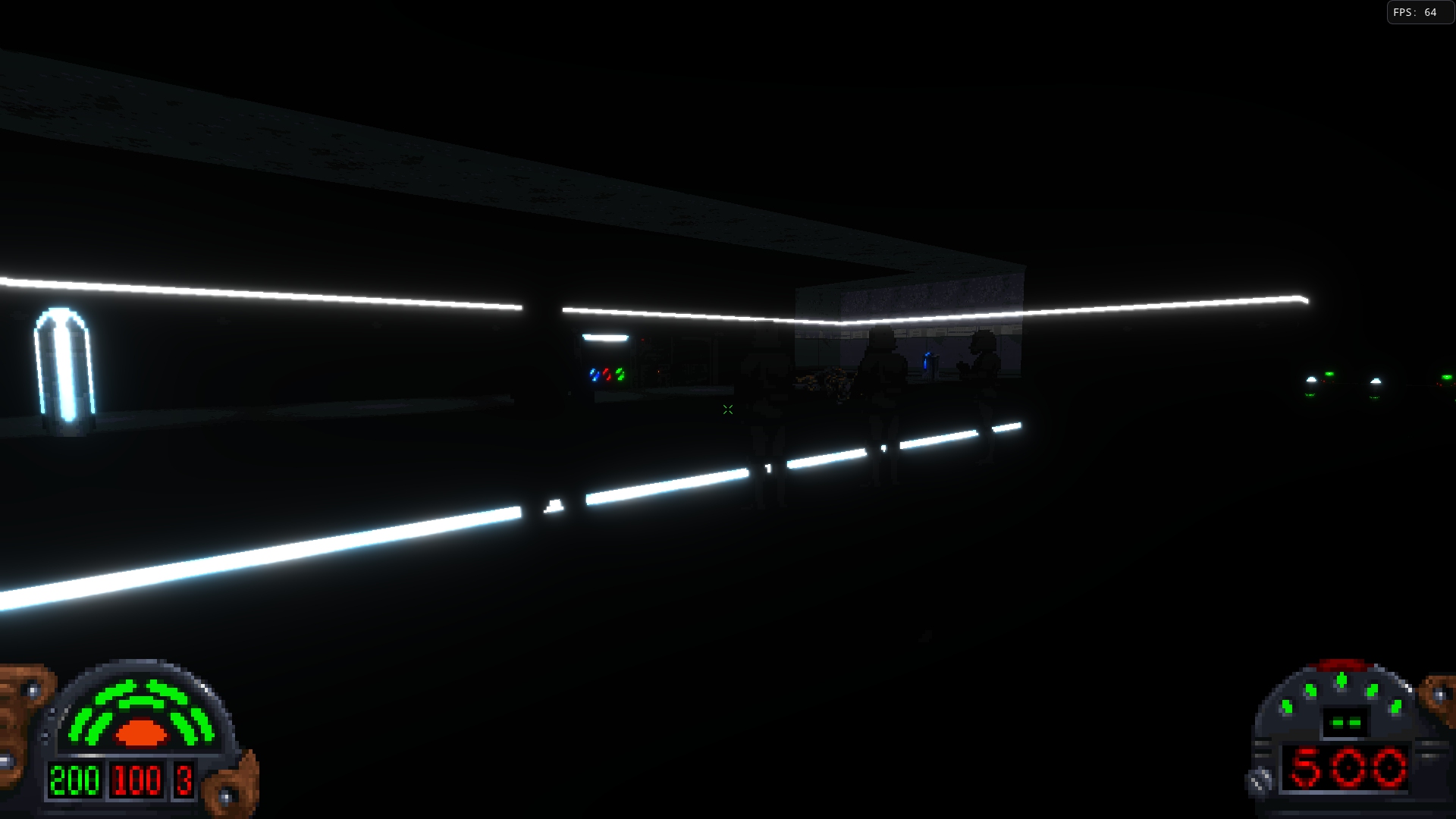

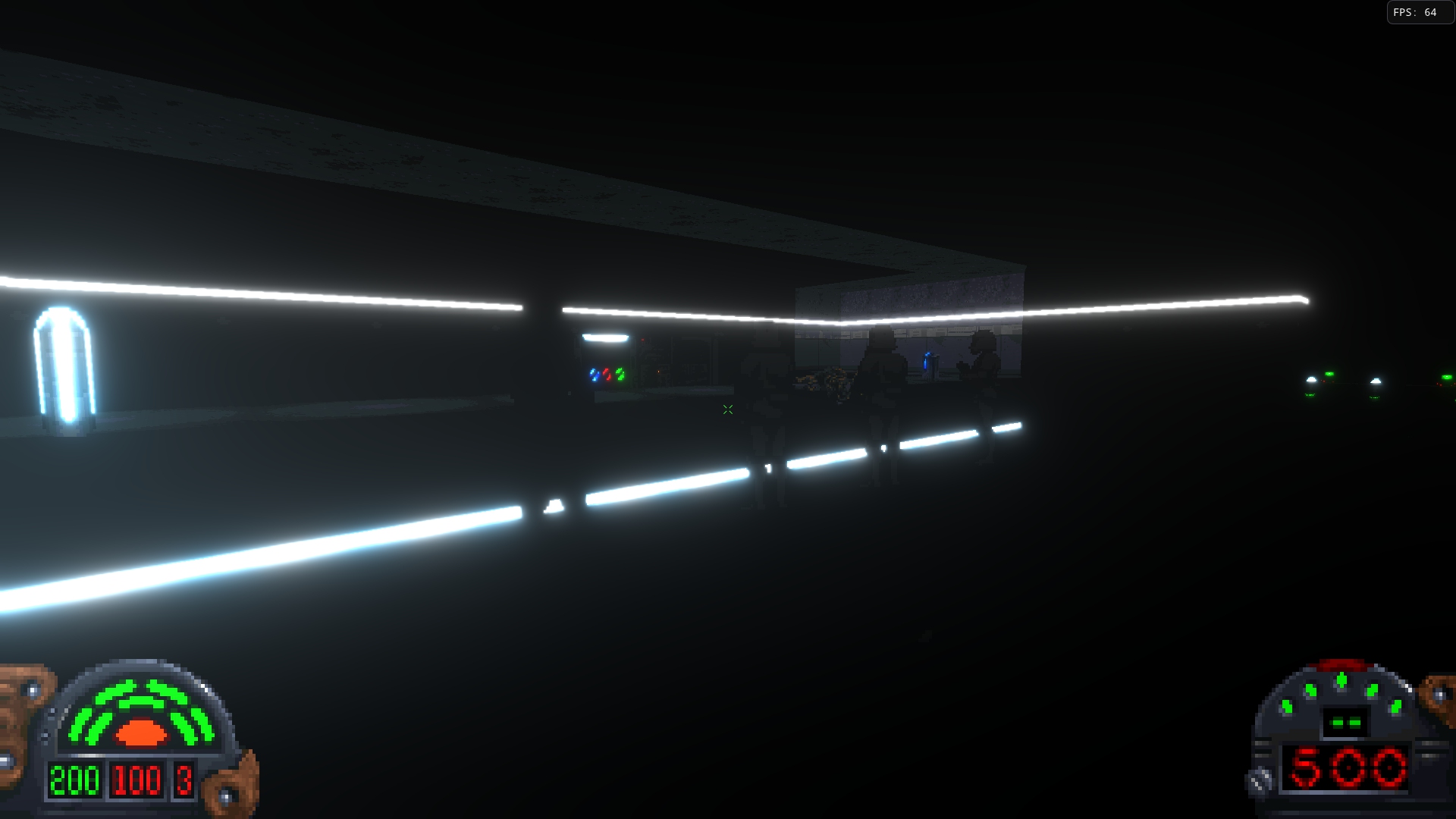

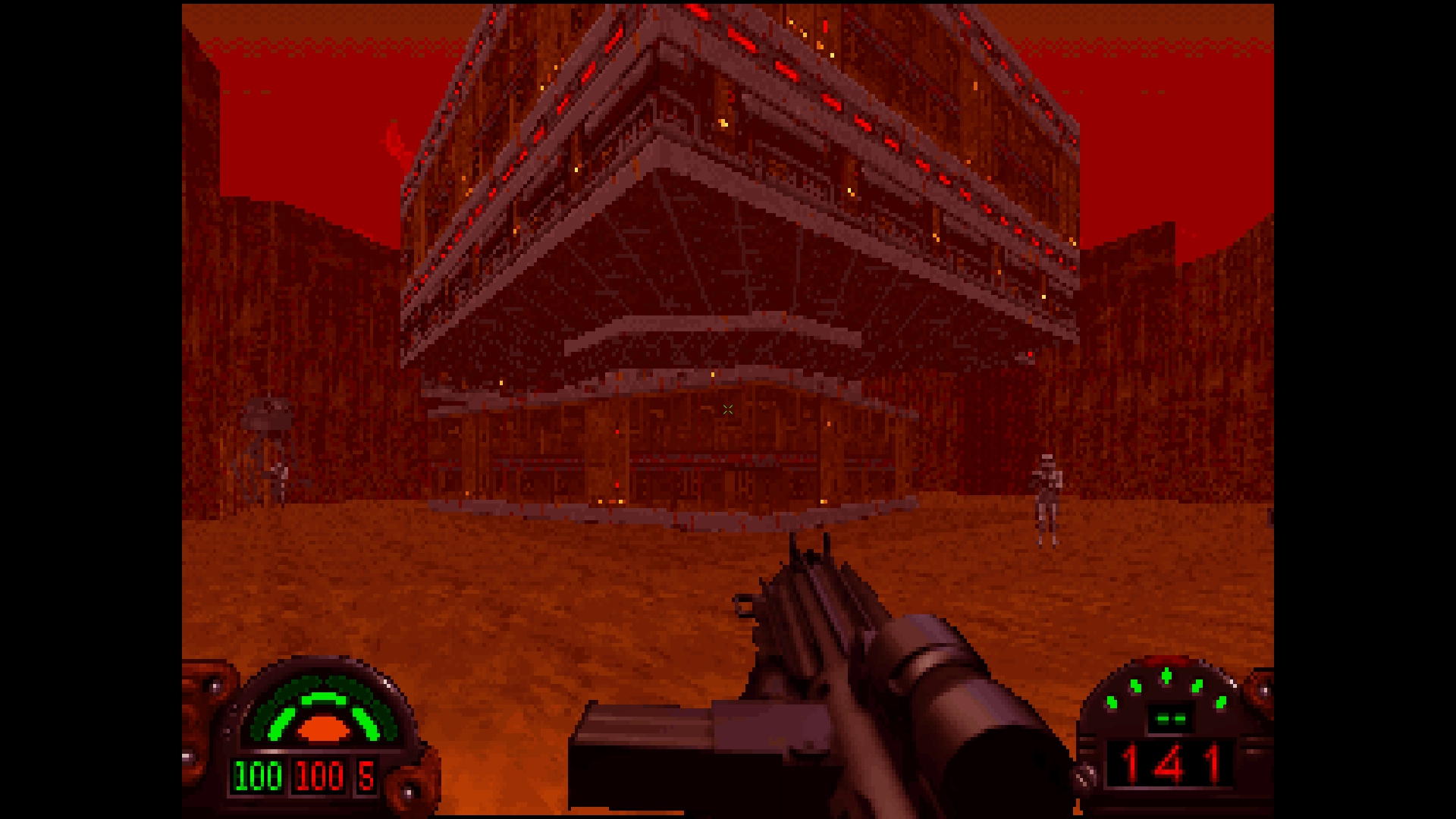

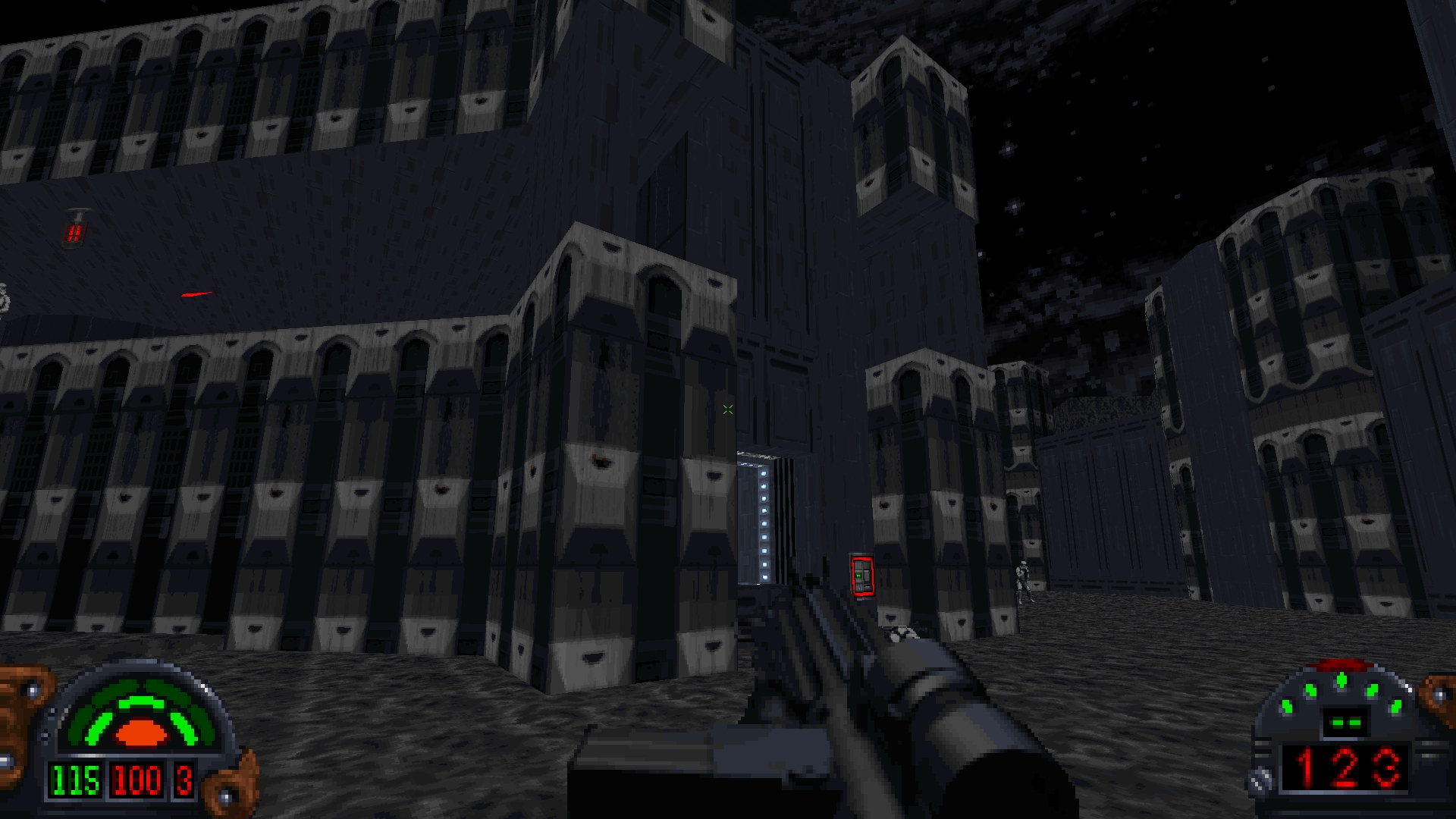

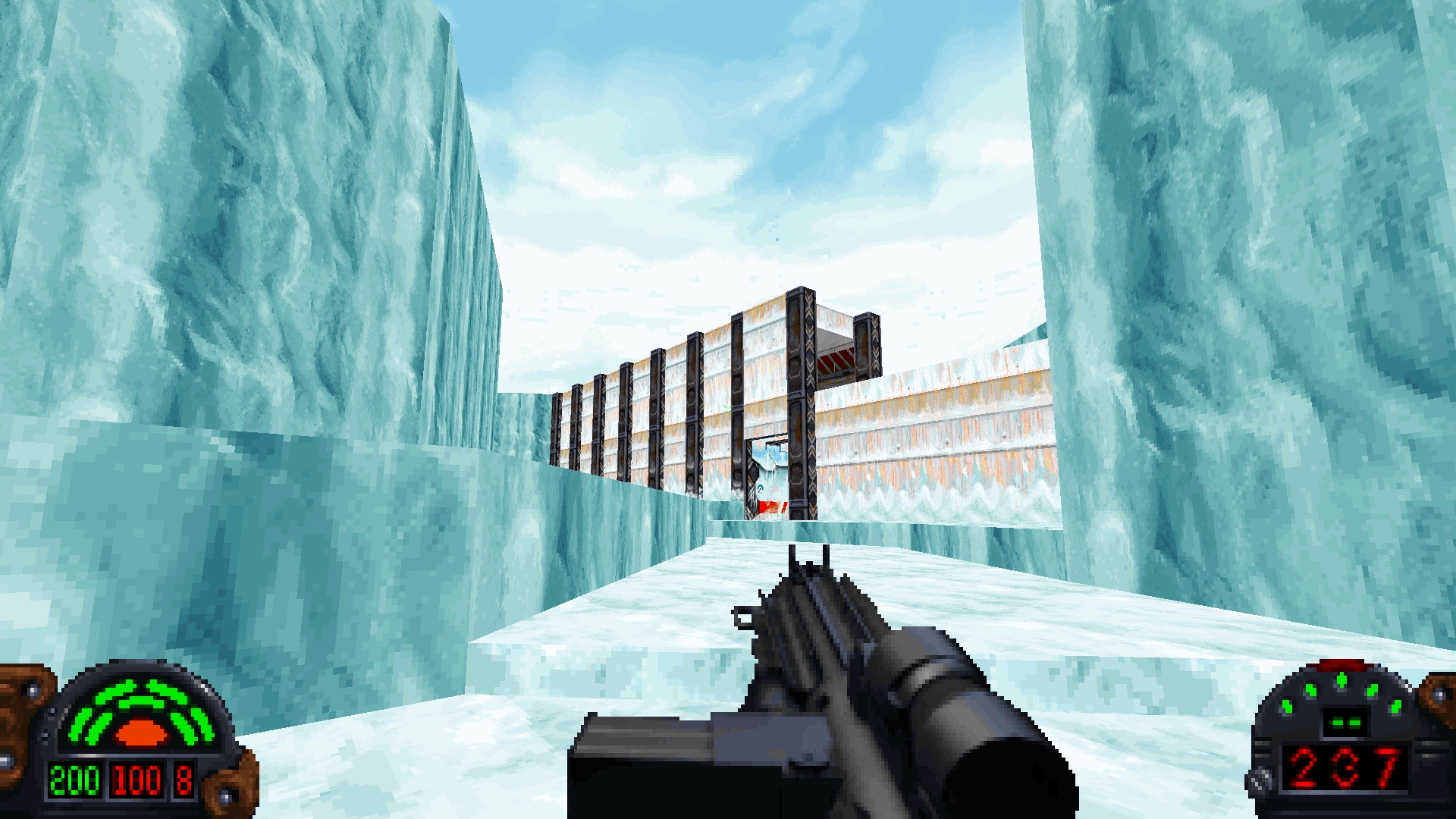

Bloom

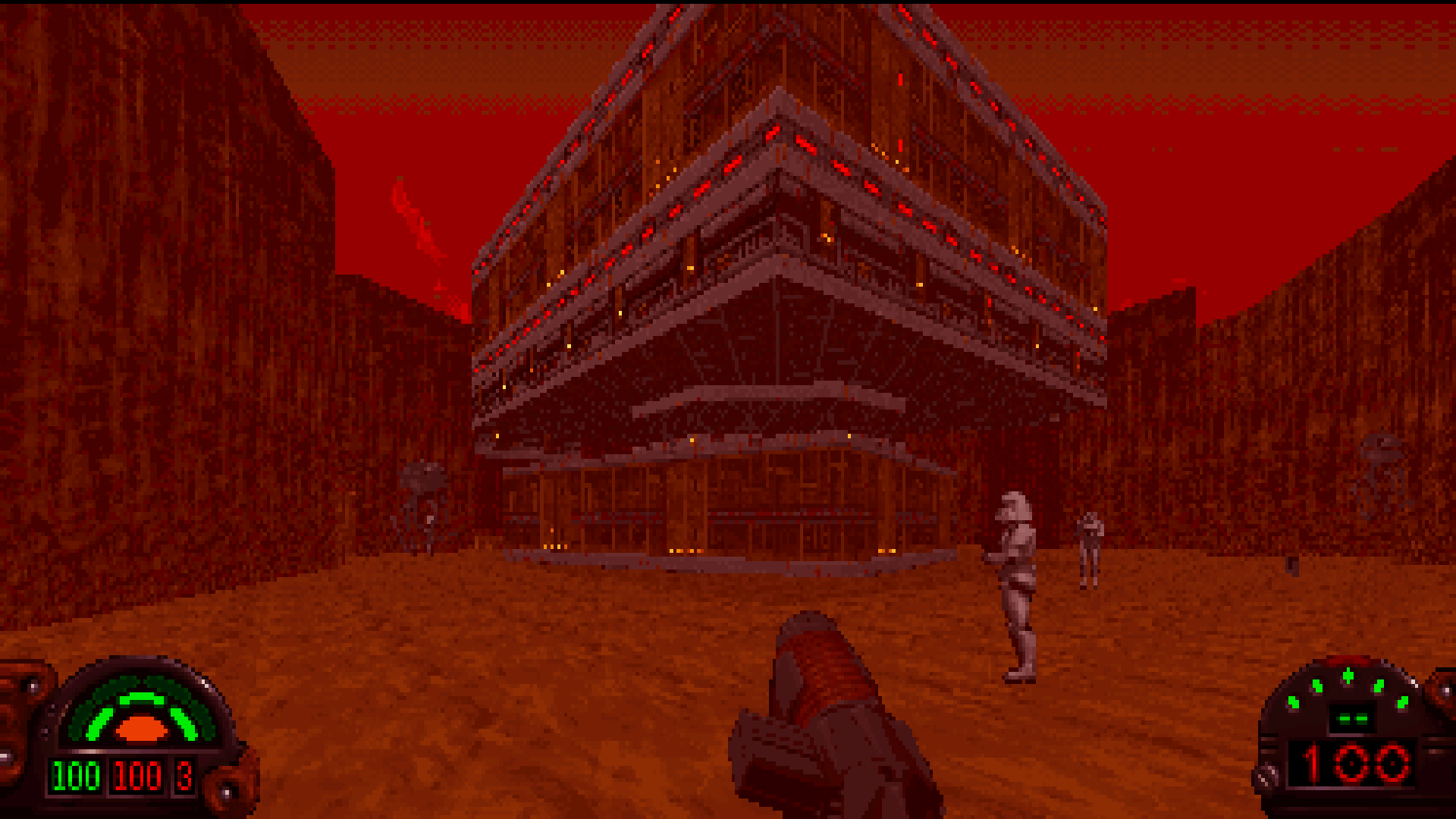

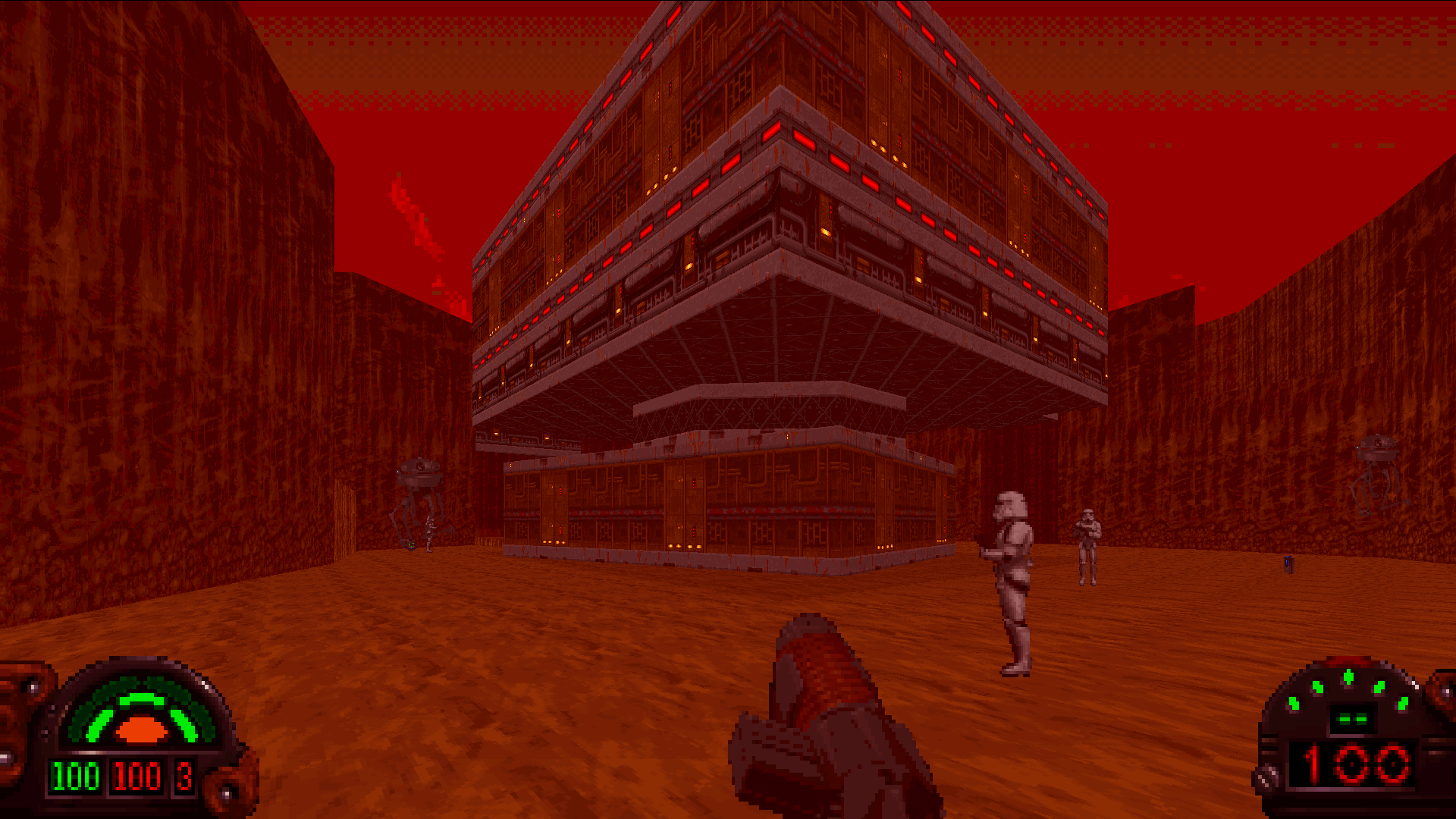

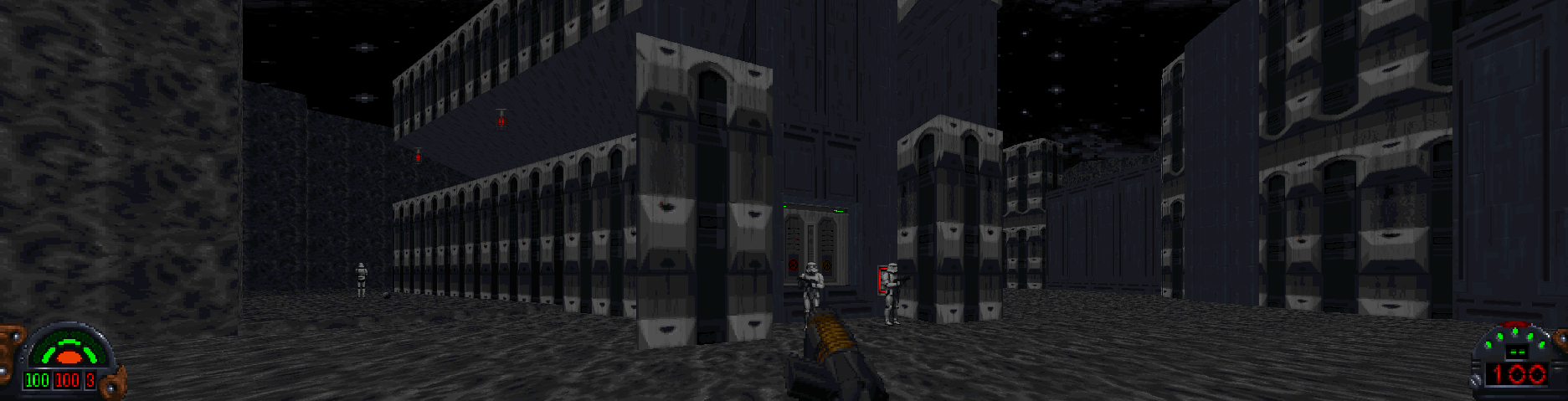

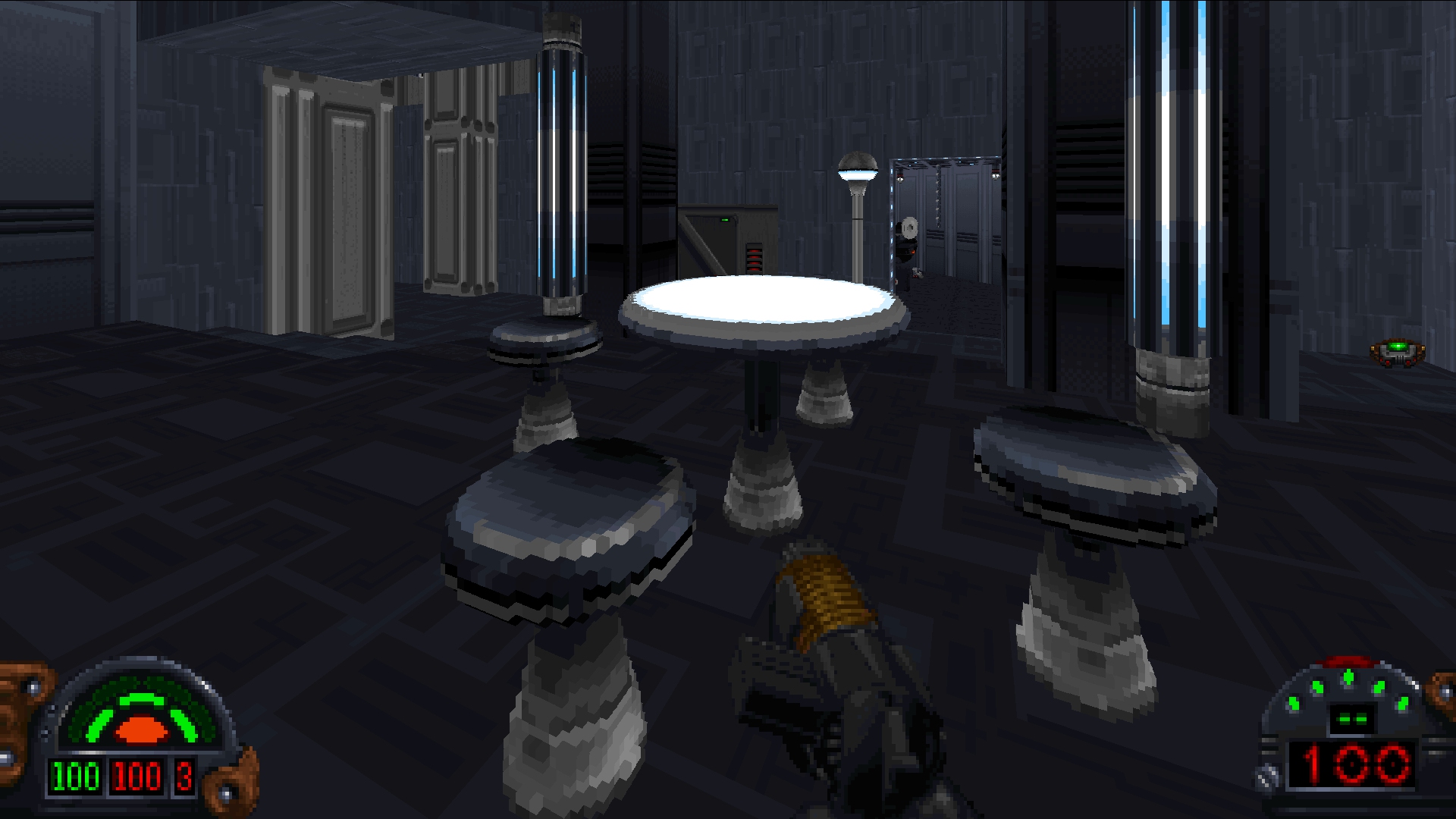

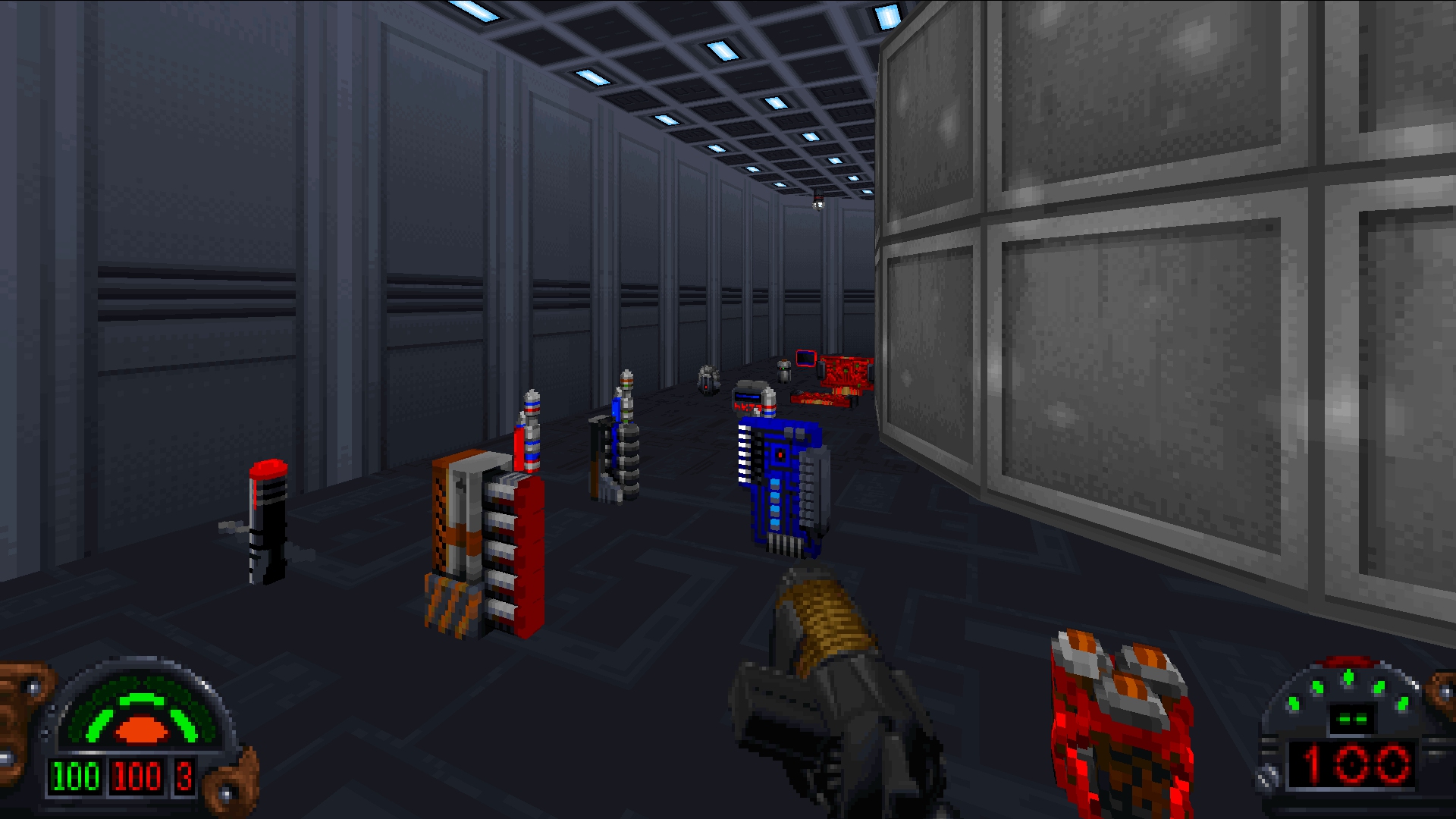

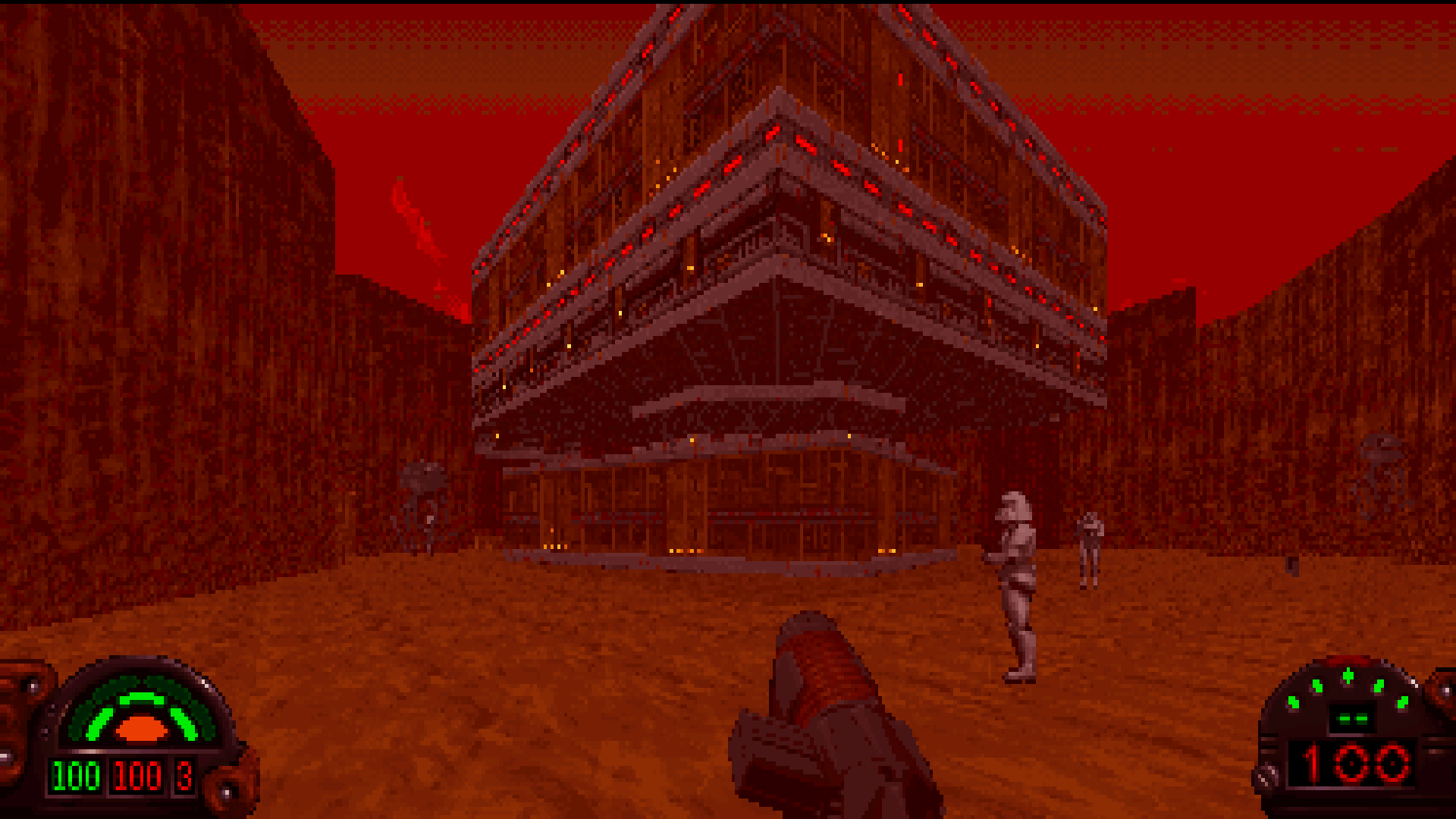

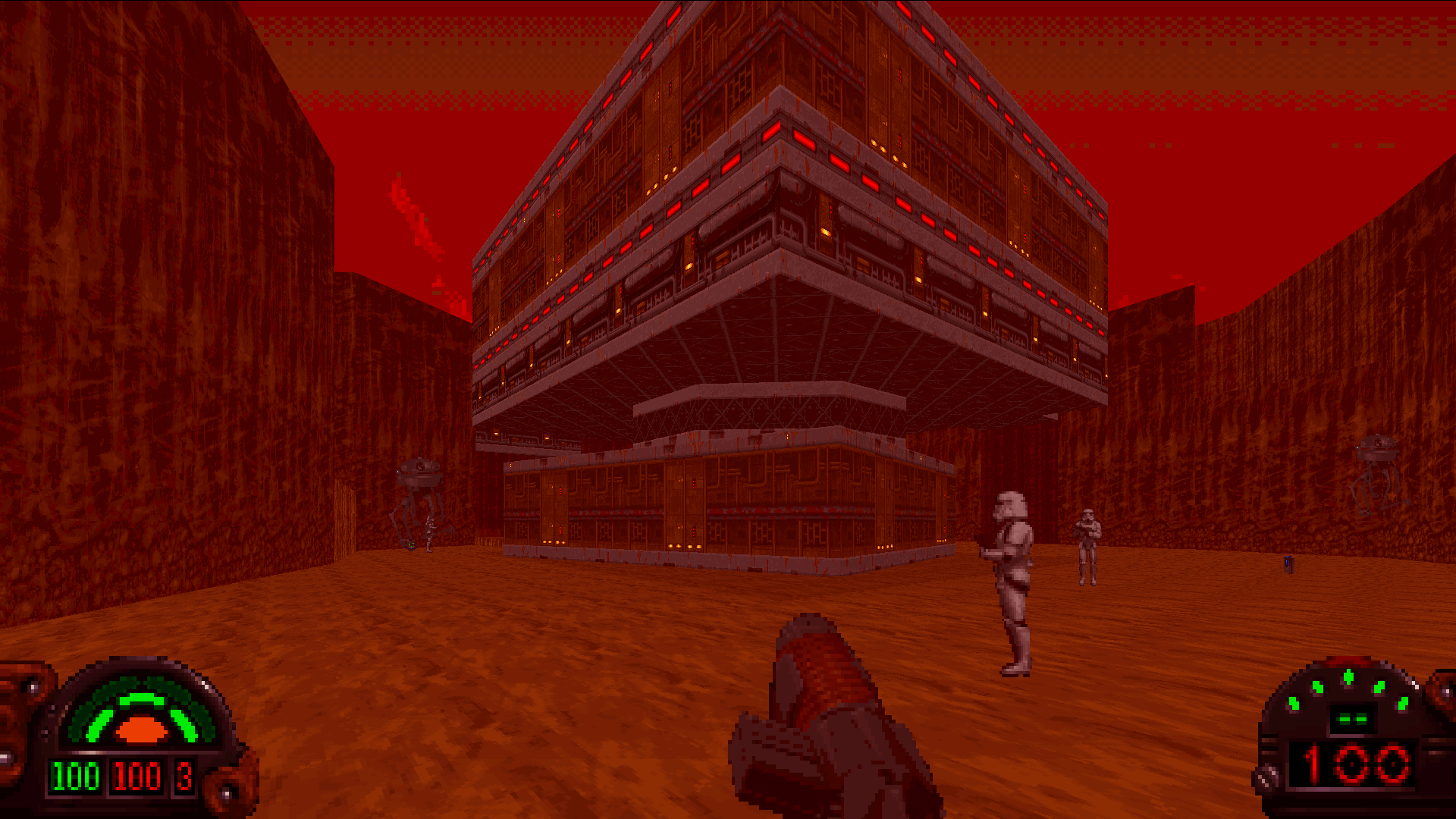

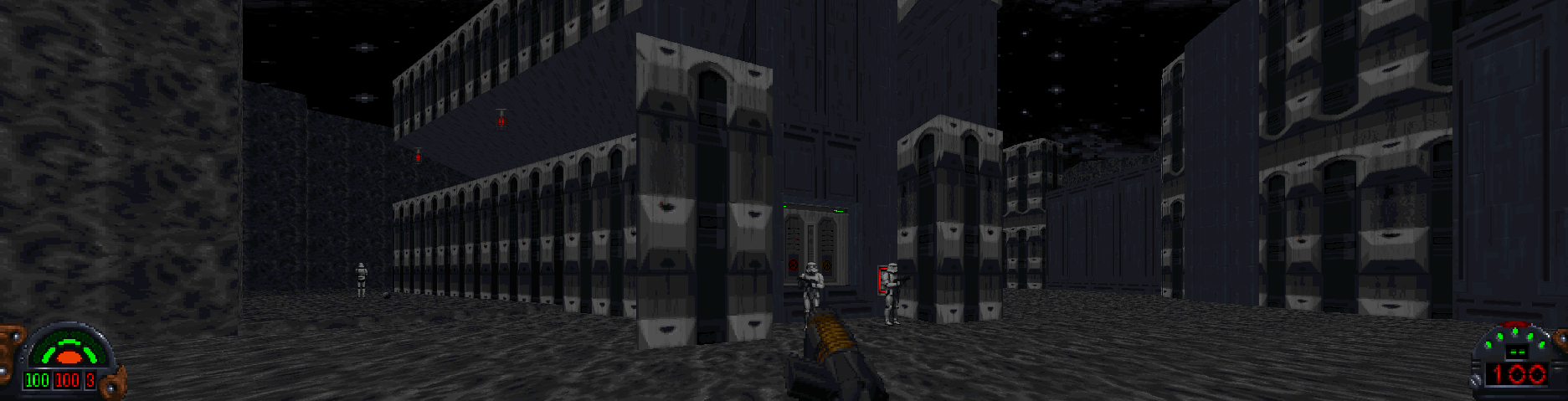

Star Wars environments are filled with glowing panels and lights, and so bloom tends to work really well to simulate this effect. For TFE, I wanted to implement a high quality, tunable effect, so I went with a more modern implementation than the one seen in projects like DarkXL.

Emissive data is automatically generated from the textures, frames, and sprites by assuming that fullbright palette entries map to emissive pixels. This isn’t perfect, but it generates very plausible results in the base game. The same mapping is used when deciding which solid colors are emissive when rendering 3DOs.
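One way to identify fullbright palette entries, assumed here rather than taken from TFE, is to check which entries the colormap maps to themselves at every light level. A minimal Python sketch; `find_fullbright_entries` and `emissive_mask` are hypothetical names. A black entry may also be flagged, which is harmless because the bloom input multiplies RGB by the emissive value.

```python
def find_fullbright_entries(colormap):
    """Return palette indices that every light level maps to themselves,
    i.e. entries the colormap never darkens (assumed to mean fullbright)."""
    num_colors = len(colormap[0])
    return {i for i in range(num_colors) if all(row[i] == i for row in colormap)}

def emissive_mask(indices, fullbright):
    """Per-pixel emissive value for an 8-bit image: 1.0 if fullbright, else 0.0."""
    return [1.0 if i in fullbright else 0.0 for i in indices]
```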

The core technique supports very large blurs, and works as follows:

First, a half-size image is generated from the full-size color and emissive data: result = post-conversion RGB * Emissive. A 13-tap downscaling filter that uses bilinear filtering to emulate a full 36-tap filter is applied, in order to reduce flickering and aliasing.

Then that image is downsampled multiple times using the same filter (though the emissive values no longer need to be used), similar to generating a mipmap chain. Each downsampled texture is lower resolution than the previous one, so blurs applied at later levels cover a larger area once upsampled.

Finally, the downsampled images are recombined and merged together. At each step, a lower resolution merged result is blurred and added to the next higher resolution. The way the frequencies are mixed together changes the spread of the blur.

Threshold/Downsample -> Downsample chain (smallest 8x8) -> Merge from bottom up (8x8 + 16x16 -> 16x16, 16x16 + 32x32 -> 32x32, ...) -> Final bloom with all frequencies composited
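The pipeline above can be sketched on a small grayscale image in Python. This is a simplified illustration, not TFE's GPU implementation: a 2x2 box filter stands in for the 13-tap downsampling filter, nearest-neighbor upsampling stands in for the blurred upsample, image dimensions are assumed to be powers of two, and `spread` is modeled as a linear blend weight toward the lower-frequency levels (an assumption about how the frequencies are mixed).

```python
def downsample(img):
    """2x2 box filter; a simple stand-in for the 13-tap bilinear downsample."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2*y][2*x] + img[2*y][2*x+1] + img[2*y+1][2*x] + img[2*y+1][2*x+1]) / 4.0
             for x in range(w)] for y in range(h)]

def upsample(img):
    """Nearest-neighbor 2x upsample (a real implementation would blur here)."""
    return [[img[y // 2][x // 2] for x in range(2 * len(img[0]))]
            for y in range(2 * len(img))]

def bloom(color, emissive, strength=1.0, spread=0.5, smallest=8):
    # 1. Threshold/downsample: only emissive pixels contribute (RGB * Emissive).
    src = [[c * e for c, e in zip(crow, erow)] for crow, erow in zip(color, emissive)]
    chain = [downsample(src)]
    # 2. Downsample chain, stopping at the smallest level (8x8 by default).
    while len(chain[-1]) > smallest and len(chain[-1][0]) > smallest:
        chain.append(downsample(chain[-1]))
    # 3. Merge bottom up: blend each level with the upsampled lower-frequency result.
    merged = chain[-1]
    for level in reversed(chain[:-1]):
        up = upsample(merged)
        merged = [[a * (1.0 - spread) + b * spread for a, b in zip(ra, rb)]
                  for ra, rb in zip(level, up)]
    # Strength scales the final bloom before it is composited over the scene.
    return [[v * strength for v in row] for row in merged]
```

A higher `spread` pulls more weight from the coarse levels, which matches the "more atmospheric" look described under Controls.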

Controls

- Strength - Intensity of the bloom effect.

- Spread - How far the effect spreads, lower spread results in more focused bloom, higher results in more atmospheric/foggy looking bloom.

Emissive Overrides

In a future release, it will be possible to override the generated emissive values for each texture. This is useful for adding emissive pixels where they are missing, to handle True Color assets later for mods, and to remove emissive pixels that are generated by assets that accidentally used fullbright palette entries incorrectly.

Texture Exporting

To make creating overrides easier in the future, a new console command, exportTexture, has been added. To export a texture that you can see, simply look at it (point the crosshair at it, if that is enabled), and then type exportTexture in the console. TFE will write out the BM, a palette-converted version as a PNG, and finally a “material” PNG that contains the generated emissive values.

No Bloom / Default / High Strength / High Strength + High Spread

More screenshots at various settings

Smooth Vue Animations

Contributed by Kevin Foley.

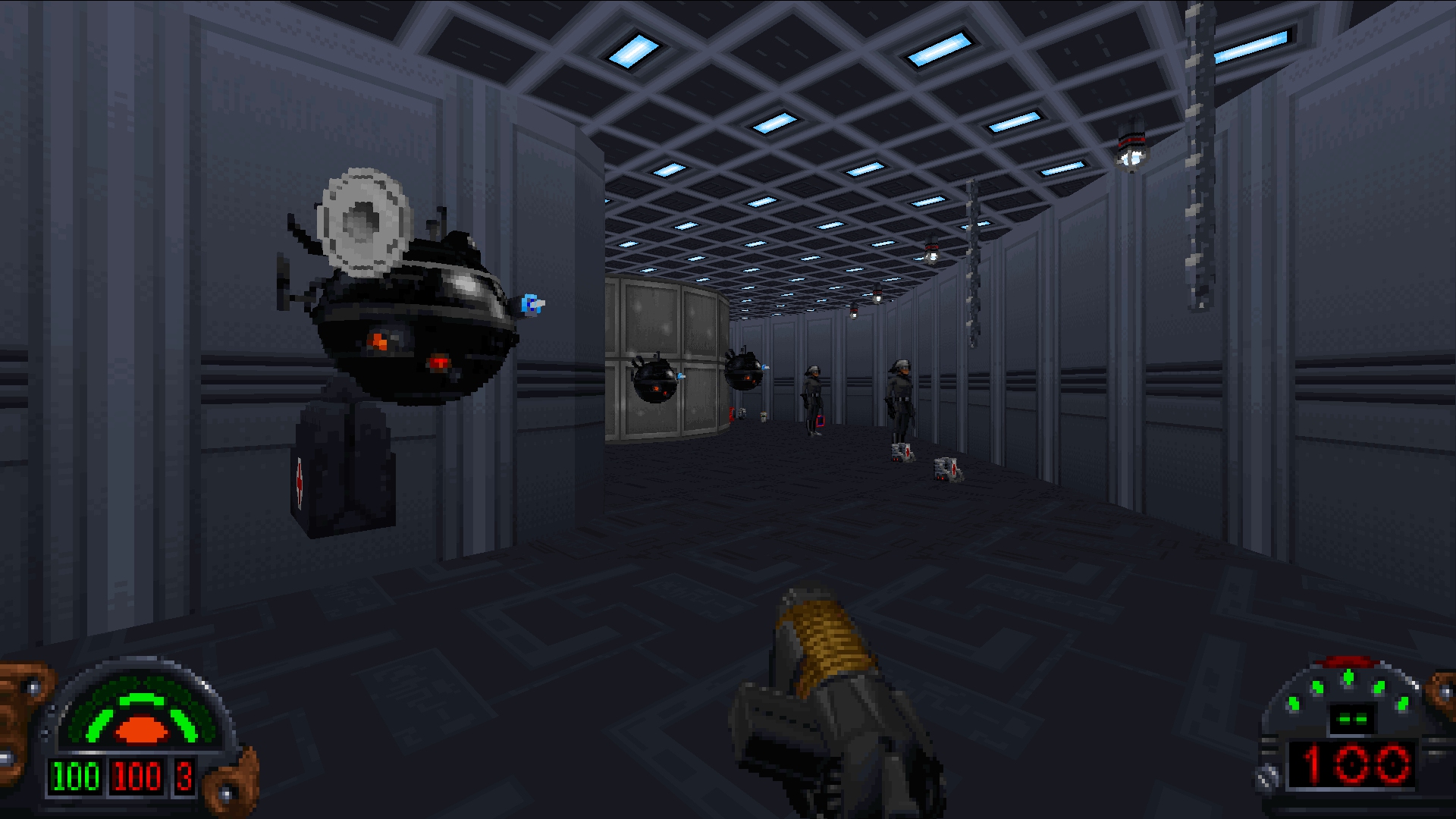

A previous project, DarkXL, had a feature that smoothed out Vue Animations. For a while now, I have been meaning to implement a similar feature for TFE, but contributor Kevin Foley recently contributed an implementation of the feature.

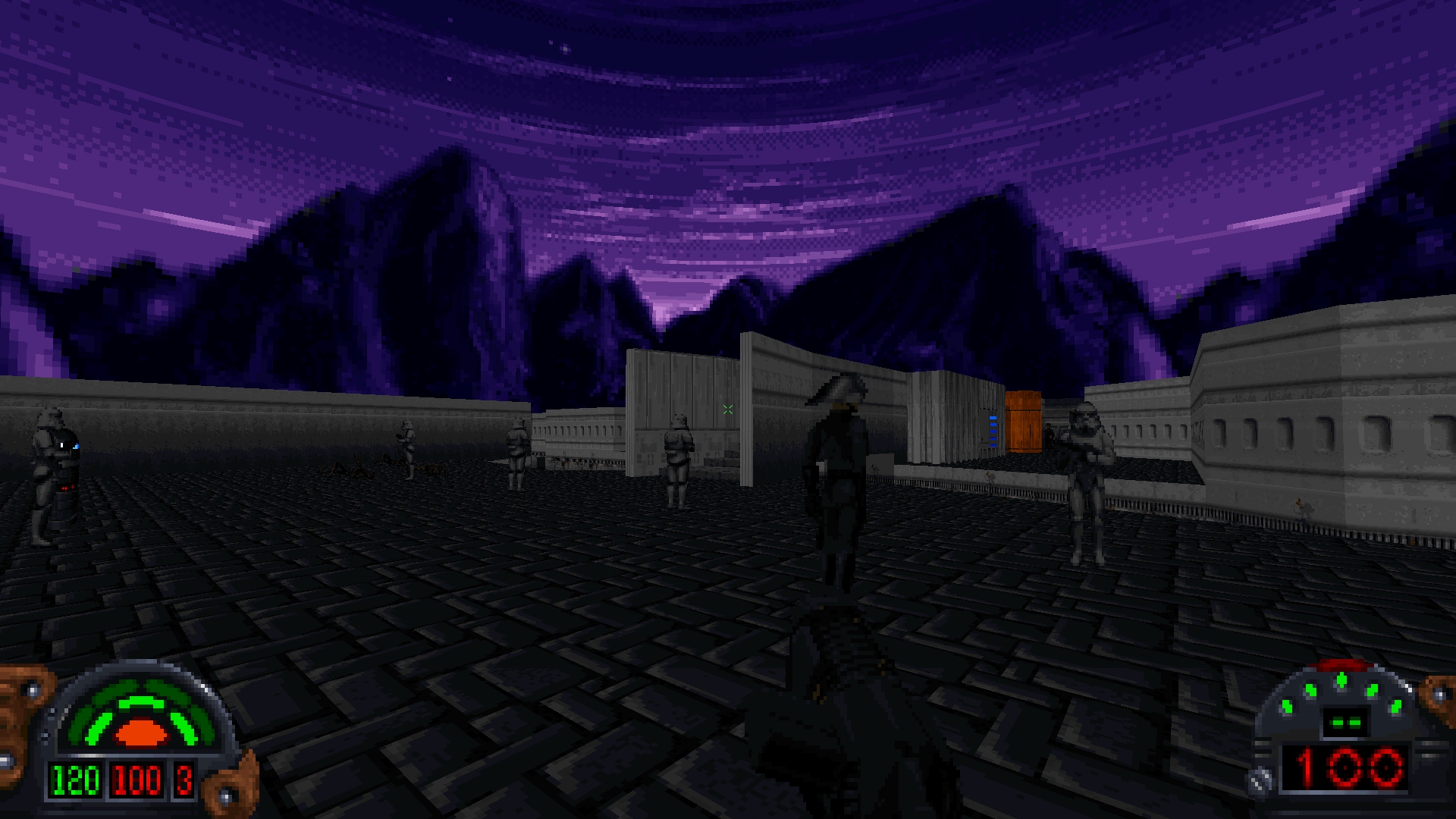

Smooth Vue Animation blends between frames when animating 3D objects or sprites using the Vue System. This produces a much nicer animation and does a far better job of selling the movement of the various ships and cargo containers that fly through the levels of Dark Forces.
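Blending between Vue frames can be sketched as interpolating each component separately: linear interpolation for translation and scale, and spherical linear interpolation (slerp) for rotation. The Python sketch below assumes each keyframe has already been decomposed into `(translation, rotation quaternion, scale)`; that decomposition, the function names, and the fixed `frame_rate` are assumptions, and this is not Kevin's actual implementation.

```python
import math

def lerp(a, b, t):
    """Component-wise linear interpolation between two tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:                 # take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:              # nearly identical: normalized lerp is stable
        q = lerp(q0, q1, t)
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta = math.acos(dot)
    s = math.sin(theta)
    w0 = math.sin((1.0 - t) * theta) / s
    w1 = math.sin(t * theta) / s
    return tuple(w0 * a + w1 * b for a, b in zip(q0, q1))

def interpolate_vue(frame0, frame1, t):
    """Blend two decomposed keyframes: (translation, rotation_quat, scale)."""
    (p0, r0, s0), (p1, r1, s1) = frame0, frame1
    return lerp(p0, p1, t), slerp(r0, r1, t), lerp(s0, s1, t)

def frame_blend(time_sec, frame_rate):
    """Return (frame index, blend fraction) for the current time."""
    f = time_sec * frame_rate
    i = int(math.floor(f))
    return i, f - i
```

Each rendered frame would call `frame_blend`, then `interpolate_vue` on keyframes `i` and `i + 1` with the returned fraction, instead of snapping to keyframe `i`.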

Closed Captions

Contributed by Kevin Foley.

Since before TFE version 1.0 was even released, there have been requests for subtitles for cutscenes. And so Kevin Foley has been hard at work implementing a Closed Caption system for TFE that covers both cutscenes and gameplay. As voice lines are spoken or certain sound effects play, the appropriate subtitles are displayed. Kevin implemented a number of options to customize the system, such as changing the font size and color, and disabling captions for different categories of sounds.

There is a lot of upcoming work, such as supporting UTF8 and handling translations, but most of this will not be tackled until Outlaws support is further along, so that we have a better view of its implementation and how the systems interact.

Below, Kevin will talk about what to expect in this release in his own words:

Hey everyone, here are my dev blog notes for the new Accessibility options. First, what to expect with the next release:

- Full subtitles for all voice lines in cutscenes and gameplay

- Descriptive captions for key sound effects in cutscenes and gameplay, such as [Door clanking] and [Alien hissing]

- New Accessibility menu with many customization options:

- Enable/disable captions and subtitles separately for gameplay and cutscenes

- Adjust font size and color

- Adjust text background opacity, with optional border

- Adjust how many lines of text are displayed during gameplay

- Adjustable volume threshold for gameplay (sound effects that are quieter than the specified volume are not captioned; good for eliminating captions for distant sound effects)

- Subtitles/captions are defined in a user-editable file (please note only ASCII is supported for the next release)
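The volume threshold, line count, and per-category options above can be sketched as a simple filter over the currently active captions. In this hypothetical Python sketch, the `Caption` record, its category strings, and the 0.0..1.0 volume scale are assumptions for illustration, not the format used by TFE's caption system.

```python
from dataclasses import dataclass

@dataclass
class Caption:
    text: str
    category: str   # hypothetical categories, e.g. "voice" or "effect"
    volume: float   # playback volume of the triggering sound, 0.0..1.0

def visible_captions(active, threshold, max_lines, enabled_categories):
    """Return the captions to draw this frame.

    Captions in disabled categories, or whose sound is quieter than the
    threshold (e.g. distant effects), are dropped; then only the most recent
    max_lines entries are kept.
    """
    shown = [c for c in active
             if c.category in enabled_categories and c.volume >= threshold]
    return shown[-max_lines:] if max_lines > 0 else []
```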

Post-1.10

Level Editor

A major focus, once version 1.10 releases, will be on the TFE level editor and supporting editors. During this phase, some of the new engine features from Outlaws will be integrated and tools developed to use those features. This is the first way that previous and future Outlaws work will be integrated in a visible way. That said, there is little point in implementing new level-based features until there are tools that can be used to test them.

There are other long-standing features that will be added during this period as well, along with tools, such as the long-awaited voxel support that was prototyped before version 1.0 shipped.

Outlaws Support

In the background, Outlaws work has already begun. Initial work involves looking at the low-level engine code and loaders, with the goal of being able to load and fly around in levels. This initial work will also be used to integrate some of the Outlaws engine features as options for new Dark Forces mods.

After the initial Level Editor release, most of the focus will shift to Outlaws support, but the level editor and other editors will be updated over time - to iterate on the tools, make improvements, and fix bugs; but also to add more Outlaws specific features for testing.

16 Jan 2023

Version 1.02 Release

Version 1.02 has finally been released, containing many bug fixes as well as several quality-of-life improvements to TFE. With this release, several user levels that could not be finished are now completable, and many INF, collision, and jumping issues have been resolved. In addition, the GPU Renderer now behaves much closer to the software renderer in many ways, especially in relation to exterior adjoin behavior. Finally, the Sound UI has seen a large improvement, with the ability to select output devices and reset to defaults. TFE will also attempt to use several different audio APIs when the default does not work due to driver issues.

Version 1.10

This doesn’t solve all of the bugs, of course, but I think it puts the project on solid ground when it comes to playing the core game and most user levels. In the previous post on Upcoming Plans, I discussed the early 2023 plans for The Force Engine. I plan on starting the next step in the plan, Version 1.10 - the official Linux release - this week. The plan is to use the Steam Deck as a test platform in order to ensure that TFE supports the device well. I will be starting from existing Pull Requests (PRs) that have already been submitted, as well as building native TFE support for sound fonts and midi synthesis using the audio system. This will, in turn, require the audio system to be upgraded to handle higher frequency output (either 44.1 kHz or 48 kHz), which will enable support for HQ audio later this year.

Next Steps

During this process, I will still fix bugs, of course, but that won’t be the focus for a while (except for critical issues of course). And once version 1.10 has been released, the plan is to move on to Version 1.11 - which will be full color support, along with various optional visual enhancements and image quality improvements. See the Upcoming Plans post for more details.

Final Words

The Version 1.0 Release of The Force Engine has been a great success overall. Projects like these take time to mature, and people found many bugs and issues - just look at the massive list of fixed issues in version 1.02 (see Downloads). Yet the release went really smoothly and many people had a great experience playing Dark Forces using TFE. And, for that, I largely credit the many individuals listed in the credits who tirelessly tested the game and reported the many, many issues they found along the way.

So, thanks everyone for testing!

06 Jan 2023

Version 1.02

I am currently working towards version 1.02 - this post will cover what has already been done and what I am still planning to do. Players have found a number of issues, especially with mods, in the release version. So version 1.02 will be another bug-fix release with some minor quality-of-life improvements as well.

Improvements Already Completed

- Alt+Enter to toggle fullscreen (in addition to F11).

- Secret Percentage update after loading from a save, fixed LADATA save percentage issue.

- Fixed layer keys swapped in PDA.

- Frame rate limiter (still needs the UI).

- Fixed a crash when playing Evacuation of Hoth due to a frame scenery object being destroyed.

- Fixed a bug where sprites didn’t always light up correctly when attacking.

- Fixed a bug where the Probe Droid would stay fullbright after firing.

Improvements Still In Progress

Version 1.10 - Linux Support

The main goal of version 1.10 is to add official Linux support and clean support for the Steam Deck. Mac support is planned but will probably happen a bit later.

- Integrate Linux PRs already submitted.

- Fix sound issues on Linux.

- Add support for midi synthesis and sound fonts.

- Add support for higher audio processing frequencies, to support midi synthesis. This will enable high quality audio support later this year.

Version 1.11 - True Color Support

- DirectX 10/11 render backend to better support GPUs with poor or non-existent OpenGL drivers on Windows, such as some integrated Intel GPUs.

- Vulkan render backend support for better GPU support on Linux.

- True color render support - with options for texture filtering and antialiasing. This will also include support for 8-bit color lighting + color map interpolation.

- Bloom post-fx (optional).

- Better low-end support for the software renderer.

- I might add dynamic light support as well, or maybe wait until a later release. This will depend on how long it takes to get this release out.

Version 1.12 - Voxel Support

- Integrate the experimental voxel loading and rendering code.

- Add GPU voxel rendering support.

- Improve “vox” asset loading.

- Add metadata support for voxel WAX/Frame/3DO replacements.

- Add metadata support for voxel texture replacements (for switches).

- Add a “maphacks” equivalent to TFE for fine-tuning voxel object positioning.

Version 1.20 - Editor

- Bring back the asset visualization tools (with the idea of making them more fully featured in future versions).

- Bring back the level editor.

- Initial level editor release.

24 Dec 2022

2022 Retrospective

2022 was a hectic year for The Force Engine. With the basic cutscenes working at the end of 2021, I had high hopes of quickly getting iMuse out of the way. As it turned out, there was a lot of change at the beginning of the year, and development slowed for quite a few months. But during this initial period, the reverse-engineering process on the iMuse library began, which was itself a massive endeavor. The iMuse library not only handles midi, but also digital audio mixing for Dark Forces. The iMuse system supports blending, fading, conditional loops and jumps, and even callbacks into game code. It will even sustain specific notes between songs in order to smooth out transitions. In mid-May, the iMuse system was finally released as version 0.9. Though the year was already half over, I still had high hopes of releasing version 1.0 in 2022.

Once iMuse was complete, I made two somewhat risky decisions: 1) that the GPU Renderer would be part of the version 1.0 release, and 2) to also add a save and load system to the release. I felt confident that both tasks could be completed in the remaining 6 months, though it would certainly turn out to be a close call.

GPU Renderer

I had always planned to add a GPU Renderer, for several reasons, but mainly to support high resolutions and high framerates. The other main reason was to support perspective-correct pitch, since many found “y shearing” uncomfortable with mouselook. I also want TFE to be an attractive target for future mods and Dark Forces development, which means being forward looking.

The GPU Renderer itself is somewhat of a strange beast; it doesn’t really act like a modern game renderer. It takes the idea of the 1D z-buffer in Jedi and extends it to 2D, all while supporting looking straight up and down like a modern renderer. There is no sector triangulation involved; it directly extends the original algorithms into 3D. So what does this do? It allows the renderer to behave very much like the software renderer: there are no sprite or object clipping issues with the floor and ceiling, and sprites sort in front of or behind walls like in the original. Portals behave properly, allowing for all sorts of non-euclidean effects. Lighting and shading match the original, even the dithering on 3D objects, which shows up best at low resolution. Color map effects are preserved.
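To make the 1D z-buffer idea concrete, here is a minimal sketch of a per-column depth buffer of the kind classic 2.5D renderers use for sprite occlusion. The class and method names are hypothetical and this is not TFE or Jedi code; it only shows the basic depth test that the GPU Renderer generalizes from one value per screen column to one value per pixel.

```cpp
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical per-column ("1D") depth buffer. Walls record the depth of the
// nearest solid surface in each screen column; sprite columns are drawn only
// where they are closer than that stored depth.
class ColumnDepthBuffer {
public:
    explicit ColumnDepthBuffer(std::size_t width)
        : depth_(width, std::numeric_limits<float>::infinity()) {}

    // Record a wall covering column x at distance z, keeping the nearest one.
    void writeWall(std::size_t x, float z) {
        if (z < depth_[x]) depth_[x] = z;
    }

    // True if a sprite column at distance z is in front of the wall in column x.
    bool spriteVisible(std::size_t x, float z) const { return z < depth_[x]; }

    // Number of visible columns for a sprite spanning [x0, x1) at depth z.
    std::size_t visibleColumns(std::size_t x0, std::size_t x1, float z) const {
        std::size_t count = 0;
        for (std::size_t x = x0; x < x1 && x < depth_.size(); ++x) {
            if (spriteVisible(x, z)) ++count;
        }
        return count;
    }

private:
    std::vector<float> depth_;  // one depth value per screen column
};
```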

The other important aspect was good performance and avoiding OpenGL pitfalls. This meant pushing as much of the work as possible to the GPU while limiting driver overhead and CPU to GPU bandwidth. Final geometry generation, clipping, and shading had to occur on the GPU. As a result, the sector data is uploaded to GPU memory and updated only as needed, and a sort of “display list” of wall, floor and ceiling parts is built that passes along the wall flags, texture ID, and other compacted data. The shaders then read the compacted data, map it to the semi-static data stored in memory, and set up the geometry correctly. Portal planes are read and used to set up GPU clip planes. The texture ID is used to look up a texture table that maps to an array of texture atlases (essentially virtual textures).

As a result, all sector data is rendered in two draw calls, sprites in one or two draw calls, and 3D objects in one draw call per object, no matter how many textures or shading modes they use. CPU to GPU bandwidth is minimized, and much of the work that would traditionally be done on the CPU is pushed to the GPU. All of this uses only the feature set offered by OpenGL 3.3.
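As an illustration of the compacted “display list” idea, the sketch below packs a part into a single 32-bit word and resolves its texture ID through a table of atlas entries, the same lookup a shader would perform. The bit layout, field widths, and names are assumptions made for this example; TFE's actual format is not described in this post.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical layout of one 32-bit part record:
// bits 0-15 = wall/sector index, bits 16-27 = texture ID, bits 28-31 = flags.
constexpr std::uint32_t packPart(std::uint32_t index, std::uint32_t textureId, std::uint32_t flags) {
    return (index & 0xFFFFu) | ((textureId & 0xFFFu) << 16) | ((flags & 0xFu) << 28);
}
constexpr std::uint32_t partIndex(std::uint32_t p) { return p & 0xFFFFu; }
constexpr std::uint32_t partTexture(std::uint32_t p) { return (p >> 16) & 0xFFFu; }
constexpr std::uint32_t partFlags(std::uint32_t p) { return p >> 28; }

// Texture table entry: which atlas in the atlas array holds the texture, and where.
struct TextureEntry {
    std::uint16_t atlas;  // index into the array of atlases
    std::uint16_t x, y;   // offset inside the atlas, in texels
    std::uint16_t w, h;   // size in texels
};

// Resolve a part's texture: texture ID -> table entry -> atlas rectangle.
inline const TextureEntry& lookupTexture(const std::vector<TextureEntry>& table, std::uint32_t part) {
    return table.at(partTexture(part));
}
```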

Save System

At this point in development, there were a number of latent crashes hidden in the code due to global state issues in the original code. In addition, exiting back to the menu from in-game was fraught with bugs and issues. Something needed to be done to get a handle on all of the global, DOS-style state. So I spent a large amount of time cleaning up the global state situation, collapsing state into structures and properly setting and clearing these structures to known values. While thinking about this, it occurred to me that I could also handle serialization at the same time. And so, I decided to implement the save system in version 1.0.

In order to build a robust system and avoid breaking save compatibility, I planned out my approach:

- Versioning as a first-class consideration.

- Every read and write specifies the desired version and a default value.

- Reading and writing occur in the same functions, often with the exact same code.

- Serialization is to binary data and should be fast.

- Saves store the mods used, so you can load directly from the save without having to select your mod(s) first.

- Saves act somewhat like save states - full level state, AI state, player state, INF state, and game state is stored. This includes a list of all the per-level assets used. When loading from a save, the original level data is not loaded - it is reconstructed from the save itself. Assets, like textures, are loaded as usual, of course.
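One way to meet the first three goals is sketched below: a single `field()` call either writes a value or reads it back, and each call names the version that introduced the field plus a default for older saves. The `Serializer` class and the `PlayerState` fields are hypothetical, not TFE's actual save code, and the raw byte copy ties the format to the host's endianness, which a real save format would need to pin down.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <type_traits>
#include <utility>
#include <vector>

// Keeps the default-value parameter out of template argument deduction.
template <typename T>
struct NonDeduced { using type = T; };

// Minimal versioned binary serializer (hypothetical). The same field() call
// writes when saving and reads when loading.
class Serializer {
public:
    static constexpr std::uint32_t kCurrentVersion = 2;

    // Writing always produces data in the current version.
    Serializer() : writing_(true), version_(kCurrentVersion) {}
    // Reading: `version` is the version recorded in the save being loaded.
    Serializer(std::vector<std::uint8_t> data, std::uint32_t version)
        : writing_(false), version_(version), buffer_(std::move(data)) {}

    // `addedIn` is the version that introduced the field. Older saves do not
    // contain it, so reading one of them falls back to `def`.
    template <typename T>
    void field(std::uint32_t addedIn, T& value, const typename NonDeduced<T>::type& def) {
        static_assert(std::is_trivially_copyable_v<T>, "sketch handles plain data only");
        if (version_ < addedIn) {
            if (!writing_) value = def;
            return;
        }
        if (writing_) {
            const auto* bytes = reinterpret_cast<const std::uint8_t*>(&value);
            buffer_.insert(buffer_.end(), bytes, bytes + sizeof(T));
        } else {
            if (pos_ + sizeof(T) > buffer_.size()) throw std::runtime_error("truncated save");
            std::memcpy(&value, buffer_.data() + pos_, sizeof(T));
            pos_ += sizeof(T);
        }
    }

    const std::vector<std::uint8_t>& data() const { return buffer_; }

private:
    bool writing_;
    std::uint32_t version_;
    std::vector<std::uint8_t> buffer_;
    std::size_t pos_ = 0;
};

// Example state; `lives` is pretended to have been added in version 2.
struct PlayerState {
    std::int32_t health = 100;
    std::int32_t shields = 0;
    std::int32_t lives = 3;
};

// One function serves both saving and loading.
inline void serializePlayer(Serializer& s, PlayerState& p) {
    s.field(1, p.health, 100);
    s.field(1, p.shields, 0);
    s.field(2, p.lives, 3);
}
```

Because the same function drives both directions, a field added later cannot be written without also being read, which is the main way this style protects compatibility.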

The Save System has already paid dividends and made the next phase of development much easier.

The Final Push

In the final weeks, builds were coming out almost daily. Every night after work, I was churning through the bug list as people found yet more bugs (for which I am grateful). But slowly, things started coming together. I made the final version 1.0 list and, within a surprisingly short time, managed to finish it. Many important issues were found and fixed in those final weeks, some of which had been in the project for months or years. And then, after about three years of development, it was finally time to release version 1.0.

Of course the process isn’t over. There are still bugs, though only a few that affect gameplay. But overall, despite the large increase in testing, the release went really smoothly. And that is mostly thanks to the tireless efforts of everyone listed in the credits: the people who played through the game multiple times, who played various custom levels, who did weird things and got TFE to crash.

2023 Plans

2023 promises to be an exciting year for The Force Engine. Initially there will be a focus on fixing issues with the version 1.0 release. A lot of that has been done already with version 1.01, but there are still some bugs that need to be resolved and features that would be nice to have, such as being able to select audio and midi devices from the menu. There are a few major areas of development that will be the focus for 2023.

I list items in a specific order, but in reality I expect more overlap. For example, Vulkan support might not be done immediately, or Metal support might be added very late in development. The editors will also be an ongoing project extending well past the point where Outlaws development begins. There will be some parallel development, shifting of priorities and other changes as we go.

And like all plans, things may change in response to other events, findings, or just changes in perspective. None of this is set in stone.

Others have already spent a lot of time helping to add support for Linux. My goal is to take that work and make any other changes and fixes needed to make TFE work well on Linux, especially the Steam Deck, as well as OS X. These efforts include:

- Support for midi synth and sound fonts.

- High quality audio support in the engine, as a result of the above.

- Fix other sound issues on Linux/Mac.

- Proper CMake build system.

- Support for keyboard and controller control of the System and Game UI.

- DirectX, Vulkan, and potentially Metal backends. The current render backend was designed with this in mind, so I expect this to go fairly smoothly. This will also help with low-end Intel GPUs without proper OpenGL drivers, as well as newer models with poor or emulated drivers.

During the development of TFE, I had built a level editor to help me visually inspect the data in a friendly way. I integrated the editor directly into TFE for quick iteration and debugging. And I plan on using that for Outlaws. Unfortunately, the editor had to be removed because the engine just changed too much and it was getting in the way of development. So in early 2023 I want to bring it back, refactor the code and get it into a usable state. Various other tools are also in the mix, such as being able to view, import and export textures, WAXes, and so forth.

So why a new editor? Why not use WDFuse or even earlier options? Here are the features the editor supports (or will support):

- The ability to directly draw sectors, and place objects.

- CSG support - union, subtraction, clipping, and more.

- Full 3D editing support with the same UI. An entire level can be built using only the 3D editing tools.

- Modern UI and tools.

- Support for both Dark Forces and, later, Outlaws.

- Built-in INF editing with helper tools.

- And more.

True Color Rendering

True color rendering is also planned and needed for Outlaws. This will include new features such as dynamic lighting, antialiasing, and post processing options. This also means that texture filtering will become available, including anisotropic filtering and mipmapping.

Voxels

This means finally taking the prototype voxel work I did during TFE development and making it “real.” The software renderer support was basically done, but the remaining work includes GPU Renderer support, definition/replacement metadata, and “map hacks” so that objects can be repositioned in levels.

Outlaws

And finally, the last major project of the year: reverse-engineering work on Outlaws, using the Dark Forces code as a base. Like Dark Forces support, this will start showing up as bits and pieces that can be tested as development continues. Outlaws Jedi enhancements and features will also be added to the editors as progress continues, and made available for new Dark Forces mods.

19 Dec 2022

After 3 years of development, I am ready to announce the Version 1.0 Release of The Force Engine (TFE). The Force Engine is a project with the goal to reverse engineer and rebuild the Jedi Engine for modern systems and the games that used that engine - Dark Forces and Outlaws. For version 1.0, Dark Forces support is complete but Outlaws is not yet playable. Full Outlaws support is planned in the future, for version 2.0.

For Dark Forces, the goal is for TFE to act as a viable replacement for DosBox and the original executable for most players, and to be used to play not only the vanilla levels but also the many user mods developed for the original game. I believe that goal has finally been met with the release of version 1.0. The Force Engine provides modern conveniences and control methods and removes the need to set up DosBox and deal with cycles-based bugs, such as getting stuck on ice or the missiles fired by the final boss moving too fast or not moving at all. While TFE supports modern GPU and high resolution software rendering, the original 320x200 fixed-point renderer has been preserved, keeping the DOS experience for those who want it.

Like a traditional source port, you need the original game to play. TFE replaces the executable, not the game.

Update

Version 1.01 has been released that addresses several issues in the initial release.

Current Features

- Full Dark Forces support, including mods. Outlaws support is coming in version 2.0.

- Mod Loader - simply place your mods in the Mods/ directory as zip files or directories.

- High Resolution and Widescreen support - when using 320x200 you get the original software renderer. TFE also includes a floating-point software renderer which supports widescreen, including ultrawide, and much higher resolutions.

- GPU Renderer with perspective correct pitch - play at much higher resolutions with improved performance.

- Extended Limits - TFE, by default, will support much higher limits than the original game which removes most of the HOM (Hall of Mirrors) issues in advanced mods.

- Full input binding, mouse sensitivity adjustment, and controller support. Note, however, that menus currently require the mouse.

- Optional Quality of Life improvements, such as full mouselook, aiming reticle, improved Boba Fett AI, autorun, and more.

- A new save system that works seamlessly with the existing checkpoint and lives system. You can ignore it entirely, use it just as an exit save so you don’t have to play long user levels in one sitting, or full save and load with quicksaves like Doom or Duke Nukem 3D.

- Optional and quality of life features, even mouselook, can be disabled if you want the original experience. Play in 320x200, turn the mouse mode (Input menu) to Menus only or horizontal, and enable the Classic (software) renderer - and it will look and play just like DOS, but with a higher framerate and without needing to adjust cycles in DosBox.

Links

The website has additional links to the forums, Discord channel, and GitHub repository.

System Requirements

In early 2023, TFE will gain official cross-platform support for both Linux and Mac. Until then, Windows is required.

- Windows 7, 64-bit

- GPU with OpenGL 3.3 or better compatibility

Note that there are plans to lower the requirements for using the classic software renderer in the future. However, the minimum requirements for GPU Renderer support are here to stay. For now only OpenGL is supported, which might limit the use of some older Intel integrated GPUs that would otherwise be capable. There are near-term plans to add DirectX 10/11, Vulkan, and maybe Metal render backends which should enable more GPUs to run the engine efficiently.

Bugs and Issues

Like any project of this nature, and any new release - there will still be bugs. Some of these bugs will be DOS bugs that can be reproduced in the original game - these bugs are unlikely to be changed anytime soon. Other bugs will be TFE related. For those, please report them on the forums or GitHub.

27 Nov 2022

With the recent 0.93 release, The Force Engine is now feature-complete for the version 1.0 release. Since then, I have been busy burning through bugs and issues but there is still work to be done. Now that the build seems to be stable and many AI and INF issues have been fixed, I decided to compile a list of bugs and tasks remaining for version 1.0.

I have made these items checkboxes, so that I can update this list as progress is made.

Version 1.0 Task List

Polish

- [ ] Add a portable option which will cause TFE to store everything (screenshots, settings, saves) in the local TFE directory instead of /Documents/TheForceEngine. (Post 1.0, planned to align with cross-platform work)

General

GPU Renderer

Software Renderer

23 Nov 2022

Save System Release

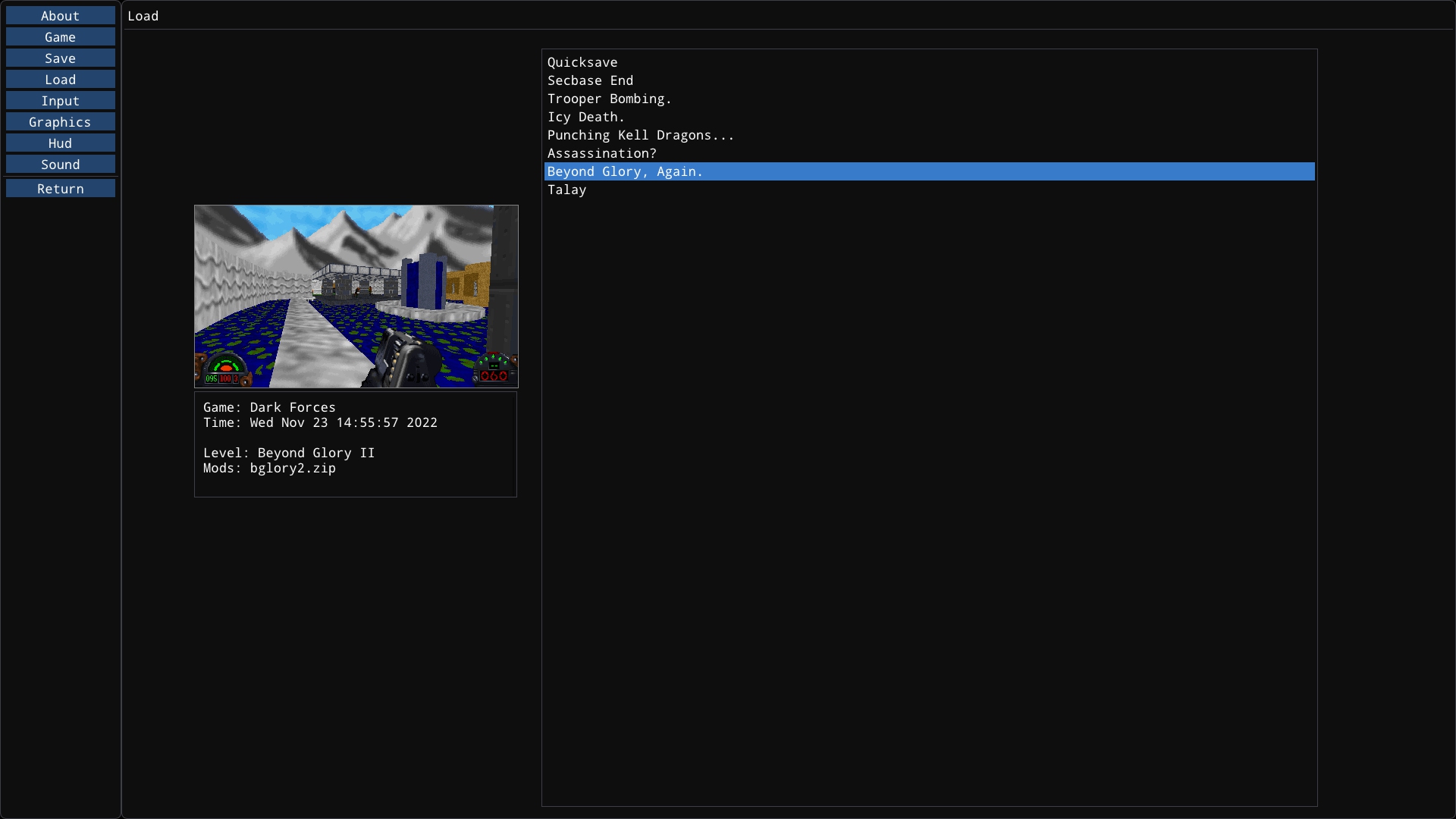

The main feature of the version 0.93 build of The Force Engine is the Save System, along with Quick Saves.

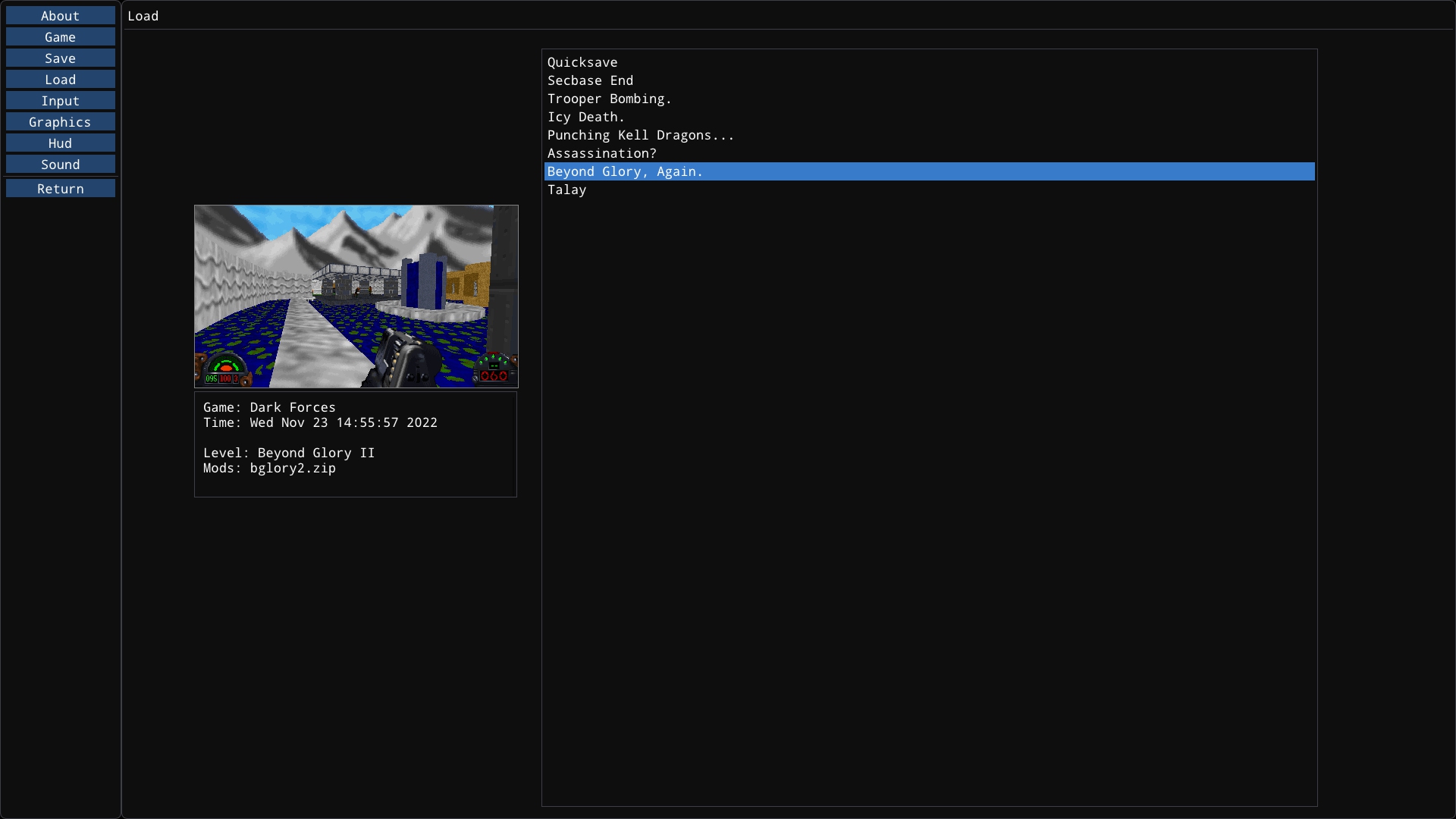

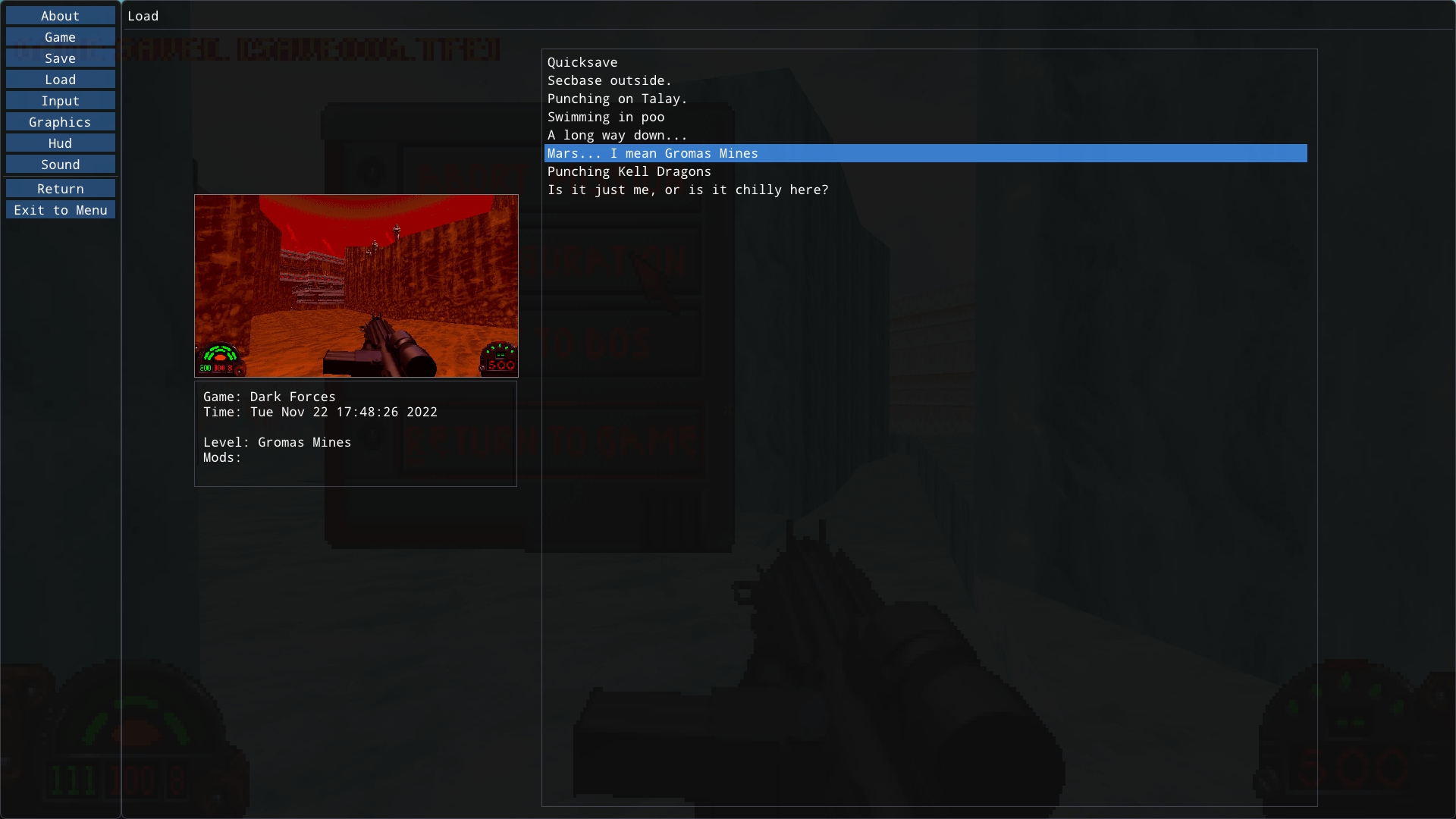

Most of the game and level state is serialized, so that games can be saved and then continued later. It tracks the “Agent” data, which can be reconstructed if needed, so that you can continue the game or abort and play as normal. The original lives/checkpoint system is still there; the save system preserves that data and does not interfere with the core game systems. Using saves and quicksaves is an optional feature and can be completely ignored if desired.

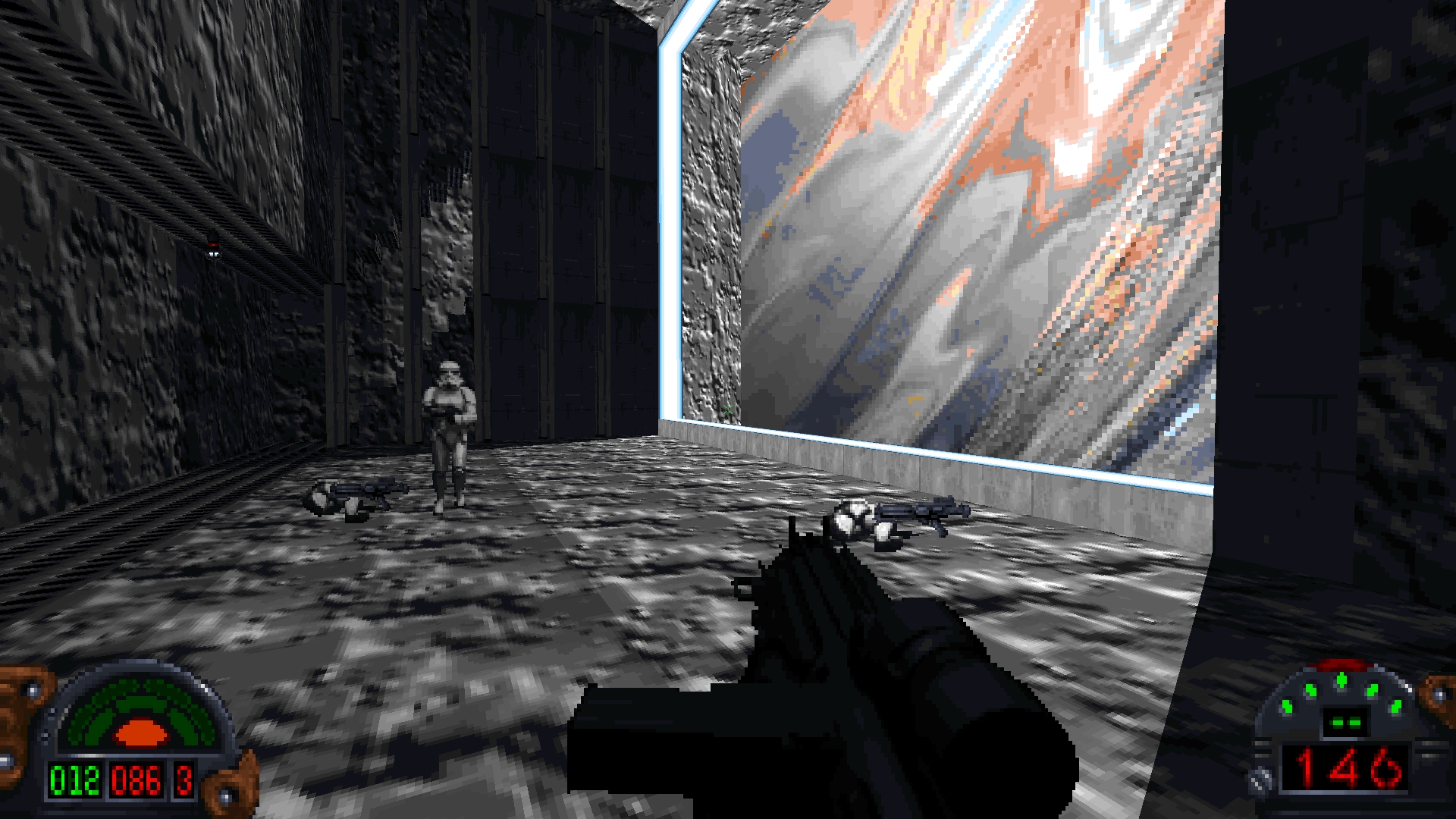

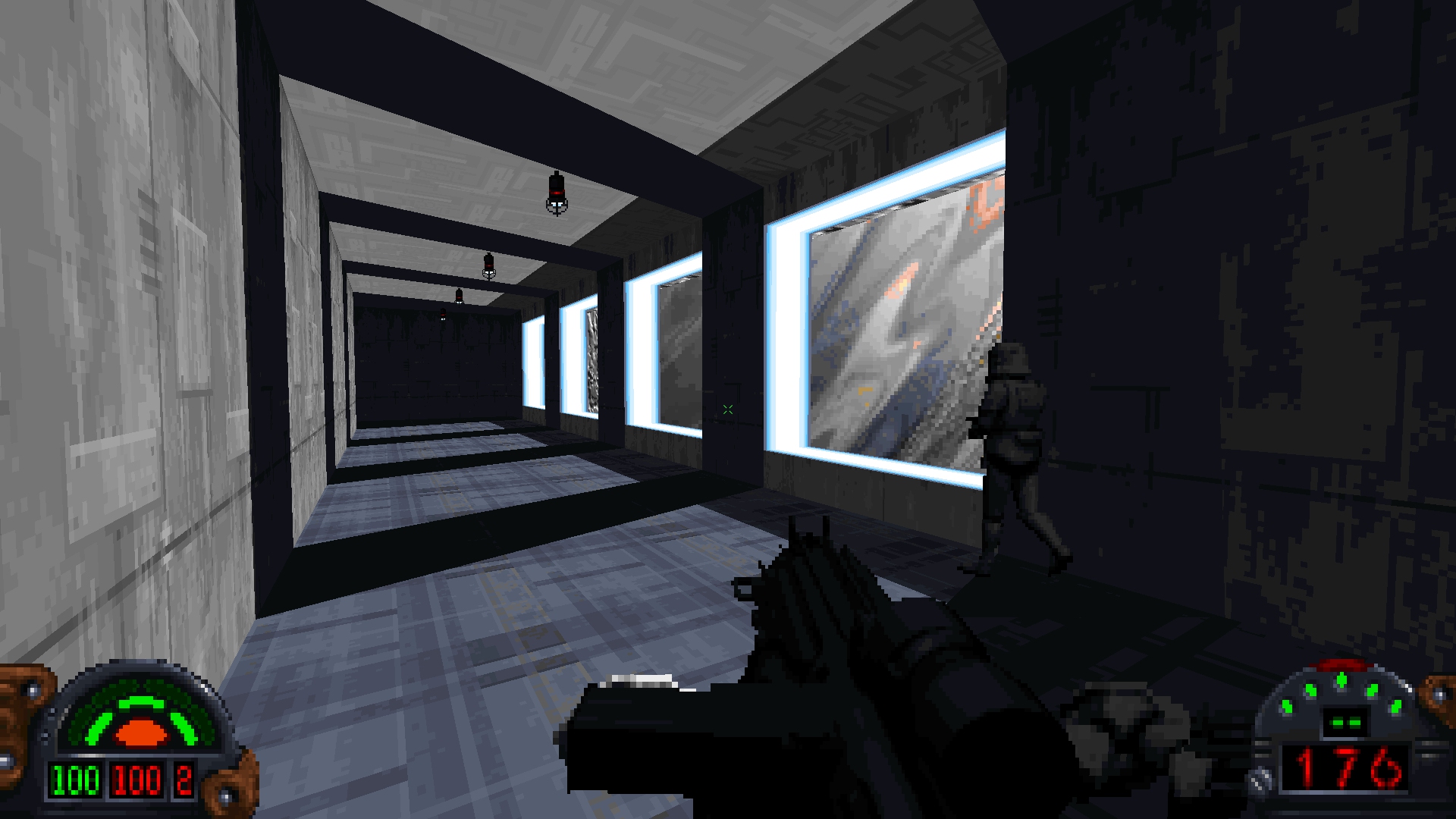

When the game is saved, metadata is preserved, such as the time and date, a small screenshot, the name of the level, and all mods being used. Using this, it is possible to directly load a save even if you were playing a mod; the system will automatically load the same zips and gobs.

Many other fixes were made during this process including:

- Fixed a “random” crash caused by invalid addressing in the sound system.

- Fixed an issue with level progress not being preserved if you exited immediately after completing a level.

- Fixed pitch issues with turrets; they should look much more accurate now.

- Fixed mouse speed issues with the in-game menus.

- Improved the responsiveness of the PDA menu.

- And many other minor fixes.

Version 1.0

With this release, The Force Engine is feature-complete for version 1.0. We are now on the last leg of the journey to version 1.0 - the focus will be on fixing bugs and polishing for the 1.0 release.

30 Oct 2022

GPU Renderer Beta Release

The main feature of the version 0.92 build of The Force Engine is the Beta release of the GPU Renderer. The GPU Renderer is a port of the software Jedi Renderer to the GPU, targeting OpenGL 3.3+ hardware.

GPU Renderer

The GPU Renderer has several advantages to help make playing Dark Forces (and eventually Outlaws) smoother using TFE.

- Greatly improved performance when using well supported GPUs - several orders of magnitude improvement on newer GPUs at higher resolutions.

- Greatly improved performance scaling with resolution.

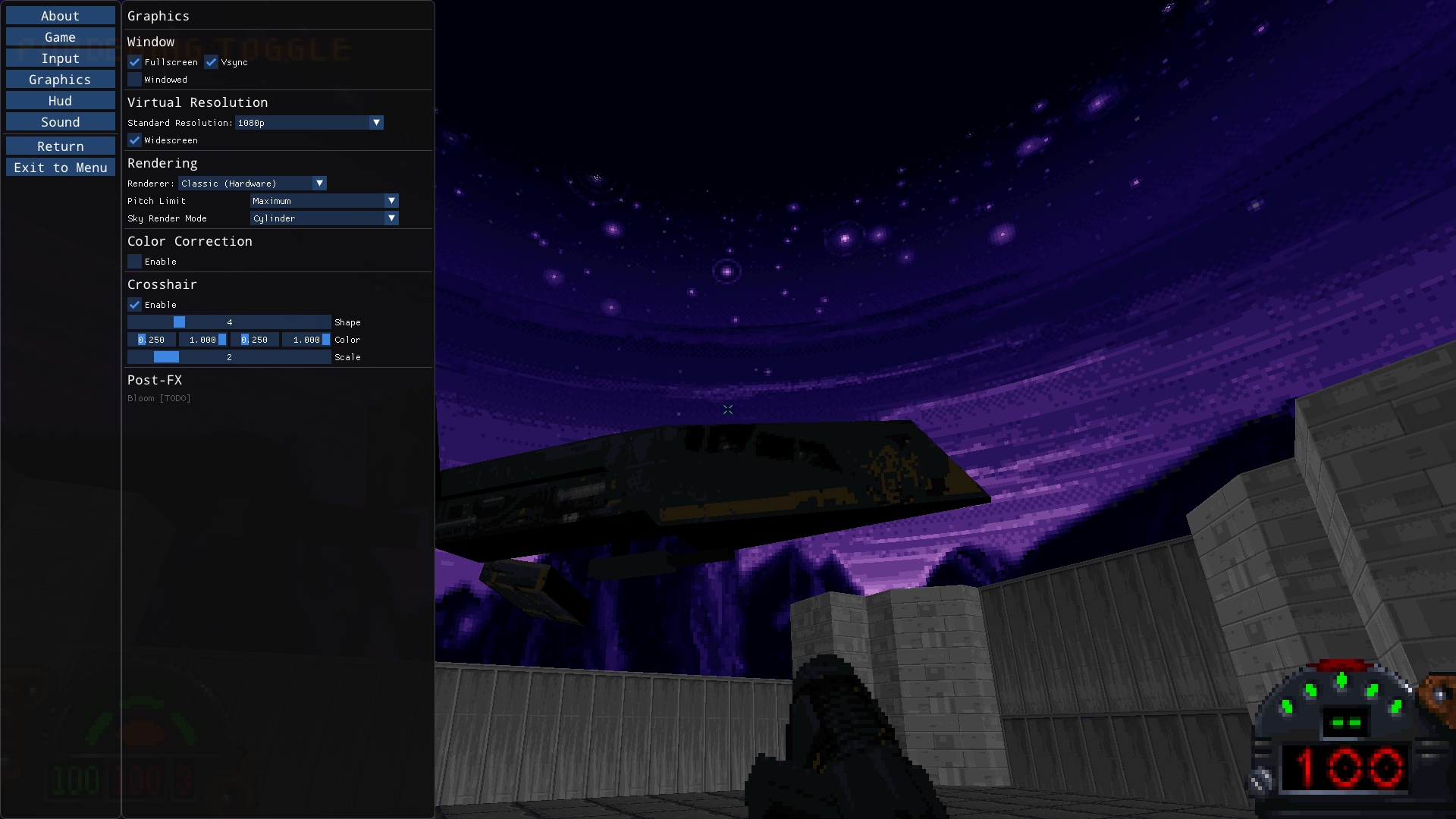

- Perspective correct pitch - meaning no more distortion when looking up and down.

- The ability to look almost straight up and down, depending on settings.

- Cylindrical sky projection to avoid distortion when looking up and down, though the vanilla sky projection is still available.

- Perspective correct 3DO rendering. This fixes the texture swim / distortion on 3DO geometry, which makes 3D objects used as geometry, such as bridges, much more seamless.

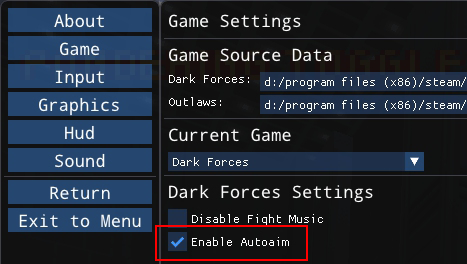

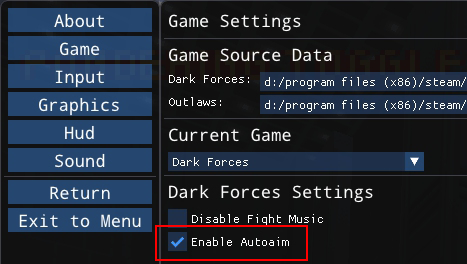

- The ability to disable autoaim if desired. This last option isn’t dependent on the GPU Renderer, but was included anyway.

Known Bugs

The GPU Renderer is still considered Beta and will have bugs. Here are the known bugs so far. These bugs will be fixed for version 1.0.

- The Escape Menu does not show the proper grayscale background when using the GPU Renderer (this still works correctly for the CPU renderers).

- There is still some minor incorrect sprite clipping.

- There is at least one place where intersecting adjoins (portals) cause HOM.

- There is occasional z-fighting due to a part size bug.

Version 1.0

The next step, towards version 1.0, is to finish the Quick Save feature. Currently it is roughly 50% complete and I plan on finishing it up next before getting back to GPU Renderer bugs. Once that is complete, TFE will be feature complete for version 1.0. After that I will spend a few weeks going through the bugs, especially any crash or accuracy related bugs, in order to finish version 1.0.

New Features

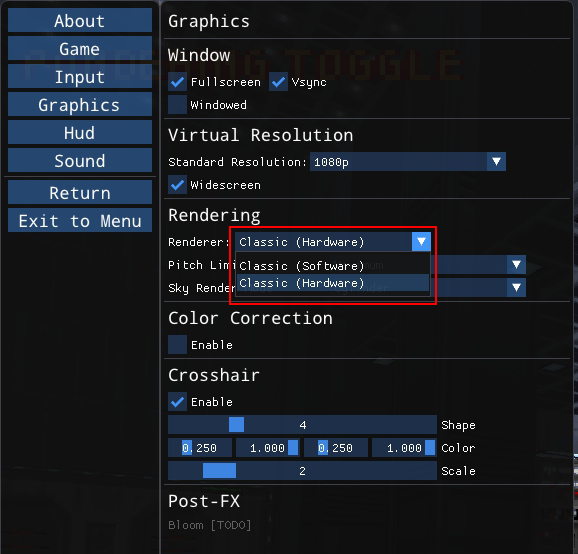

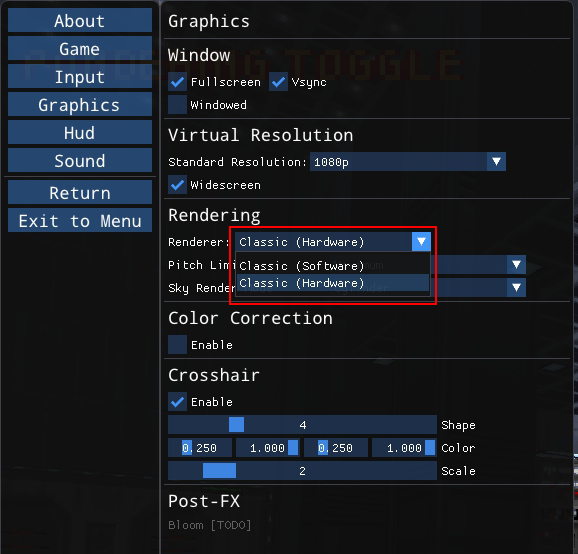

Renderer Select

To change renderers, go to Configuration in the Escape Menu, and then select Graphics. From there find Renderer, which you can change from Software to Hardware.

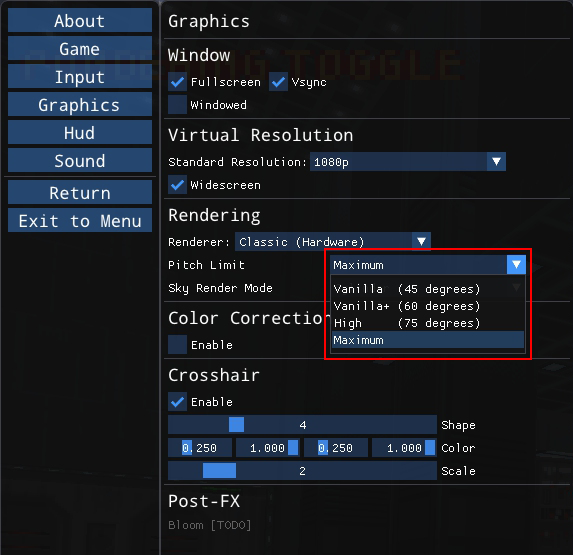

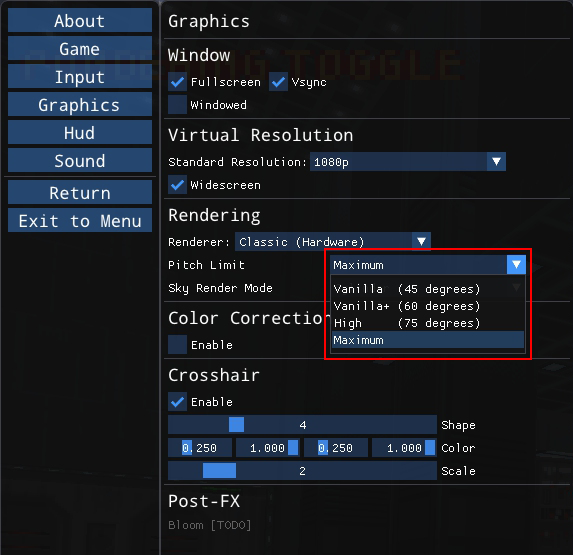

Pitch Limit

Because pitch is now perspective correct, looking up and down can cause sprites to seem flat. To help with this, TFE can limit the pitch to fixed amounts, giving you the freedom to look up and down without distortion while not making the sprites look too flat. The default is Vanilla+ (60 degrees). The Maximum setting allows you to look up nearly 90 degrees.
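To illustrate the difference between y-shearing and perspective-correct pitch, the sketch below projects a camera-space point both ways and clamps pitch to a limit. The names, the conventions (y up, z forward, angles in radians), and the focal-length parameter are assumptions for this example, not TFE's renderer code.

```cpp
#include <algorithm>
#include <cmath>

// Camera-space point: x right, y up, z forward.
struct Point3 { float x, y, z; };

// Y-shearing: the projection is unchanged and the image is simply shifted
// vertically by f * tan(pitch). Cheap, but distorts as pitch grows.
inline float shearScreenY(const Point3& p, float pitch, float f) {
    return f * p.y / p.z - f * std::tan(pitch);
}

// Perspective-correct pitch: rotate the point into the pitched camera frame, then project.
inline float rotatedScreenY(const Point3& p, float pitch, float f) {
    float c = std::cos(pitch), s = std::sin(pitch);
    float y = p.y * c - p.z * s;
    float z = p.y * s + p.z * c;
    return f * y / z;
}

// Pitch limit, e.g. about 1.047 radians (60 degrees) for a "Vanilla+"-style setting.
inline float clampPitch(float pitch, float limitRadians) {
    return std::clamp(pitch, -limitRadians, limitRadians);
}
```

Both methods place the point straight along the view direction at the screen center, but they disagree for points above or below it, and the gap widens with pitch.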

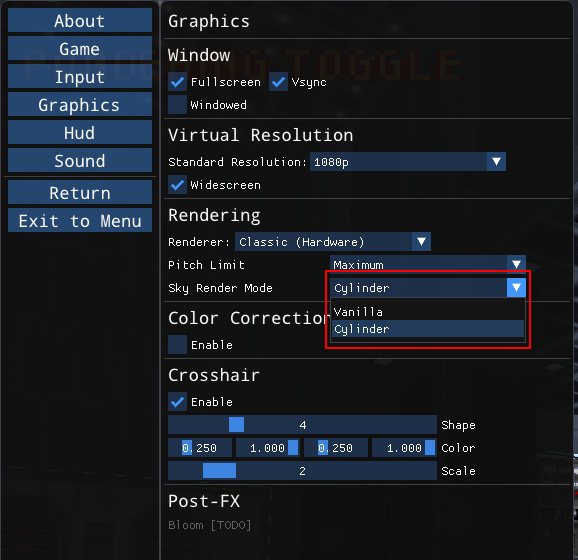

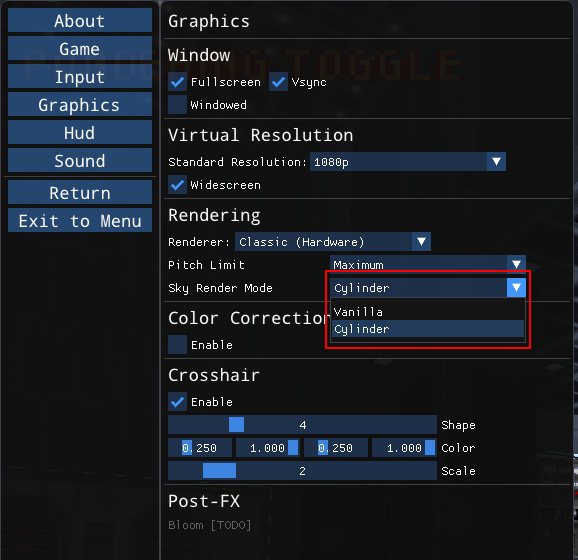

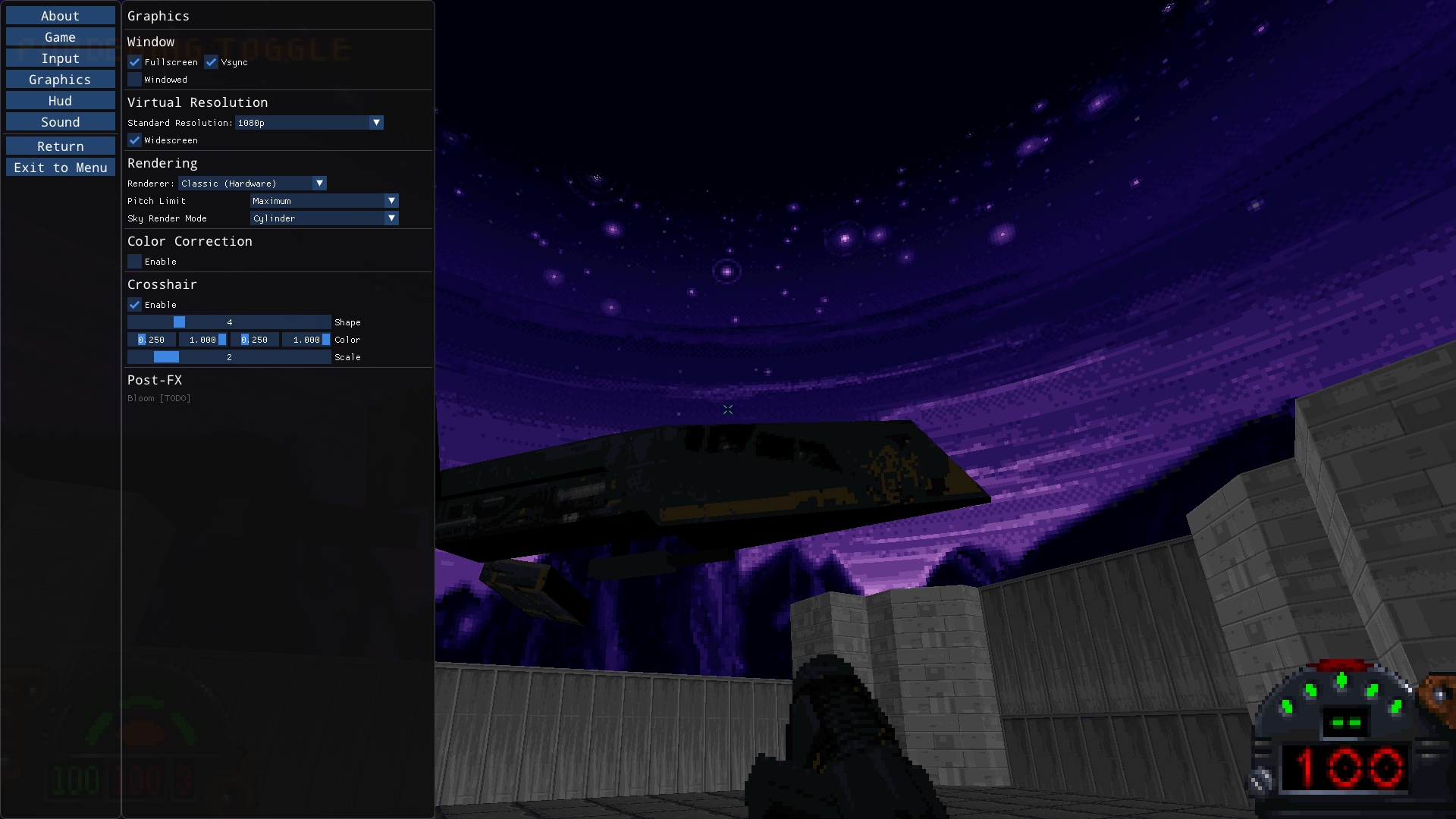

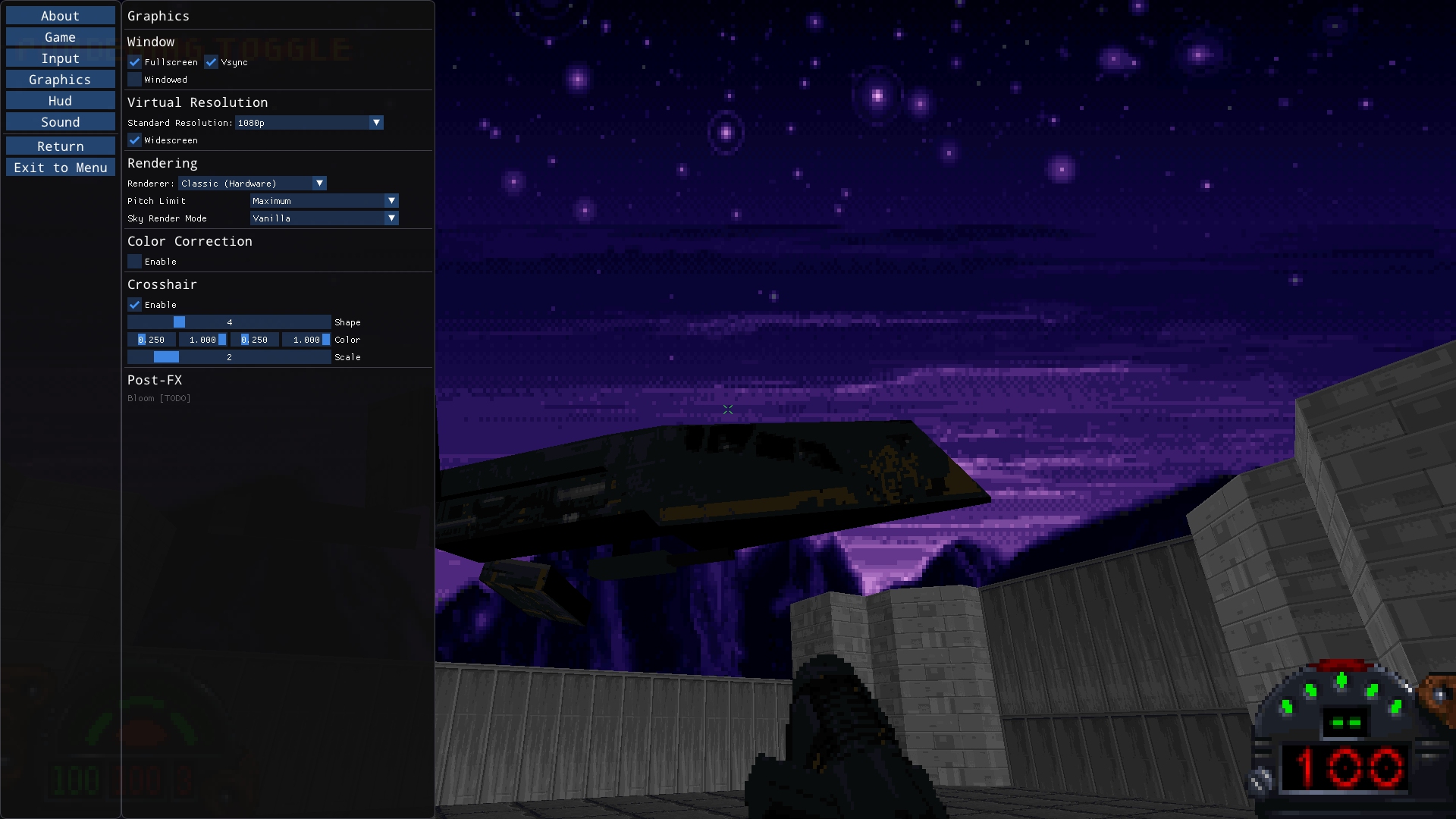

Sky Mode

By default the GPU Renderer will use a Cylindrical projection to avoid sky distortion when looking up and down. This feels more natural than vanilla projection but looks different. If you want the game to look closer to vanilla, you should choose the Vanilla option here.

Cylindrical

Vanilla
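One common way to build a cylindrical sky mapping is sketched below: the horizontal angle of the view ray picks the texture column, and the height at which the ray hits a unit-radius cylinder around the viewer picks the row. This is a generic technique shown under stated assumptions (y up, z forward, texture wrapping once per revolution), not the GPU Renderer's actual shader.

```cpp
#include <cmath>

struct SkyUV { float u, v; };

// Map a view ray direction (dx, dy, dz) to sky texture coordinates using a
// cylinder around the viewer. The result depends only on direction, so the
// sky stays fixed in the world as the camera pitches instead of shearing.
inline SkyUV cylindricalSky(float dx, float dy, float dz) {
    const float kPi = 3.14159265358979f;
    float yaw = std::atan2(dx, dz);              // -pi..pi around the viewer
    float horiz = std::sqrt(dx * dx + dz * dz);  // length in the ground plane
    SkyUV uv;
    uv.u = yaw / (2.0f * kPi) + 0.5f;            // one texture wrap per revolution
    uv.v = dy / horiz;                           // height on the cylinder (tan of elevation)
    return uv;
}
```

Note that v grows without bound as the ray approaches straight up, so a real implementation has to cap or fade the sky near the poles.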

Autoaim

Autoaim is very useful when looking up and down is clunky. But when you can look up and down freely, it can get in the way. So TFE now allows you to enable or disable autoaim in the Game settings.


17 May 2022

Version 0.9

Version 0.9 has been a long time coming, taking much longer than originally anticipated. Version 0.9 is focused on sound playback and music accuracy, ambient sound support, and a few bug fixes.

iMuse

iMuse (Interactive Music Streaming Engine) was the last system needed to complete Dark Forces support in TFE. It is the dynamic music system that LucasArts developed and used in many of their games, such as Monkey Island 2, TIE Fighter and, of course, Dark Forces. See https://en.wikipedia.org/wiki/IMUSE for a general description.

iMuse, as used in Dark Forces for music, is basically scripted midi: the midi data itself contains sysex events, such as jumps (like goto in C). The host (the Dark Forces game code) can interact with iMuse playback, for example by manually jumping to different places in the midi or changing tracks or midi files on the fly.

There are often loops in the midi, and the cutscene playback system would set hooks at certain points that cause iMuse to break out of those loops or jump to new places in the midi at specific points in the cutscene. This allows for musical cues, looping while waiting for an animation to finish, and similar control.
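The loop-and-hook behavior can be modeled with a small sequencer in which jump events stand in for the sysex jump markers. This is a simplified, hypothetical model, not the iMuse data format: a jump with hook ID 0 always fires (forming a loop), while a jump with a nonzero hook ID only fires once the host has set a matching hook.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// One event in a track: a note, or a jump standing in for a sysex jump marker.
struct Event {
    enum class Kind { Note, Jump } kind;
    int value;       // Note: note number. Jump: index of the target event.
    int hookId = 0;  // Jump only: 0 = always taken, otherwise must match the host hook.
};

class Sequencer {
public:
    explicit Sequencer(std::vector<Event> events) : events_(std::move(events)) {}

    // Called by the host (game or cutscene code) to release a hooked jump.
    void setHook(int hook) { hook_ = hook; }

    // Advance to the next note, following any jumps; returns -1 at the end of the track.
    int nextNote() {
        while (pos_ < events_.size()) {
            const Event& e = events_[pos_];
            if (e.kind == Event::Kind::Jump) {
                bool take = (e.hookId == 0) || (e.hookId == hook_);
                if (take && e.hookId != 0) hook_ = 0;  // a hook is consumed once used
                pos_ = take ? static_cast<std::size_t>(e.value) : pos_ + 1;
                continue;
            }
            ++pos_;
            return e.value;
        }
        return -1;
    }

private:
    std::vector<Event> events_;
    std::size_t pos_ = 0;
    int hook_ = 0;
};
```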

Dark Forces used iMuse triggers to set callbacks when certain points of the midi are hit, allowing for smooth transitions between tracks (“stalk” and “fight”) based on what is happening in-game. A level starts with the stalk track playing, and when a certain number of enemies become aggressive for long enough, the music transitions to the fight track by taking the next transition and then looping. Finally, when things have calmed down, the music transitions back to the stalk track. To help with transitions, iMuse can intelligently sustain individual notes from one track while the next track plays, so that the notes don’t suddenly stop too early.
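The stalk/fight decision can be sketched as a small state machine with hysteresis. The enemy threshold and the two delays are made-up parameters, since the values Dark Forces actually uses are not given here, and in the game a state change would only request a transition that iMuse then takes at the next suitable trigger point in the music.

```cpp
// Which track should be playing.
enum class MusicState { Stalk, Fight };

// Hypothetical sketch: switch to Fight once enough enemies have been aggressive
// for `enterDelay` seconds, and back to Stalk after `calmDelay` seconds below
// the threshold. All parameters are illustrative.
class MusicDirector {
public:
    MusicDirector(int enemyThreshold, float enterDelay, float calmDelay)
        : threshold_(enemyThreshold), enterDelay_(enterDelay), calmDelay_(calmDelay) {}

    // Call once per game update with the current number of aggressive enemies.
    MusicState update(int aggressiveEnemies, float dt) {
        const bool busy = aggressiveEnemies >= threshold_;
        if (state_ == MusicState::Stalk) {
            timer_ = busy ? timer_ + dt : 0.0f;  // must stay busy continuously
            if (timer_ >= enterDelay_) { state_ = MusicState::Fight; timer_ = 0.0f; }
        } else {
            timer_ = busy ? 0.0f : timer_ + dt;  // must stay calm continuously
            if (timer_ >= calmDelay_) { state_ = MusicState::Stalk; timer_ = 0.0f; }
        }
        return state_;
    }

private:
    int threshold_;
    float enterDelay_;
    float calmDelay_;
    MusicState state_ = MusicState::Stalk;  // levels start on the stalk track
    float timer_ = 0.0f;
};
```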

iMuse has many other features, such as fading and panning sound and playing multiple tracks at once, as well as features not used by Dark Forces, such as streaming music.

Games did not link iMuse in directly; instead, the iMuse binary was shipped with the game data. The game used a header file, along with some glue code, to communicate with the iMuse binary, similar to modern DLLs. The game would call iMuse functions specified in the header file, which then called an internal command dispatch function, which finally called the internal equivalent of the requested function.

TFE simplifies the system by integrating the iMuse code into the project directly and removing the command dispatch system; instead, the game code calls the iMuse commands directly. iMuse features that are not used in Dark Forces are mostly stubbed out, because I have no good way to test them.
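The difference between the two call styles can be shown with a toy example. The command IDs, function names, and single-sound state are hypothetical and do not come from the iMuse headers; the point is only that the header-style wrappers route every call through one dispatch function, whereas the simplified approach calls the implementation directly.

```cpp
// Hypothetical internal implementations with a tiny bit of state.
inline int g_activeSound = -1;

inline int startSoundImpl(int id) {
    g_activeSound = id;
    return 0;
}

inline int stopSoundImpl(int id) {
    if (g_activeSound != id) return -1;  // not playing
    g_activeSound = -1;
    return 0;
}

// Original style: every header-level call goes through one dispatcher.
enum Command : int { kStartSound, kStopSound, kCommandCount };
using Handler = int (*)(int);

inline int dispatch(int cmd, int arg) {
    static const Handler table[kCommandCount] = { startSoundImpl, stopSoundImpl };
    if (cmd < 0 || cmd >= kCommandCount) return -1;  // unknown command
    return table[cmd](arg);
}

namespace via_dispatch {
inline int startSound(int id) { return dispatch(kStartSound, id); }
inline int stopSound(int id) { return dispatch(kStopSound, id); }
}  // namespace via_dispatch

// Simplified style: call the implementation directly, no dispatch layer.
namespace direct {
inline int startSound(int id) { return startSoundImpl(id); }
inline int stopSound(int id) { return stopSoundImpl(id); }
}  // namespace direct
```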

Rather than calling into the driver directly, the iMuse code sends midi commands to the TFE low-level midi player, which handles the midi device and acts as the driver layer for iMuse. In order to run in the background as the game played, iMuse set up an interrupt handler that ran the update every 6944 µs (about 6.9 ms), or about 144 Hz. For TFE, instead of an interrupt handler, I added support for a callback on the midi thread, in order to avoid thread contention, which fires at a fixed time step. Of course this caused complications, such as interactions between the Landru/Cutscene thread (with updates running at ~291.3 Hz) and iMuse, necessitating the use of atomics for a few of the iMuse state variables.
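A fixed time step driven from a thread that wakes at irregular intervals is usually handled with an accumulator, as in the sketch below. It uses a period of 6944 µs, which corresponds to the roughly 144 Hz update rate mentioned above; the class and variable names are hypothetical, and this is not TFE's midi thread code. Integer microseconds avoid the drift that repeated floating-point subtraction would introduce.

```cpp
#include <atomic>
#include <chrono>
#include <functional>
#include <utility>

class FixedStepTimer {
public:
    using Micros = std::chrono::microseconds;

    FixedStepTimer(Micros step, std::function<void()> callback)
        : step_(step), callback_(std::move(callback)) {}

    // Called from the midi thread's loop with the real time elapsed since the
    // previous call. Runs the callback once per whole step and keeps the
    // remainder for next time. Returns how many steps ran.
    int advance(Micros elapsed) {
        accumulator_ += elapsed;
        int steps = 0;
        while (accumulator_ >= step_) {
            accumulator_ -= step_;
            callback_();
            ++steps;
        }
        return steps;
    }

private:
    Micros step_;
    Micros accumulator_{0};
    std::function<void()> callback_;
};

// Example of state written by the timer callback and read from another thread
// (for instance a cutscene thread), kept atomic so no lock is needed.
inline std::atomic<int> g_updateCount{0};
```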

iMuse is also used for low-level digital audio playback and normalization, linking to the TFE AudioSystem thread for updates in a similar way to how midi was implemented. In the original code, the digital audio update occurred in the same interrupt handler as the midi update. It would ask the driver whether it was ready for the next set of sound samples (512), and if not, skip the update. For TFE, the iMuse digital audio update is instead called by the low-level audio thread, which requests the samples directly. Interestingly, iMuse features such as sound fading and triggers work the same way for digital audio as for music tracks.
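A pull-style audio update of this kind can be sketched as a function that fills one 512-sample block by summing the active channels and clamping to the 16-bit range. The `Channel` struct, the 0 to 128 volume scale, and the clamping strategy are assumptions for illustration, not iMuse's actual mixing or normalization code.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t kBlockSize = 512;  // samples requested per update

// Hypothetical playing sound: its samples, read position, and volume (0..128).
struct Channel {
    std::vector<std::int16_t> samples;
    std::size_t pos = 0;
    int volume = 128;
};

// Called by the audio thread when it needs the next block. Each active channel
// contributes one scaled sample per output sample; the sum is clamped.
inline void mixBlock(std::vector<Channel>& channels, std::int16_t* out) {
    for (std::size_t i = 0; i < kBlockSize; ++i) {
        int sum = 0;
        for (Channel& c : channels) {
            if (c.pos < c.samples.size()) {
                sum += c.samples[c.pos++] * c.volume / 128;
            }
        }
        out[i] = static_cast<std::int16_t>(std::clamp(sum, -32768, 32767));
    }
}
```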

In terms of complexity, iMuse is very similar to the INF system, which is also a pseudo-scripted system. Fortunately, iMuse is the last major system needed to support Dark Forces. Except for bug fixes, the reverse-engineering phase for Dark Forces is complete.

Game Sound

On top of iMuse, Dark Forces basically implements two sound systems: one for Landru (cutscenes), most likely taken directly from TIE Fighter, and the other using Dark Forces game data and Jedi math functions to handle in-game sounds. Because only 8 digital audio channels are available (though iMuse, and now TFE, support 16), sound priority is very important, and every loaded in-game sound is given a priority so that you always hear the most important sounds.
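Priority-based channel allocation with a fixed channel count can be sketched as follows: a new sound takes a free channel if there is one; otherwise it replaces the least important playing sound, but only if it is more important itself. The `VoicePool` name and the convention that a larger number means higher priority are assumptions; the exact rules Dark Forces uses are not described here.

```cpp
#include <array>
#include <cstddef>

// A playing sound; soundId < 0 marks a free channel. Larger priority = more important (assumption).
struct Voice { int soundId = -1; int priority = 0; };

template <std::size_t N>
class VoicePool {
public:
    // Returns the channel used, or -1 if the new sound loses to every playing sound.
    int play(int soundId, int priority) {
        std::size_t victim = 0;
        for (std::size_t i = 0; i < N; ++i) {
            if (voices_[i].soundId < 0) {  // free channel: take it
                voices_[i] = {soundId, priority};
                return static_cast<int>(i);
            }
            if (voices_[i].priority < voices_[victim].priority) victim = i;
        }
        if (voices_[victim].priority >= priority) return -1;  // not important enough
        voices_[victim] = {soundId, priority};               // steal the least important
        return static_cast<int>(victim);
    }

    void stop(std::size_t channel) { voices_[channel] = Voice{}; }
    const Voice& voice(std::size_t channel) const { return voices_[channel]; }

private:
    std::array<Voice, N> voices_{};  // e.g. N = 8 for the original channel count
};
```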

The sound falloff in TFE was only approximated up to this point, and I knew it would not be accurate. 3D sound in Dark Forces can be heard from up to 150 units away and plays at full volume within 30 units. Between 30 and 150 units from the eye, the volume is linearly interpolated.
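The falloff rule translates directly into code. This minimal sketch uses floating point and a caller-supplied maximum volume; the original game works in fixed point, and its volume scale is not given here.

```cpp
constexpr float kFullVolumeDist = 30.0f;   // full volume at or inside this distance
constexpr float kMaxAudibleDist = 150.0f;  // silent at or beyond this distance

// Volume for a 3D sound at `distance` units from the eye, linear between the two limits.
inline float soundVolume(float distance, float maxVolume) {
    if (distance <= kFullVolumeDist) return maxVolume;
    if (distance >= kMaxAudibleDist) return 0.0f;
    float t = (distance - kFullVolumeDist) / (kMaxAudibleDist - kFullVolumeDist);
    return maxVolume * (1.0f - t);
}
```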

Ambient sounds were also implemented. These are objects placed in the world that emit 3D sound that loops continuously. They are used for effects such as the wind ambient sounds in Nar Shaddaa.

Other Features

Other features that were implemented include: the ability to bind the mouse wheel to controls, such as switching weapons (the new default); the use of the mouse wheel in various game screens, such as mission briefings; System UI scaling to better support 1440p and 4k; a proper crash handler that writes out minidumps in the event of a crash; and a variety of fixes. In addition, the System UI sound panel is now working and lets you adjust sound fx volume and music volume separately for cutscenes and in-game. The Sound config also has an option to enable 16 audio channels for iMuse, and the Game config allows you to disable the in-game fight music if desired.

Version 1.0 Plans

With version 0.9 finally released, the next major release will be version 1.0 - complete support for Dark Forces in TFE. Unlike the 0.9 release, the plan is to split up the release into several smaller releases. There will be 2 main parts:

- Bug Fixes. I plan on splitting bug fixes by system and doing one or more 0.9x releases for each system. Examples include AI bugs, weapon bugs, collision issues, INF issues, etc.

- The GPU renderer - the last major feature for version 1.0. The GPU renderer will support both shearing for looking up and down (which emulates the software renderer) and accurate perspective projection to allow the player to look up and down further without distortion like modern 3D games. Initially it will support 8-bit color emulation with optional colormap interpolation to remove banding. Later, after version 1.0 is released, true color rendering, dynamic lights and other features will be added.
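Colormap interpolation can be sketched like this: instead of rounding the light level and doing a single palette lookup, the two neighboring light levels are looked up and their colors blended in RGB. The 32-level colormap layout and the function names are assumptions for illustration, not TFE's GPU shader code.

```cpp
#include <array>
#include <cmath>
#include <cstdint>

struct Rgb { float r, g, b; };

constexpr int kLightLevels = 32;  // assumption: 32 light levels per colormap
using Palette = std::array<Rgb, 256>;
using ColorMap = std::array<std::array<std::uint8_t, 256>, kLightLevels>;

// 8-bit style: round the light level and do one lookup (produces banding).
inline Rgb shadeNearest(const Palette& pal, const ColorMap& cmap, std::uint8_t index, float light) {
    int level = static_cast<int>(std::lround(light));
    level = level < 0 ? 0 : (level >= kLightLevels ? kLightLevels - 1 : level);
    return pal[cmap[level][index]];
}

// Interpolated: blend the colors from the two neighboring light levels.
inline Rgb shadeInterpolated(const Palette& pal, const ColorMap& cmap, std::uint8_t index, float light) {
    float clamped = std::fmin(std::fmax(light, 0.0f), static_cast<float>(kLightLevels - 1));
    int lo = static_cast<int>(clamped);
    int hi = lo + 1 < kLightLevels ? lo + 1 : lo;
    float t = clamped - static_cast<float>(lo);
    const Rgb& a = pal[cmap[lo][index]];
    const Rgb& b = pal[cmap[hi][index]];
    return { a.r + (b.r - a.r) * t, a.g + (b.g - a.g) * t, a.b + (b.b - a.b) * t };
}
```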

24 Dec 2021

Version 1.0 Release Delayed

This is obvious by now, but The Force Engine version 1.0 will not hit this year. There has been a lot of progress towards completing the iMuse reverse-engineering work for version 0.9, but that will spill into early January.

2022 Plans

The iMuse work is roughly 70% complete, which means that version 0.9 is expected to land in the second week of January. At that point, Dark Forces support in TFE will be feature complete and the reverse-engineering process for Dark Forces will be finished. The following few weeks will be dedicated to bug and inaccuracy fixing, with version 1.0 planned for late January or early February.

February will be spent finishing the GPU Renderer, which will handle looking up and down with proper perspective by default - though the shearing effect will be available. The initial release of the renderer will only allow for palette emulation with true color options and other effects coming later. I will talk more about the GPU renderer in a future post. In short it will allow for much better performance when running at high resolutions and refresh rates but maintain the proper look, including the way objects sort with the floor and ceiling, the way they sort with walls, the way portals enable “non-euclidean” geometry in some cases. At this point, the voxel code will also make its way into the master branch, finally properly adding voxel support.

March will see the release of an early version of the built-in level editor and other asset tools, including some initial basic support for voxel replacements. These tools will be expanded even further when working on Outlaws, including support for Outlaws engine enhancements and non-vanilla Dark Forces mods using those enhancements. Finally there will be smaller quality of life enhancements, and bug fixes. Early March will also be spent working towards the Mac and Linux release, with the help of gilmorem560 (Matthew Gilmore) and others.

Finally, once the Mac and Linux ports are working and the initial tools have been released, it will be time to focus on adding Outlaws support to TFE. As I mentioned previously, this will include adding support for Outlaws Jedi enhancements to the level editor and support for Outlaws formats in the asset tools.

2021 Retrospective

I thought it would be interesting to look back at 2021, in terms of TFE, and see how far we have come.

Early 2021 saw just a few commits to master. There were some improvements to the perspective correct 3DO texturing code. This feature, while it looked great, was not moved into the final code for performance reasons. There were also a few experiments with scripting, though mainly for future work. The main focus at this point, however, was the reverse-engineering work. At this point I was working in two locations - a branch of the main TFE code base, and the “code document” where the raw reverse-engineered code lived before being refactored and cleaned up for TFE.

Breaking Everything

TFE had existed in this strange state for some time, where things seemed to be working fairly well but most of the code was placeholder. I had originally written a sector renderer based on what was known about the Dark Forces formats, and then added the reverse-engineered classic renderer in late 2020, but you had to use a console command to use it. I had an INF system built on my existing understanding. But none of it was “real”. It was there so things could be tested and initial tools could be built.

At this point, it was time for it to become “real” and, so, in early February 2021 I ripped out the renderer, the INF system, the previous object system and initial scripting support - breaking everything.

The INF System

With the old code gone, I had to spend some time getting things compiling again. During this period there was no rendering, but I could test things through the debugger. In mid to late February, I stubbed out the Dark Forces sound system and started integrating the INF code I had previously reverse-engineered. The INF code was not yet complete, but the larger structures were there, and I could finally compile and begin testing the code.

During this process, I found that the INF system also touched a lot of level data, so I began stubbing out those interactions. Late February saw those sector functions getting integrated and becoming “real.”

Gap

Between late February and late March, there was a gap of about 1 month. Here I was focused purely on reverse-engineering the code, filling in missing pieces of the INF system, level loading, and more.

Level Loading

The last few days of March were spent porting all of the reverse-engineered Dark Forces level loading code to TFE. This meant moving code to the correct locations, such as moving sector-related code out of the INF system. During this phase I split out the “level data” from the renderer, INF system, and collision system. I had previously reverse-engineered the sector renderer, and it had been accessible in TFE using the console, but making it “real”, by hooking it up correctly, meant finding code I missed and correcting past mistakes.

Reverse-engineering the INF system was a massive undertaking, and I wasn’t done yet. Early April would show how much work was left: digging through the INF code in Dark Forces, merging the new code into TFE, and fixing a seemingly never-ending stream of INF bugs and issues.

Gameplay

By mid-April I had moved on to the game code. Mid-April saw the integration of the player inventory, pieces of whose structures had originally lived in the INF system because it needed them stubbed out (keys and the like). April also saw the introduction of the logic system, though there was still a lot more to figure out here. The player finally got its own file and the game code started to take shape. At this point the way Dark Forces handled timing became much clearer, and the game now ran at the correct speed. Mid-April to mid-May was dedicated to reverse-engineering the gameplay code in preparation for what was to come, so there was little activity in the TFE branch.
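The post does not describe how Dark Forces measures game time, only that understanding it made the game run at the correct speed. A common approach in DOS-era engines, and the assumption behind this minimal sketch, is a fixed tick rate: real elapsed time is converted into whole game ticks, and the fractional remainder is carried forward so game speed does not depend on frame rate. The rate of 145 ticks per second and the `TickClock` name are assumptions for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Assumed tick rate for illustration; not stated in the post.
constexpr double kTicksPerSecond = 145.0;

// Converts real elapsed time into whole game ticks. The fractional part is kept
// in an accumulator so that no time is lost between frames.
class TickClock {
public:
    // Advance by 'seconds' of real time and return the total tick count so far.
    std::uint32_t advance(double seconds) {
        assert(seconds >= 0.0);
        accumulator_ += seconds * kTicksPerSecond;
        const auto whole = static_cast<std::uint32_t>(accumulator_);
        accumulator_ -= whole;
        ticks_ += whole;
        return ticks_;
    }

    std::uint32_t ticks() const { return ticks_; }

private:
    double accumulator_ = 0.0;  // fractional ticks not yet consumed
    std::uint32_t ticks_ = 0;   // whole ticks elapsed since start
};
```

Game logic then advances in whole ticks, so a machine rendering at 30 or 300 frames per second sees the same amount of game time per real second.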

Collision Detection

Mid to late May was spent integrating the reverse-engineered collision detection code from Dark Forces. So far I have talked about “moving code” and integrating it as if it were a one-way process. It is not. As reverse-engineered code is integrated, it needs a place to live. Because I don’t have access to the original files, function names, variable names, structures, or member names, during reverse-engineering it all lives together in my “code document” as one mass of code. As I integrate it into TFE, I have to figure out how to organize the files and how to connect the code with what already exists. Then I see what I missed, which parts I forgot to reverse-engineer, and what mistakes I made. Then I go back to the “code document” and the original game and work through the mistakes and missing code.

Hit Effects and Projectiles

During this period the Hit Effects system was also integrated. This is the system that allows projectiles and other systems to spawn animated effects on hit: it handles explosions, “puffs” as projectiles hit, and splashes when objects hit water. The other side of this was the projectile system, which is responsible for spawning projectiles, updating them, handling collision detection, and then spawning hit effects. Projectiles use an update callback that is assigned when they are created. This allows thermal detonators to arc, mines to fall, and blaster bolts to move. In late May the Sound System was finally fully stubbed out and its API took shape.
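A minimal sketch of the callback pattern described above, assuming a plain struct with a function pointer set at creation time. The `Projectile` layout, the function names, the y-up axis, and the gravity constant are hypothetical; they illustrate the idea rather than reproduce the Dark Forces code.

```cpp
#include <cassert>
#include <vector>

struct Projectile;
// Per-projectile behavior, chosen once when the projectile is created.
using ProjectileUpdateFn = void (*)(Projectile&, double dt);

struct Projectile {
    double x = 0.0, y = 0.0, z = 0.0;     // position (y is "up" in this sketch)
    double vx = 0.0, vy = 0.0, vz = 0.0;  // velocity
    ProjectileUpdateFn update = nullptr;
};

constexpr double kGravity = -9.8;  // placeholder, not a Dark Forces value

// Blaster bolt: travels along its velocity.
void updateBolt(Projectile& p, double dt) {
    p.x += p.vx * dt;
    p.y += p.vy * dt;
    p.z += p.vz * dt;
}

// Thermal detonator: gravity bends the path into an arc.
void updateArc(Projectile& p, double dt) {
    p.vy += kGravity * dt;
    updateBolt(p, dt);
}

// Mine: ignores horizontal velocity and simply falls.
void updateFall(Projectile& p, double dt) {
    p.vy += kGravity * dt;
    p.y += p.vy * dt;
}

Projectile createProjectile(ProjectileUpdateFn fn, double vx, double vy, double vz) {
    assert(fn != nullptr);
    Projectile p;
    p.vx = vx;
    p.vy = vy;
    p.vz = vz;
    p.update = fn;  // the callback decides how this projectile moves from now on
    return p;
}

// The per-frame loop does not need to know which kind each projectile is.
void updateProjectiles(std::vector<Projectile>& list, double dt) {
    for (Projectile& p : list) p.update(p, dt);
}
```

Because the behavior is chosen once at creation, the per-frame loop stays a single call per projectile with no type checks.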

Logics and Pickups

In April there was some initial work on object logics, and this work continued into June. During this period the animation logic was added, which allows objects like the shield pickups to animate. The projectile logic function was fully formed, connecting projectiles to the logic system. In mid-June I finally factored the object/INF messaging system out of the INF code. Originally I thought it was an INF feature, since many INF interactions are done by passing messages to sectors, lines, and triggers, but it turns out the system is also used to pass messages to objects.
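One way to picture a messaging system shared by level geometry and objects is a common receiver interface, so the sender never needs to know what kind of target it is addressing. In this sketch, `Message`, `MessageTarget`, `Sector`, `GameObject`, and `sendMessage` are hypothetical names, and the three message kinds are placeholders rather than the actual Dark Forces message set.

```cpp
#include <cassert>
#include <vector>

// Placeholder message kinds, not the real Dark Forces set.
enum class Message { Trigger, Wakeup, Damage };

// Anything that can receive a message: sectors, lines, triggers, or objects.
class MessageTarget {
public:
    virtual ~MessageTarget() = default;
    // Returns true if the target handled the message.
    virtual bool onMessage(Message msg) = 0;
};

class Sector : public MessageTarget {
public:
    int triggerCount = 0;
    bool onMessage(Message msg) override {
        if (msg != Message::Trigger) return false;
        ++triggerCount;  // e.g. start an elevator moving
        return true;
    }
};

class GameObject : public MessageTarget {
public:
    bool awake = false;
    int hits = 0;
    bool onMessage(Message msg) override {
        switch (msg) {
            case Message::Wakeup: awake = true; return true;
            case Message::Damage: ++hits; awake = true; return true;
            default: return false;
        }
    }
};

// Single entry point for every kind of target; returns how many handled it.
int sendMessage(const std::vector<MessageTarget*>& targets, Message msg) {
    int handled = 0;
    for (MessageTarget* target : targets) {
        assert(target != nullptr);
        if (target->onMessage(msg)) ++handled;
    }
    return handled;
}
```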

Late June saw the integration of the “pickup” update function, which meant it was now possible for the player to pick up objects, such as ammo and shields.

The Task System and Game Loop

During the previous few months of work, it was becoming increasingly clear that game behavior was too dependent on the original “task system” for me to ignore it. It was at this point - now July - that I began the very painful task of reverse-engineering and integrating the task system. Late July saw the introduction of the main game entry point, “darkForcesMain”, which used the new “game system” that will allow TFE to run different games. This month saw a massive refactoring to use the proper reverse-engineered game loop. By the end of July the core game loop was taking shape.
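The post does not describe how the task system works internally. As an assumption for illustration, this sketch models a cooperative task as a resumable function: each call runs one slice of work, the task's own `state` field records where to resume, and returning `false` ends the task. `TaskScheduler` and `DoorTask` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// A task runs one slice of work per call and returns false when it has finished.
using TaskFn = std::function<bool()>;

// Minimal cooperative scheduler: every live task gets one slice per frame.
class TaskScheduler {
public:
    void add(TaskFn fn) {
        assert(static_cast<bool>(fn));
        tasks_.push_back(std::move(fn));
    }

    // Run each task once; tasks that report completion are dropped.
    // (Adding tasks from inside a running task is not supported in this sketch.)
    void runFrame() {
        std::vector<TaskFn> alive;
        for (TaskFn& task : tasks_) {
            if (task()) alive.push_back(std::move(task));
        }
        tasks_ = std::move(alive);
    }

    std::size_t count() const { return tasks_.size(); }

private:
    std::vector<TaskFn> tasks_;
};

// Example task: open a door over several frames, hold it, then close it.
// 'state' records where to resume on the next call, standing in for the
// execution point a real task system would preserve across a yield.
struct DoorTask {
    int state = 0;
    int openAmount = 0;
    int holdFrames = 0;

    bool operator()() {
        switch (state) {
            case 0:  // opening
                if (++openAmount < 3) return true;  // yield until next frame
                state = 1;
                holdFrames = 2;
                return true;
            case 1:  // holding open
                if (--holdFrames > 0) return true;
                state = 2;
                return true;
            case 2:  // closing
                return --openAmount > 0;  // false once fully closed
        }
        return false;
    }
};
```

Keeping the resume point inside the task is what lets logic that reads like one long sequence be spread across many frames without blocking the main loop.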

The first two thirds of August were spent reverse-engineering more game code, but this period also saw file searching abstracted to make file handling simpler and to handle mods. Towards the end, a massive amount of code was integrated into TFE. This included a lot more refactoring, moving Jedi-related code into TFE_Jedi/, converting the various engine-level namespaces to TFE_Jedi, and cleaning up the Jedi Renderer.

Towards the end of August there was a lot of work integrating the HUD code, including the off-screen buffers that Dark Forces uses to avoid redrawing every HUD element each frame. I also moved over more of the Jedi memory management code to make porting reverse-engineered code easier, started properly loading data and starting up the various systems, and finished the Dark Forces game startup.
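A minimal sketch of the off-screen buffer idea, assuming each HUD element caches its own pixels and only re-renders them when its displayed value changes; every frame it is merely copied into the frame. `HudCounter` and its methods are hypothetical, and the fill stands in for real glyph drawing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical HUD element (e.g. an ammo counter) that owns an off-screen buffer.
class HudCounter {
public:
    HudCounter(int w, int h)
        : w_(w), h_(h), buffer_(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 0) {}

    // Re-render the off-screen buffer only when the displayed value changes.
    void setValue(int value) {
        if (value == value_) return;
        value_ = value;
        redraw();
    }

    // Copy the cached pixels into the frame; much cheaper than redrawing.
    void blit(std::vector<std::uint8_t>& frame, int frameWidth, int x, int y) const {
        assert(x >= 0 && y >= 0 && x + w_ <= frameWidth);
        assert(static_cast<std::size_t>((y + h_) * frameWidth) <= frame.size());
        for (int row = 0; row < h_; ++row)
            for (int col = 0; col < w_; ++col)
                frame[(y + row) * frameWidth + (x + col)] = buffer_[row * w_ + col];
    }

    int redrawCount() const { return redraws_; }

private:
    void redraw() {
        ++redraws_;
        // Stand-in for real glyph drawing: fill with a color derived from the value.
        std::fill(buffer_.begin(), buffer_.end(), static_cast<std::uint8_t>(value_ & 0xFF));
    }

    int w_, h_;
    int value_ = -1;  // sentinel so the first setValue always draws
    int redraws_ = 0;
    std::vector<std::uint8_t> buffer_;
};
```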

The end of August saw the player controller integrated, as well as the initial weapon code. The automap was also integrated. I also spent time converting many systems back to tasks, which continued to cause issues. At this point level loading was finished, object-in-sector assignment issues were fixed, and the level loading screen was displayed. Some of the AI code was integrated, though there was still a lot of work to do here.

Core Game Loop

During September the core game loop started really coming together. The code was switched to using the original sin/cos tables, which fixed various rendering issues with the Automap, palette based effects were integrated, and the HUD code was fully integrated and displayed properly. The classic renderer, reverse-engineered many months prior, was finally properly hooked up. It was finally “real.” I could see again - after almost 7 months of most of the game not displaying properly because the data was not in place and the renderer not hooked up.
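A lookup table turns sine and cosine into a single array read, and cosine can reuse the sine table by offsetting the angle a quarter turn. TFE's fix was to use the original tables, presumably so results match the game exactly; this sketch computes its table instead, so it shows the lookup mechanism but would not reproduce the original values bit for bit. The angle resolution (4096 units per turn), the 16.16 output format, and `SinCosTable` are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

// Hypothetical format: 4096 angle units per full turn, values in 16.16 fixed point.
constexpr int kAngleUnits = 4096;
constexpr std::int32_t kFixedOne = 1 << 16;
static_assert((kAngleUnits & (kAngleUnits - 1)) == 0, "angle wrap uses a bit mask");

class SinCosTable {
public:
    SinCosTable() : table_(kAngleUnits) {
        const double pi = std::acos(-1.0);
        for (int i = 0; i < kAngleUnits; ++i) {
            const double radians = 2.0 * pi * i / kAngleUnits;
            table_[i] = static_cast<std::int32_t>(std::lround(std::sin(radians) * kFixedOne));
        }
        assert(table_[kAngleUnits / 4] == kFixedOne);  // a quarter turn is exactly 1.0
    }

    // Any integer angle is valid; it wraps to one full turn.
    std::int32_t sin(int angle) const { return table_[wrap(angle)]; }
    // cos(a) == sin(a + quarter turn), so one table serves both.
    std::int32_t cos(int angle) const { return table_[wrap(angle + kAngleUnits / 4)]; }

private:
    static int wrap(int angle) { return angle & (kAngleUnits - 1); }
    std::vector<std::int32_t> table_;
};
```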

With so much reverse-engineering work already done and all of the main systems coming online, things started to move quickly from here on out.

In early September the cheats were mostly finished, and the general “mission controls” were working - meaning the automap could be properly toggled, the headlamp worked, and various other features were accessible. Weapon drawing and animation were integrated. Player controls were then integrated, followed by player physics and collision. At this point, I was finally able to run around the levels again with proper controls and collision. Finally the rest of the player controller was finished. On September 12th, I posted the “TFE Core Game Loop Release Preview” video - just days after hooking up the renderer again.

The player weapon system was integrated at this point, but the individual weapon fire functions still needed to be reverse-engineered and brought over. On the 14th the Fist was integrated, which led to fixing various bugs. At this point I moved everything to the TFE allocator system so that levels could be flushed and reloaded. On the 15th the Mortar was integrated. The 16th and 17th saw the other weapons integrated as well, as the general patterns became clearer.
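The post does not describe how the TFE allocator works. One common way to make a whole level flushable, and the assumption behind this sketch, is a level-lifetime arena: everything allocated while the level is loaded comes from one block, and unloading releases it all in a single reset. `LevelArena` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>

// Hypothetical level-lifetime allocator: allocations are never freed one by one;
// the whole arena is reset when the level is flushed.
class LevelArena {
public:
    explicit LevelArena(std::size_t capacity)
        : storage_(new std::uint8_t[capacity]), capacity_(capacity) {}

    // Bump-pointer allocation; returns nullptr if the arena is full.
    // Alignments up to alignof(std::max_align_t) are supported.
    void* alloc(std::size_t size, std::size_t align = alignof(std::max_align_t)) {
        assert(align != 0 && (align & (align - 1)) == 0);
        const std::size_t start = (used_ + align - 1) & ~(align - 1);
        if (start + size > capacity_) return nullptr;
        used_ = start + size;
        return storage_.get() + start;
    }

    // Flush the level: every allocation made since the last reset is released at once.
    void reset() { used_ = 0; }

    std::size_t used() const { return used_; }

private:
    std::unique_ptr<std::uint8_t[]> storage_;
    std::size_t capacity_;
    std::size_t used_ = 0;
};
```

The trade-off is that nothing inside a level can be freed individually, which is acceptable when all of the data shares the level's lifetime.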

On the 17th, after getting through most of the player weapon handling code, I posted the “TFE: Dark Forces Weapons” video.

AI

At this point I started to focus on the AI, splitting off the basic actor code I had already integrated, knowing that the AI code would soon get much bigger. Initial AI work revolved around the mines, which are in essence both an AI actor and a projectile. Once mines worked, it was time to move on to exploding barrels and then generalize to exploders. Next up was scenery, which is also considered AI because it can animate and reacts to damage by changing states. The mouse bot was partially completed, and then I made a slight detour to prepare for version 0.7.

TFE needed a system to remap keys to actions. The system itself had been implemented previously; what hadn’t been implemented yet was the UI. So the UI was created, though it was not fully hooked up yet.

More AI

Late September saw a lot more AI work, with more reverse-engineering time required as gaps became evident during the integration process. Along with the AI, the Task system was being cleaned up and simplified. Finally the mouse bot was completed, but the AI journey was just getting started.

In Dark Forces, AI agents are split up into a number of actors, which all have little bits of functionality. With the introduction of the “troopers” - more of this functionality needed to be integrated.

On September 30th I posted the “TFE: AI System” video. By this point the “trooper” AI was complete, as well as the mouse bots, land mines, exploding barrels, and scenery (like the red lights in Secbase).

Flyers and Bosses

In early October I started work on “fliers”, which have yet more “actor” structures and code. At this point the AI code was complete enough that I was able to add several more enemies that had very little custom code. Then came the Sewer creature, which doesn’t use completely unique code but shares less code than any other enemy so far.

Next was the Kell Dragon, the first “boss” enemy to be integrated. These enemies differ from all of the previous ones in that most of their code is custom. Most of the regular enemies share code, with their initial settings determining their behaviors. But the bosses change that.

Turrets, Generators, and Vues

The Welder and Turrets were integrated, which also use mostly custom code. Fortunately they use fewer states and less code than the bosses. I also fixed some latent rendering bugs in this period and removed a lot of no longer used code. Finally VUE animations and Generator logic were integrated.

On October 14th, I posted the “TFE: VUE Animations, Enemy Generators, and Fixes” video.

Level Reloading

Until then, you could only load a single level and then had to restart the program to load another. Mid-October saw that finally fixed, with level skip cheats and fixes to level reloading. It also saw the ability to add new agents using the in-game UI. In late October player death and respawning were integrated, making the game loop feel more real. Jabba’s ship was now properly handled; its code had been reverse-engineered previously but never integrated until now.

At this point I started uploading “Pre-Core Game Loop” releases, with the idea of updating them regularly for testing until the Core Game Loop release was finally finished. When I ripped out all of the old code, the reverse-engineered classic renderer was removed along with it until it could be hooked up properly, and in the process various problems were fixed and corrections made to it. As a result, the renderer was pared back to the original fixed-point version, meaning these builds ran at 320x200 only.
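For readers unfamiliar with fixed-point rendering, this sketch shows 16.16 arithmetic, the kind of integer math DOS-era renderers used in place of floating point: the upper 16 bits hold the integer part and the lower 16 bits the fraction. The post does not specify the renderer's exact number format, so the format and the names `fixed16`, `mulFixed`, and `divFixed` are assumptions.

```cpp
#include <cassert>
#include <cstdint>

// 16.16 fixed point stored in a 32-bit integer.
using fixed16 = std::int32_t;
constexpr int kFracBits = 16;

constexpr fixed16 intToFixed(int v) { return static_cast<fixed16>(v * (1 << kFracBits)); }
constexpr double fixedToDouble(fixed16 v) { return static_cast<double>(v) / (1 << kFracBits); }

// Multiply: widen to 64 bits so the intermediate product cannot overflow,
// then drop the extra 16 fractional bits.
inline fixed16 mulFixed(fixed16 a, fixed16 b) {
    return static_cast<fixed16>((static_cast<std::int64_t>(a) * b) >> kFracBits);
}

// Divide: scale the dividend up first so the quotient keeps 16 fractional bits.
inline fixed16 divFixed(fixed16 a, fixed16 b) {
    assert(b != 0);
    return static_cast<fixed16>((static_cast<std::int64_t>(a) * (1 << kFracBits)) / b);
}
```

Every fractional value is limited to steps of 1/65536, which is why a floating-point renderer is a separate implementation rather than a simple type swap.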

People quickly found numerous bugs, some of which are still waiting to be fixed. Work on the boss AI continued, with new pre-release builds often coinciding with a new enemy being integrated. The Input Remapping was also finished during this period.

By early November, all of the enemies were finally integrated.

Towards Version 0.7

With the enemies all in place and the core game loop complete, it was time to re-implement the floating-point version of the Jedi renderer. I used the code from my original version of the floating-point classic renderer as reference, but I re-implemented it directly from the fixed-point renderer in order to capture all of the fixes and changes made in 2021.

On November 14th, I posted the “TFE: Widescreen & High Resolution Rendering” video.

Once the floating-point renderer was complete, it was time to finally prepare for the Core Game Loop release. This involved fixing more menu code, fixing crashes due to resolutions not divisible by 4, fixing various 3DO model rendering bugs, and many other issues. But the biggest new feature was the mod loader - it was finally possible to play mods using TFE.

On November 18th, version 0.7 was released and the core game loop was complete.

Version 0.8

With the Core Game Loop complete, it was time to tackle the cutscene system. During this period I also fixed many bugs and cleaned up the renderer code, but most of the time was spent working through the “Landru” system. The Landru system uses its own “actor” model for handling images and sounds. It also has its own sound and music management code, and even its display code is different from the rest of the game. Numerous systems, such as the fading system, needed to be converted from DOS-style while loops to state machines.
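A DOS program can sit in a loop until a fade finishes, but a modern host has to get control back every frame to present the image and process input. This minimal sketch shows the general conversion for a fade-out: the blocking loop (shown only as a comment) becomes a small state machine advanced once per frame. `Fade`, `FadeState`, the 0..255 brightness range, and `waitForVsync` are hypothetical.

```cpp
#include <cassert>

// Before (DOS style), blocking until the fade completes, e.g.:
//   while (brightness > 0) { brightness -= step; waitForVsync(); }

// After: the same fade as a state machine that never blocks.
enum class FadeState { Idle, FadingOut, Done };

struct Fade {
    FadeState state = FadeState::Idle;
    int brightness = 255;  // hypothetical 0..255 palette brightness
    int step = 0;

    void start(int stepPerFrame) {
        assert(stepPerFrame > 0);
        step = stepPerFrame;
        brightness = 255;
        state = FadeState::FadingOut;
    }

    // Called once per frame; does one step of work and returns immediately.
    void update() {
        if (state != FadeState::FadingOut) return;
        brightness -= step;
        if (brightness <= 0) {
            brightness = 0;
            state = FadeState::Done;
        }
    }
};
```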

And, finally, on December 5th, I posted a video and posted the official 0.8 release.

Today

That brings us to today. I have been spending the last few weeks reverse-engineering the iMuse system, and prior to that had successfully integrated the game music module that interfaces with iMuse.

It has been a long road and a wild ride. We didn’t quite make it to version 1.0, but we came really close. The renderer, AI, INF system, in-game UI, cutscenes, game systems - all of it derived directly from the original executable through reverse-engineering, and that reverse-engineering work is almost complete.

You can now watch the cutscenes, though the music still has issues, create a new agent and play the game from beginning to end. You have all of the relevant in-game UI, the mission briefings, the gameplay. Within mere weeks we will finally reach version 1.0.

18 Nov 2021

Version 0.7

After a long time in development, the Core Game Loop Release, version 0.7, is finally available. All of the weapons, items, and AI have been integrated, and Dark Forces can be completed from beginning to end using The Force Engine. TFE continues to support the original 320x200 fixed-point renderer, but now also properly supports high resolution rendering and widescreen (including ultrawide). TFE can load mods directly from ZIP files, which you can drag and drop onto the executable. It also has a built-in Mod Loader, accessible from the main menu.

To get mods to show up, create a Mods folder in your TFE directory, the Dark Forces game data directory, or under /ProgramData/TheForceEngine. In that Mods folder you can place the ZIP files, one per mod, or extract them into one sub-directory per mod.

Below is a video that marks the release of version 0.7 - the Core Game Loop release. This video shows part of Detention Center, and then small parts of three user levels from “back in the day.”

Note that mod support is currently in “Beta”: not all mods fully work, and some may even crash. These issues will be resolved in future versions. There are still bugs and issues, but Dark Forces can be finished from beginning to end using TFE.


What’s Next

Now that the CGL release is done, it is time to move to the next milestone on the Roadmap - Cutscenes, Mission Briefings, and completing the in-game UI (PDA). That will be version 0.8. The rest of this year is dedicated to finishing versions 0.8 and 0.9 (sound and iMuse), and finally version 1.0.

18 Sep 2021

The Core Game Loop release is proceeding at a brisk pace, with work on integrating the AI code about to start - which will be the focus of the work next week. Here are two videos that show the current state of the build:

Core Game Loop Release Preview

Weapons Complete

(with a few bugs)

30 Aug 2021

As The Force Engine (TFE) approaches the Core Game Loop Completion milestone, I thought it was time for another update and to talk about plans for reaching TFE version 1.0 and beyond.

Contents

Update